GAMEDEV.NET
Real-time software global illumination?

Author:
I am looking at Unreal's Lumen system, and it seems to basically boil down to:
- Assign a bunch of sampling points that lie along the surface of meshes.
- Interpolate between those sampling points when rendering diffuse and specular indirect lighting at each pixel.

This seems very intuitive to me, but…
- How are these points being decided?
- How are the nearest relevant sampling points found in the shader?

Sparse voxel trees, maybe. It's been a long time since I've thought about or looked into it. But instead of a 3D grid where every cell is exactly the same distance apart, neighbouring samples might physically sit at the next memory address (the next pixel over), while the world-space distance between those pixels is uneven. Essentially a linked list:

| Position (world units) | 1 | 20 | 21 | 28 | 29 | 30 |
|---|---|---|---|---|---|---|
| Value (1 = lit, 0 = shadowed) | 1 | 0 | 1 | 0 | 1 | 1 |

For simplicity, say the texture holds only 1 or 0 to represent full light or full shadow, you have 6 GI samples along this axis, and your fragment is being rendered at x = 25. Then you would need to sample 21 and 28 and perform a linear blend. To perform this lookup quickly, you could downsample this texture to get averages. Taking 30 − 1 shows the axis is 29 units long, while the texture is 6 pixels wide, so you assume 6/29 pixels per world unit. Dropping in x = 25, 25 × (6/29) ≈ 5.2 lands you at index 5; the position stored there is greater than 25, so you start marching backwards through the array. Probably not a good way to do it, but it gets you thinking about how to fetch data by world position from an array that is not laid out linearly in 3D.

I know that for characters, a while back, each sample could be placed anywhere and the GI was precomputed. As a character or other 3D model came close to samples, the CPU side would know which samples influence the model, instead of doing it per pixel.

How are these points being decided? I think at one point users would place a bunch by hand?
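A minimal Python sketch of the guess-then-march lookup described in the reply above, assuming the sample positions along one axis are sorted ascending and stored next to their values, as in the table. The function name `lookup_nonuniform` is hypothetical, and the initial guess uses (x − first) · (n − 1) / span, a slightly different scale than the reply's 25 × 6/29; either way, the guess only needs to be close, because the march fixes it.

```python
def lookup_nonuniform(positions, values, x):
    """Linearly interpolate irregularly spaced, sorted samples at world position x."""
    n = len(positions)
    # Clamp outside the sampled range.
    if x <= positions[0]:
        return values[0]
    if x >= positions[-1]:
        return values[-1]
    # Initial guess: pretend the samples were evenly spread over [first, last].
    span = positions[-1] - positions[0]
    i = int((x - positions[0]) * (n - 1) / span)
    i = min(max(i, 0), n - 2)
    # March backwards/forwards until positions[i] <= x < positions[i + 1].
    while positions[i] > x:
        i -= 1
    while positions[i + 1] <= x:
        i += 1
    # Linear blend between the two bracketing samples.
    t = (x - positions[i]) / (positions[i + 1] - positions[i])
    return values[i] + t * (values[i + 1] - values[i])


if __name__ == "__main__":
    positions = [1, 20, 21, 28, 29, 30]
    values = [1, 0, 1, 0, 1, 1]
    # x = 25 is bracketed by the samples at 21 and 28.
    print(lookup_nonuniform(positions, values, 25))
```

For x = 25 the bracket is 21 and 28, so t = 4/7 and the result is 1 + (4/7)(0 − 1) = 3/7. On a CPU, the standard library's `bisect.bisect_right` would find the same bracket by binary search instead of marching.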
In theory you could sample tons of locations, and wherever neighbouring samples all read the same value, merge them. For instance, a flat open meadow where every sample has 100% visibility really only needs one sample.

I don't know how Lumen works in detail, but I've picked some things up:
- There is a low-poly convex hull per object, which they call ‘cards’. (It's not precisely a convex hull; it can be a combination of multiple hulls to better approximate non-convex shapes. If there's a hole inside an object, the error must be high.)
- Those card polygons are UV mapped, and there is some way to map the real geometry to a point / texture sample on the card. So basically that's an approximation of a bijective lightmap. Things like a terrain heightmap may have a specific solution, I guess, since that's not a convex object.
- They use software ray tracing to calculate GI. To do so, they have an SDF per object. At larger distances (or on low settings), all objects are merged into a single global SDF. Up close they use the per-object SDFs, but I don't know which acceleration structure is used to find them.
- A hit point is then (probably) mapped to the card hull to sample the ‘lightmap’.
- They also do screen-space tracing for refinement.
- To shade the screen, they first generate small probes in a regular screen-space grid. Maybe each probe is 8x8 pixels, holding a hemispherical environment map with octahedral projection.
- Where the geometry pixels under a grid probe have too much variance (e.g. a close-up bush with a distant building behind it), they also maintain a list of additional, irregularly placed probes from a fixed budget to refine details.
- So they do not sample from the card lightmaps directly, but from the screen-space probes.

There is a talk from Daniel Wright about this, and they call it final gathering. It's seemingly the only attempt to explain at least a small part of Lumen.

So now you've got two very different answers.
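The guess above about screen probes (roughly 8x8 texels, each a hemisphere stored with an octahedral projection) can be made concrete with a common hemispherical octahedral mapping. This Python sketch assumes each probe has a local frame whose +z axis is the surface normal; the function names are hypothetical, and the thread does not confirm that Lumen uses exactly this variant.

```python
import math


def hemi_oct_encode(v):
    """Map a unit vector with z >= 0 (probe-local frame) into the square [-1, 1]^2."""
    x, y, z = v
    inv = 1.0 / (abs(x) + abs(y) + z)  # project onto the octahedron |x| + |y| + z = 1
    px, py = x * inv, y * inv
    # Rotate 45 degrees so the upper half of the octahedron fills the whole square.
    return (px + py, px - py)


def hemi_oct_decode(e):
    """Inverse of hemi_oct_encode: square coordinates back to a unit direction."""
    ex, ey = e
    tx, ty = (ex + ey) * 0.5, (ex - ey) * 0.5
    z = 1.0 - abs(tx) - abs(ty)
    length = math.sqrt(tx * tx + ty * ty + z * z)
    return (tx / length, ty / length, z / length)


def probe_texel(v, probe_res=8):
    """(column, row) of the probe_res x probe_res probe texel that stores direction v."""
    ex, ey = hemi_oct_encode(v)
    col = min(int((ex * 0.5 + 0.5) * probe_res), probe_res - 1)
    row = min(int((ey * 0.5 + 0.5) * probe_res), probe_res - 1)
    return col, row
```

The normal direction lands in the middle of the probe, and directions at the horizon end up on the square's edges. The octahedral projection spreads texels over the hemisphere more evenly than a plain latitude/longitude map, and it is cheap to evaluate in a shader.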
Opinion: It's eventually hopeless to figure out how Lumen works. It's a stack of so many hacks that, I guess, nobody outside Epic really knows how the entire thing works. The complexity seems extremely high, and I'm sure Epic is not happy about that and is still looking for a better way. The results are pretty good, though. But so far I have never seen it be truly dynamic beyond time of day; games seemingly do not use that option. And since dynamic objects are excluded from software Lumen anyway, it feels like baking would often be the better solution.

> JoeJ said: Opinion: It's eventually hopeless to figure out how Lumen works.

Not just that, they have changed/updated the solution multiple times already with… let's say varying results. The problem is that Lumen itself became a huge hack upon hack… without providing satisfying results (compared to PPM or PT).

It is not just this, but generally storing multiple variants of each mesh: a geometric representation (sometimes only a proxy one, with the complex one in the form of meshlets/clustered LOD for Nanite), a volumetric representation, a card (set of convex hulls) representation, etc. Most of those representations are inaccurate and not generic enough (they can't reasonably be generated/updated at runtime), etc. And all that still ends up being somewhat meh quality (precomputed lighting is always superior, Lumen isn't a truly dynamic solution, and the errors are very visible).

Author:
I did some work with Intel Embree and have some interesting results. First, the library is much faster than I thought was possible. This is a lightmap processed on the CPU:

The next step was a lower-resolution lightmap with more samples, and this also worked in real time. I combined the dynamic lightmap with standard shadow-mapped direct lighting. The 100,000-poly car mesh could be lit per vertex in 300 milliseconds. Not quite real time, but pretty close. I combined vertices into fewer samples.
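The author posts no code, so here is a rough Python sketch of the pipeline described in this post and the next: merge vertices into fewer sampling points, trace six rays per sampling point, then let each vertex read the result of the sample it was merged into. Everything here is an assumption for illustration: vertices are merged by snapping them to a uniform grid (the post does not say how they were combined), the six rays run along ±X, ±Y and ±Z, and a list of spheres stands in for the Embree scene, so the hypothetical `ray_hits_sphere` replaces a real Embree occlusion query. The output is a crude sky-visibility term per vertex, not full GI.

```python
import math

# Six axis-aligned ray directions (an assumption; the post only says "six raycasts").
SIX_DIRECTIONS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def ray_hits_sphere(origin, direction, center, radius, t_min=1e-4):
    """Stand-in for an Embree occlusion query: does a ray (unit direction) hit a sphere?"""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return False
    # Blocked if the farther root lies ahead of the origin (also true if the origin is inside).
    return -b + math.sqrt(disc) > t_min


def cluster_vertices(vertices, cell_size):
    """Merge vertices in the same grid cell into one sampling point at their centroid."""
    cell_to_sample = {}
    sums, counts, vertex_to_sample = [], [], []
    for v in vertices:
        key = tuple(math.floor(coord / cell_size) for coord in v)
        if key not in cell_to_sample:
            cell_to_sample[key] = len(sums)
            sums.append([0.0, 0.0, 0.0])
            counts.append(0)
        idx = cell_to_sample[key]
        for axis in range(3):
            sums[idx][axis] += v[axis]
        counts[idx] += 1
        vertex_to_sample.append(idx)
    samples = [tuple(s / n for s in total) for total, n in zip(sums, counts)]
    return samples, vertex_to_sample


def six_ray_visibility(point, spheres):
    """Fraction of the six rays that escape without hitting any occluder."""
    open_rays = sum(
        1 for d in SIX_DIRECTIONS
        if not any(ray_hits_sphere(point, d, c, r) for c, r in spheres)
    )
    return open_rays / len(SIX_DIRECTIONS)


def light_vertices(vertices, spheres, cell_size):
    """Trace once per merged sample, then copy each sample's value to its vertices."""
    samples, vertex_to_sample = cluster_vertices(vertices, cell_size)
    sample_light = [six_ray_visibility(p, spheres) for p in samples]
    return [sample_light[i] for i in vertex_to_sample], len(samples)
```

The cost scales with the number of merged samples rather than the number of vertices, which is the kind of saving the author reports when going from per-vertex lighting to fewer sampling points.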
Using six raycasts per sampling point, I got the same mesh to process in 4 milliseconds. I haven't finished, because I'm just testing right now and plan to come back to this later, but it looks like the approach will work.

Author:
> JoeJ said: There is a low-poly convex hull per object, which they call ‘cards’. (It's not precisely a convex hull; it can be a combination of multiple hulls to better approximate non-convex shapes. If there's a hole inside an object, the error must be high.) Those card polygons are UV mapped, and there is some way to map the real geometry to a point / texture sample on the card. So basically that's an approximation of a bijective lightmap. Things like a terrain heightmap may have a specific solution, I guess, since that's not a convex object.

I'm guessing that stuff operates on a per-vertex basis?

That's basically the same thing I did in my last example, without realizing it. For each vertex in the mesh, find the closest vertex in the “lighting mesh” and set the mesh vertex's second UV coordinates to that lighting vertex's lightmap coordinate. Store the light data in a texture, then perform a texture lookup in the vertex shader to get the vertex color. Updating a small texture is way cheaper than updating every vertex of a million-poly mesh.

Yeah, maybe. I don't know how Epic finds the closest point from the visible mesh to a convex hull, or if they even need to. They might not, because they gather from the screen-space probes, not from the hull directly.

I never found a nice way to solve this mapping problem, but I can mention some alternatives to the convex hulls:
- Using a volume, e.g. voxel cone tracing or DDGI. (Inefficient because it's not sparse, and there is error because we are not at the surface.)
- Using irregular, adaptive surface probes, e.g. EA's surfels.
  (IIRC, they generate them from screen space and add/remove surfels as we move through the scene.)
- Using a precalculated, persistent hierarchy of surfels, like I do. (This adds a big storage cost, but I need a robust representation because I also use the surfels for tracing.)

Here somebody seems to use adaptive surfels to make Sannikov's Radiance Cascades work in 3D: https://m4xc.dev/blog/surfel-maintenance/

Pretty interesting. I use this cascades optimization too, but I came up with it almost 20 years earlier and kept it a secret.

Author:
I just don't see the point of these techniques when only 11% of Steam users have a GeForce RTX 3070 or better and 40% have hardware about equivalent to an RTX 4060 or worse. The 4060 has about 3,000 cores and 8 GB of memory, which is pretty close to a GTX 1080 from 2016.

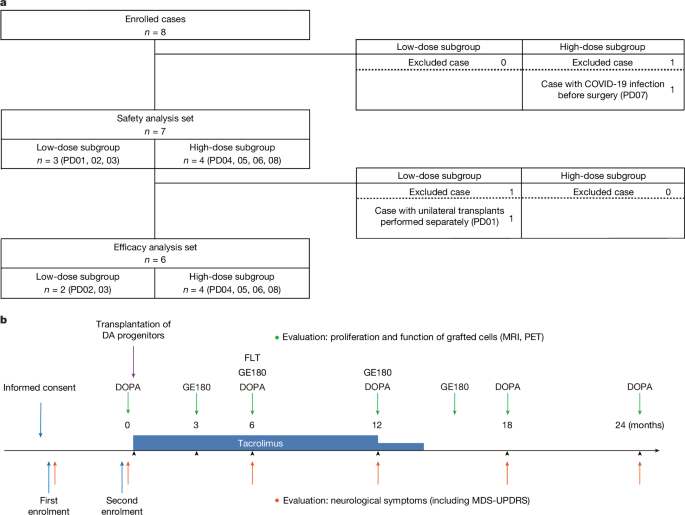

WWW.NATURE.COM
Phase I/II trial of iPS-cell-derived dopaminergic cells for Parkinson’s disease
Nature, published online: 16 April 2025; doi:10.1038/s41586-025-08700-0
After transplantation into the brain of patients with Parkinson’s disease, allogeneic dopaminergic progenitors derived from induced pluripotent stem cells survived, produced dopamine and did not form tumours, therefore suggesting safety and potential clinical benefits for Parkinson’s disease.

INDIEGAMESPLUS.COM
‘Cozy Dungeons’ Has You Fighting Monsters & Decorating Rooms

Cozy Dungeons is a game about clearing monsters out of crypts and castles, then renovating them for new folks to move in. There have been a lot of games that mix different play styles and genres. Often, when you are destroying everything in a dungeon, leaving piles of slime and blood behind, you don’t really think about who is going to end up living in or using the space afterwards. Well, this game has you defeating enemies and then putting the rooms back together to meet specific requirements, so that everyone can be happy in their home.

The actual dungeon fighting in Cozy Dungeons feels simple and fun. You use your sword to destroy all of the enemies in each room, clearing it out. Some rooms also have block-pushing or button puzzles like you’d see in most dungeon crawlers. Where this game feels different is the decorating. Rooms have different requirements to be sold, which you might only really figure out by listening to the NPCs currently trying to live in the room. They’ll give you hints as to what they want. You then need to design the room, adding specific items they want or getting rid of things that have grown old and tired. It’s quite fun to decorate, and there is a lot of freedom, especially as you unlock new items to place and mess around with. In some rooms, moving items around reveals secret doors into new areas that you might not even have discovered if you hadn’t taken the time to fix the place up.

I got the chance to play through a few rounds of Cozy Dungeons at The Mix, and I found that these two very different concepts, fighting and designing, fit together perfectly. The cutesy graphic style also ties it all together, even if there are puddles of slime that need to be swept up before you can start decorating!
The dual phases mean you do not have to worry about enemies while designing, which is another plus. Cozy Dungeons is currently in development, but in the meantime, you can add it to your Steam Wishlist.
