
GAMINGBOLT.COM

Resident Evil 9 Seemingly Teased in Resident Evil 4 Remake Celebratory Video

Capcom’s Resident Evil 4 remake recently hit a new milestone, selling over ten million copies. To celebrate, the development team shared a funny little video on Twitter in which the villagers from the climactic opening don party hats. Doctor Salvador is busy shredding his chainsaw like an electric guitar while Leon raises his fist in respect.

However, some users noticed that the sign near the tail end looked a little odd. It features various lines of thanks, but perhaps most notable is its shape. Flip it 90 degrees to the left, and there’s a distinct resemblance to the Roman numeral for “9.” This has been taken as a tease for the next Resident Evil, the ninth mainline title after Resident Evil Village, though in what capacity remains to be seen.

Aside from Onimusha: Way of the Sword in 2026, Capcom’s release catalogue looks a little dry. An announcement for Resident Evil 9, followed by a launch late this year or next year (if you believe the rumors), could help. As always, stay tuned for updates.

Resident Evil 4 remake is available for Xbox Series X/S, PS4, PS5, and PC. Check out our reviews for the base game and its expansion, Separate Ways.

To all agents: we have prepared a commemorative video to express our gratitude. Please be sure to enjoy it with the sound on.
Attention all agents, we've prepared a special video to express our gratitude to all of you, which we hope you'll enjoy (with sound ON)!
RE4 Dev Team pic.twitter.com/cKaS198UVY
Capcom Dev 1 (@dev1_official), April 25, 2025

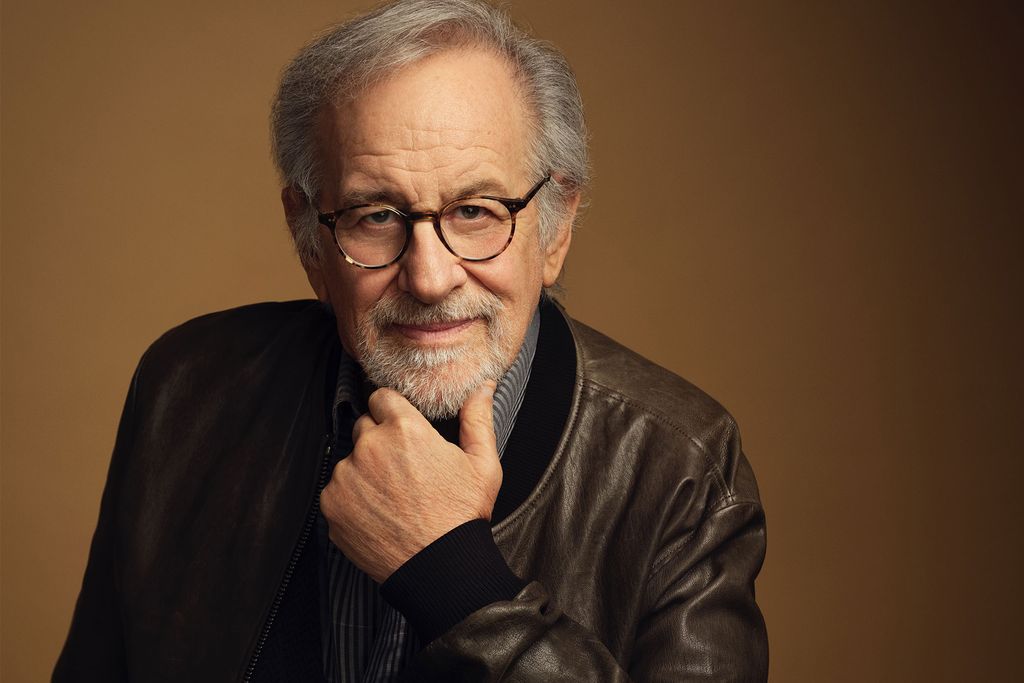

WWW.SMITHSONIANMAG.COM

Meet This Year’s Winners of the Portrait of a Nation Award, Including Steven Spielberg and Temple Grandin

Portraits of the honorees, who have made “transformative contributions to the United States,” will be added to the permanent collection of the Smithsonian’s National Portrait Gallery.

Filmmaker Steven Spielberg was among the 2025 recipients of the Portrait of a Nation Award. Evan Mulling / National Portrait Gallery

The Smithsonian’s National Portrait Gallery has announced that it will honor four prominent Americans with the prestigious 2025 Portrait of a Nation Award. This year’s recipients are Steven Spielberg, the Academy Award-winning filmmaker; Temple Grandin, a scientist and autism advocate; Joy Harjo, the first Native American U.S. poet laureate; and Jamie Dimon, the CEO of JPMorgan Chase.

“Each of the 2025 honorees were selected for their ingenuity and ability to transform their respective fields, from business and science to literature and film,” Rhea L. Combs, the gallery’s director of curatorial affairs, tells Smithsonian magazine. “By presenting their portraits, and the creativity of the artists behind them, we hope to inspire others to envision their personal power and move the needle of history in their own way.”

First presented in 2015, the award recognizes individuals “who have made transformative contributions to the United States and its people across numerous fields of endeavor,” according to a statement from the gallery. Portraits of Spielberg, Grandin, Harjo and Dimon will be commissioned or acquired by the gallery in anticipation of the award gala on November 15, 2025. After going on view from November 14, 2025, to October 25, 2026, the portraits will enter the museum’s permanent collections.

“This year’s honorees represent the remarkable breadth of American achievement,” Kim Sajet, the director of the museum, says in the statement. “The National Portrait Gallery is delighted to recognize these extraordinary individuals whose accomplishments embody our nation’s resilience and whose portraits will inspire visitors from across the country.”

Ahead of their unveiling among the portraits of presidents, artists and other renowned Americans, take a moment to meet this year’s honorees.

Steven Spielberg

Steven Spielberg, Academy Award-winning filmmaker. Michael Blackshire / Los Angeles Times via Getty Images

“I dream for a living,” Spielberg once said. Fantastical films like E.T. (1982) and Jaws (1975) showcase his vivid imagination, as well as his ability to turn dreams into Hollywood blockbusters. But Spielberg also dreams about our past, and he tries to locate lessons for the future in some of history’s darkest moments. For instance, Amistad (1997) tells the story of an 1839 revolt aboard a slave ship, while Schindler’s List …

In Saving Private Ryan, Spielberg’s 1998 World War II epic, the director was determined “to trivialize neither the nature of war nor what it does to participants,” as Smithsonian magazine’s Kenneth Turan wrote in 2005. “Spielberg got so close to the chaos of warfare that the film led veterans who had never spoken to their children about combat to do so.”

Spielberg isn’t the only director to be honored with his likeness in the National Portrait Gallery. Ava DuVernay, the esteemed filmmaker who created and directed the Martin Luther King Jr. biopic Selma (2014), won the award in 2022. She called winning “a jaw-dropping thing to me because it’s a dream I never knew to dream,” Smithsonian magazine’s Meilan Solly reported.

Temple Grandin

Temple Grandin, animal scientist and autism advocate. Kelly Buster / National Portrait Gallery

Two years after Grandin’s birth in 1947, doctors advised her parents to institutionalize their daughter, who had autism and didn’t speak until she was around 4. Her parents rejected the advice and instead placed Grandin in private schools. She flourished intellectually and hasn’t stopped since. After earning a PhD in animal science from the University of Illinois at Urbana-Champaign, Grandin became an authority on humane livestock treatment, designing systems that reduce stress for animals in slaughterhouses.

Today, she’s also known for her autism advocacy. When she rose to prominence in the 1990s, autism was a taboo subject. But Grandin has always spoken openly about her experiences. In 2010, Time magazine named her one of the 100 most influential people in the world. “She thinks that she and other autistic people, though they unquestionably have great problems in some areas, may have extraordinary, and socially valuable, powers in others—provided that they are allowed to be themselves, autistic,” Oliver Sacks wrote in the New Yorker.

Joy Harjo

Joy Harjo, former U.S. poet laureate. Denise Toombs / National Portrait Gallery

“I had no plans to be a poet,” Harjo tells KOSU’s Sarah Liese. Growing up as a citizen of the Muscogee Nation, she knew that many other Native American students were training “for education, for medical fields, to be Native attorneys,” Harjo adds. “Poetry to everyone seemed frivolous, like it wasn’t necessary. But you come down to someone dying, you come down to falling in love, falling out of love, you come down to marriage, you come to those moments of transformation, of being tested as a human being. And poetry is there.”

Harjo wrote her first volume of poetry, a nine-poem chapbook called The Last Song, in 1975. In one poem from the collection, titled “3 a.m.,” Harjo writes about “two Indians” in the Albuquerque airport “at three in the morning / trying to find a way back.” John Scarry’s description of the poem in World Literature Today as “a work filled with ghosts from the Native American past, figures seen operating in an alien culture that is itself a victim of fragmentation” applies to much of Harjo’s career. In 2019, Harjo became the first Native American to be named U.S. poet laureate. In that role, she led “Living Nations, Living Words,” a project that mapped Native poets across North America.

Jamie Dimon

Jamie Dimon, CEO of JPMorgan Chase. Arturo Olmos / National Portrait Gallery

As the head of America’s largest bank, Dimon oversees more than $3 trillion in assets across the world. Born in Queens, New York, in 1956, he began a career in investment banking after graduating from Harvard Business School. “He just outworks everybody,” Duff McDonald, the author of Last Man Standing: The Ascent of Jamie Dimon and JPMorgan Chase, explained in a 2009 interview with the Chase Alumni Association. After becoming CEO of the bank in 2006, Dimon rose to prominence as he steered it through the Great Recession. “The only brand that came out of this thing undamaged was Chase,” McDonald said.

Other business and finance leaders honored with the Portrait of a Nation Award include Amazon founder Jeff Bezos and former PepsiCo CEO Indra Nooyi, who were selected in 2019.

VENTUREBEAT.COM

Subway Surfers and Crossy Road’s crossover brings together mobile classics

Subway Surfers and Crossy Road recently crossed over, bringing together two of the longest-running titles in mobile gaming.

WWW.THEVERGE.COM

GPU prices are out of control again

Every so often, Central Computers, one of the last remaining dedicated Silicon Valley computer stores, lets subscribers know it’s managed to obtain a small shipment of AMD graphics cards. Today, it informed me that I could now purchase a $600 Radeon RX 9070 XT for $850: a $250 markup.

It’s not alone. I just checked every major US retailer and street prices on eBay, and I regret to inform you: the great GPU shortage has returned. Many AMD cards are being marked up $100, $200, $250, even $280. The street price of an Nvidia RTX 5080 is now over $1,500, a full $500 higher than MSRP. And an RTX 5090, the most powerful consumer GPU? You can’t even get the $2,000 card for $3,000 today.

Here, I’ve built tables to show you:

GPU street prices: April 2025

| Item | MSRP | Average eBay street price (Mar-Apr) | Best retail price (April 25th) |
|---|---|---|---|
| AMD Radeon RX 9070 XT | $599 | $957 | $880 |
| AMD Radeon RX 9070 | $549 | $761 | $835 |
| Nvidia RTX 5090 | $1,999 | $3,871 | $3,140 |
| Nvidia RTX 5080 | $999 | $1,533 | $1,390 |
| Nvidia RTX 5070 Ti | $749 | $1,052 | $825 |
| Nvidia RTX 5070 | $549 | $715 | $610 |

“Best retail price” is the actual price I saw a card for on April 25th, roughly the minimum you’d pay.

You shouldn’t just blame tariffs for these price hikes. In early March, we found retailers were already scalping their supposedly entry-level MSRP models of the new AMD graphics cards. Nor is this likely to just be high demand, given how few cards are changing hands on eBay: only around 1,100 new Nvidia GPUs and around 266 new AMD GPUs were listed there over the past 30 days.

But tariffs may have a greater impact soon: on May 2nd, the de minimis exemption will no longer apply, meaning shipments valued at $800 or less can be taxed, and many more shipments will likely get hit as a result. Stores like Shein and Temu have already raised prices in anticipation.

Here’s a deeper dive on the “MSRP” models of the AMD cards, which were all originally listed at $549 or $599:

AMD “MSRP” GPU prices, April 2025

| Item | MSRP | Newegg (April 25th) | Micro Center (April 25th) | eBay street price (past 30 days) | Units on eBay (past 30 days) |
|---|---|---|---|---|---|
| PowerColor Reaper 9070 XT | $599 | $699 | $799 | $755 | 6 |
| Sapphire Pulse 9070 XT | $599 | $879 | $849 | $879 | 39 |
| XFX Swift 9070 XT | $599 | $839 | $829 | $949 | 2 |
| ASRock Steel Legend 9070 XT | $599 | $699 | $699 | $892 | 32 |
| Gigabyte Gaming 9070 XT | $599 | $659 | N/A | $913 | 32 |
| PowerColor Reaper 9070 | $549 | $699 | $679 | $765 | 4 |
| Sapphire Pulse 9070 | $549 | $669 | $789 | $771 | 7 |
| XFX Swift OC 9070 | $549 | $639 | $749 | $746 | 25 |
| ASRock Challenger 9070 | $549 | $549 | $609 | $880 | 1 |
| Gigabyte Gaming OC 9070 | $549 | $669 | $669 | $893 | 36 |

eBay street prices are an average.

I’ve focused this table on Newegg and Micro Center since they carry more models than any other retailer, though I also spotted “MSRP” 9070 XT cards at $800 and $850 at Amazon today, and an $830 card at Best Buy. Otherwise, these are the new sticker prices, not necessarily attainable prices, as most were out of stock.

From December 2020 to July 2022, I periodically tracked the prices of game consoles and GPUs during the covid-19 pandemic, when they were incredibly expensive to obtain. At one point, some GPUs were worth triple their MSRP. I’d love to hear from Verge subscribers in particular: is this a valuable service we should continue in the tariff era? Or do you just want to know when it’s safe to enter the water again?
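The markups discussed above are simple differences from MSRP. A minimal Python sketch that recomputes them from the first table, and totals the eBay units column of the second table, might look like this; the `PRICES` dictionary and `EBAY_UNITS` list only restate the tables, and the `markup` helper is a hypothetical name of our own.

```python
# Restated from the "GPU street prices: April 2025" table, in USD:
# (MSRP, average eBay street price Mar-Apr, best retail price April 25th).
PRICES = {
    "AMD Radeon RX 9070 XT": (599, 957, 880),
    "AMD Radeon RX 9070": (549, 761, 835),
    "Nvidia RTX 5090": (1999, 3871, 3140),
    "Nvidia RTX 5080": (999, 1533, 1390),
    "Nvidia RTX 5070 Ti": (749, 1052, 825),
    "Nvidia RTX 5070": (549, 715, 610),
}

# "Units on eBay (past 30 days)" column of the AMD "MSRP" table, in row order.
EBAY_UNITS = [6, 39, 2, 32, 32, 4, 7, 25, 1, 36]


def markup(msrp: int, price: int) -> tuple[int, float]:
    """Return the markup in dollars and as a percentage of MSRP."""
    diff = price - msrp
    return diff, 100.0 * diff / msrp


for name, (msrp, ebay, retail) in PRICES.items():
    r_diff, r_pct = markup(msrp, retail)
    e_diff, e_pct = markup(msrp, ebay)
    print(f"{name}: retail +${r_diff} ({r_pct:.0f}%), eBay +${e_diff} ({e_pct:.0f}%)")

print("Units listed for the ten AMD MSRP models:", sum(EBAY_UNITS))
```

For the RX 9070 XT, for instance, the best retail price works out to a $281 markup, roughly 47% over MSRP, in line with the “even $280” figure in the text.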

WWW.MARKTECHPOST.COM

Google DeepMind Research Introduces QuestBench: Evaluating LLMs’ Ability to Identify Missing Information in Reasoning Tasks

Large language models (LLMs) have gained significant traction in reasoning tasks, including mathematics, logic, planning, and coding. However, a critical challenge emerges when applying these models to real-world scenarios. While current implementations typically assume that all necessary information is provided upfront in well-specified tasks, reality often presents incomplete or ambiguous situations. Users frequently omit crucial details when formulating math problems, and autonomous systems like robots must function in environments with partial observability. This fundamental mismatch between idealised complete-information settings and the incomplete nature of real-world problems requires LLMs to develop proactive information-gathering capabilities. Recognising information gaps and generating relevant clarifying questions is an essential but underdeveloped capability that LLMs need in order to navigate ambiguous scenarios and provide accurate solutions in practical applications.

Various approaches have attempted to address the challenge of information gathering in ambiguous scenarios. Active learning strategies acquire data sequentially through methods like Bayesian optimisation, reinforcement learning, and robot planning with partially observable states. Research on ambiguity in natural language has explored semantic uncertainties, factual question-answering, task-oriented dialogues, and personalised preferences. Question-asking methods for LLMs include direct prompting techniques, information gain computation, and multi-stage clarification frameworks. However, most existing benchmarks focus on subjective tasks where multiple valid clarifying questions exist, making objective evaluation difficult.
These approaches address ambiguous or knowledge-based tasks rather than underspecified reasoning problems, where an objectively correct question is determinable.

QuestBench presents a robust approach to evaluating LLMs’ ability to identify and acquire missing information in reasoning tasks. The methodology formalises underspecified problems as Constraint Satisfaction Problems (CSPs) in which a target variable cannot be determined without additional information. Unlike semantic ambiguity, where multiple interpretations exist but each yields a solvable answer, underspecification renders problems unsolvable without supplementary data. QuestBench specifically focuses on “1-sufficient CSPs”: problems in which knowing the value of just one unknown variable is enough to solve for the target variable. The benchmark comprises three distinct domains: Logic-Q (logical reasoning tasks), Planning-Q (blocks world planning problems with partially observed initial states), and GSM-Q/GSME-Q (grade-school math problems in verbal and equation forms). The framework categorises problems along four axes of difficulty: number of variables, number of constraints, required search depth, and expected number of guesses needed by brute-force search. This classification offers insights into LLMs’ reasoning strategies and performance limitations.

QuestBench employs a formal Constraint Satisfaction Problem framework to precisely identify and evaluate information gaps in reasoning tasks. A CSP is defined as a tuple ⟨X, D, C, A, y⟩, where X represents the variables, D their domains, C the constraints, A the variable assignments, and y the target variable to solve for. The framework introduces the “Known” predicate, which holds for a variable whose value is determinable either through direct assignment or by derivation from existing constraints. A CSP is classified as underspecified when the target variable y cannot be determined from the available information.
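To make the tuple ⟨X, D, C, A, y⟩ and the “Known” predicate concrete, here is a minimal Python sketch under a loudly simplifying assumption of our own: every constraint is treated as a set of variables in which knowing all but one determines the last (as with t = a + b). The class `CSP` and the functions `known_vars` and `is_underspecified` are hypothetical names, not QuestBench’s code, and the domains D are omitted.

```python
from dataclasses import dataclass


@dataclass
class CSP:
    """Toy stand-in for the tuple <X, D, C, A, y>; the domains D are omitted.

    Assumption: every constraint is a set of variables in which knowing all
    but one variable determines the remaining one (as with t = a + b).
    """
    variables: set[str]                # X
    constraints: list[frozenset[str]]  # C
    assignments: dict[str, float]      # A
    target: str                        # y


def known_vars(csp: CSP, extra: frozenset[str] = frozenset()) -> set[str]:
    """Return every variable satisfying 'Known': assigned directly (or supplied
    in `extra`), or derivable by repeatedly applying the constraints."""
    known = set(csp.assignments) | set(extra)
    changed = True
    while changed:
        changed = False
        for constraint in csp.constraints:
            unknown = constraint - known
            if len(unknown) == 1:  # all other variables are known, so this one is too
                known |= unknown
                changed = True
    return known


def is_underspecified(csp: CSP) -> bool:
    """A CSP is underspecified when the target y is not Known."""
    return csp.target not in known_vars(csp)


# "Alice has 3 apples; together Alice and Bob have t apples. What is t?"
toy = CSP(
    variables={"a", "b", "t"},
    constraints=[frozenset({"a", "b", "t"})],  # t = a + b
    assignments={"a": 3.0},
    target="t",
)
print(is_underspecified(toy))                    # True: b is missing
print("t" in known_vars(toy, frozenset({"b"})))  # True once b is supplied
```

Forward propagation to a fixed point is only one way to decide “Known”; real logic or planning constraints would need a richer solver, which this sketch does not attempt.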
The methodology focuses specifically on “1-sufficient CSPs”, where knowing just one additional variable is sufficient to solve for the target. The benchmark measures model performance along four difficulty axes that correspond to algorithmic complexity: total number of variables (|X|), total number of constraints (|C|), depth of the backward search tree (d), and expected number of random guesses needed (𝔼BF). These metrics provide quantitative measures of problem complexity and help differentiate between semantic ambiguity (multiple valid interpretations) and underspecification (missing information). For each task, models must identify the single sufficient variable that, when known, enables solving for the target variable, which requires both recognizing information gaps and reasoning strategically about constraint relationships.

Experimental evaluation of QuestBench reveals varying capabilities among leading large language models in information-gathering tasks. GPT-4o, o1-preview, Claude 3.5 Sonnet, Gemini 1.5 Pro/Flash, Gemini 2.0 Flash Thinking Experimental, and open-source Gemma models were tested in zero-shot, chain-of-thought, and four-shot settings. Tests were conducted on representative subsets of 288 GSM-Q and 151 GSME-Q tasks between June 2024 and March 2025. Performance analysis along the difficulty axes shows that models struggle most with problems featuring high search depths and complex constraint relationships. Chain-of-thought prompting generally improved performance across all models, suggesting that explicit reasoning pathways help identify information gaps. Among the evaluated models, Gemini 2.0 Flash Thinking Experimental achieved the highest accuracy, particularly on planning tasks, while open-source models showed competitive performance on logical reasoning tasks but struggled with complex math problems requiring deeper search.
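The search for the single sufficient variable, and two of the difficulty axes, can be illustrated with a small brute-force sketch. It rests on assumptions of our own: constraints propagate as in a system of equations (a constraint with one unknown determines it), and 𝔼BF is read as the expected number of uniform random guesses, without replacement, until a sufficient variable is hit, which equals (n + 1) / (k + 1) for n candidates of which k are sufficient. The paper’s exact definitions may differ, the search depth d is not computed here, and all names (`closure`, `sufficient_variables`, `expected_guesses`, the toy CSP) are hypothetical.

```python
from fractions import Fraction


def closure(constraints, known):
    """Fixed point of the 'Known' predicate under the simplifying assumption that
    a constraint with exactly one unknown variable determines that variable."""
    known = set(known)
    changed = True
    while changed:
        changed = False
        for constraint in constraints:
            unknown = set(constraint) - known
            if len(unknown) == 1:
                known |= unknown
                changed = True
    return known


def sufficient_variables(variables, constraints, assigned, target):
    """Return (candidates, sufficient): the not-yet-Known variables one could ask
    about, and those whose value alone would make the target Known."""
    base = closure(constraints, assigned)
    candidates = sorted(set(variables) - base - {target})
    sufficient = [v for v in candidates if target in closure(constraints, base | {v})]
    return candidates, sufficient


def expected_guesses(n_candidates, n_sufficient):
    """Expected number of uniform random guesses, without replacement, needed to
    hit the first sufficient variable: (n + 1) / (k + 1)."""
    return Fraction(n_candidates + 1, n_sufficient + 1)


# Hypothetical CSP: t = a + b, b = 2c, e = d + 1; only a is assigned; target t.
X = {"a", "b", "c", "d", "e", "t"}
C = [{"a", "b", "t"}, {"b", "c"}, {"d", "e"}]
candidates, sufficient = sufficient_variables(X, C, {"a"}, "t")

is_one_sufficient = "t" not in closure(C, {"a"}) and bool(sufficient)
print(len(X), len(C), is_one_sufficient)  # |X| = 6, |C| = 3, and the CSP is 1-sufficient
print(candidates, sufficient)             # asking for b or c both resolve t
print(expected_guesses(len(candidates), len(sufficient)))
```

Here either b or c is a correct clarifying question, while d and e are distractors; with four candidates and two sufficient ones, a random guesser needs 5/3 guesses on average.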
QuestBench provides a unique framework for evaluating LLMs’ ability to identify underspecified information and generate appropriate clarifying questions in reasoning tasks. Current state-of-the-art models demonstrate reasonable performance on simple algebra problems but struggle significantly with complex logic and planning tasks. Performance deteriorates as problem complexity increases along key dimensions like search depth and expected number of brute-force guesses. These findings highlight that while reasoning ability is necessary for effective question-asking, it alone may not be sufficient. Significant opportunities remain for developing LLMs that can better recognize information gaps and request clarification when operating under uncertainty.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.

-

TOWARDSAI.NET
Scaling Intelligence: Overcoming Infrastructure Challenges in Large Language Model Operations
April 26, 2025. Author(s): Rajarshi Tarafdar. Originally published on Towards AI.
The outlook for artificial intelligence (AI) and large language models (LLMs) is bright, with these models powering everything from chatbots to advanced decision-making systems. However, adapting them to enterprise use cases is a major challenge that must be addressed with cutting-edge infrastructure. The industry is changing rapidly, but several challenges still stand in the way of operating LLMs reliably at scale. This article discusses these infrastructure challenges and offers advice to companies on overcoming them to capture the full benefits of LLMs.
Market Growth & Investment in LLM Infrastructure
The global generative AI market is growing faster than ever. Spending on generative AI is estimated to reach $644 billion in 2025, with 80% of it going to hardware such as servers and AI-enabled devices. Demand for automation, efficiency, and insights is driving businesses to integrate LLMs into their workflows and adopt more AI-powered applications. Furthermore, the public cloud services market is projected to exceed $805 billion in 2024 and to grow at a 19.4% compound annual growth rate (CAGR) in the following years. Cloud infrastructure is a key enabler for LLMs because it gives companies the computing power required to run large models without heavy spending on in-house physical equipment. These cloud-based approaches have also spurred specialized infrastructure ecosystems that are projected to exceed $350 billion by 2035.
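The market figures above combine a share and a growth rate. A minimal sketch of that arithmetic: 80% of $644 billion is the implied hardware spend, and compounding $805 billion at 19.4% a year gives an illustrative projection. The article does not state the period over which the 19.4% CAGR applies, so the number of years in the example is an assumption, not a forecast.

```python
def share_of(total_billion: float, fraction: float) -> float:
    """Portion of a total (in billions of dollars) given as a fraction, e.g. 0.80 for 80%."""
    return total_billion * fraction


def compound(start_billion: float, cagr: float, years: int) -> float:
    """Value after `years` of growth at a constant compound annual growth rate."""
    return start_billion * (1 + cagr) ** years


if __name__ == "__main__":
    # Hardware share of the projected 2025 generative AI spend.
    print(f"Hardware spend: ${share_of(644, 0.80):.1f}B")  # Hardware spend: $515.2B
    # Illustrative only: assumes the 19.4% CAGR holds for every year shown.
    for year in range(5):
        print(2024 + year, f"${compound(805, 0.194, year):,.0f}B")
```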
Core Infrastructure Challenges in Scaling LLMs
Despite the immense market opportunity, organizations face several core challenges when scaling LLM infrastructure for enterprise use.
1. Computational Demands
Computational requirements are among the most severe challenges in scaling LLMs. Modern LLMs such as GPT-4 are reported to have about 1.75 trillion parameters (OpenAI has not officially disclosed the figure), with correspondingly massive computational requirements for training and inference. To meet these requirements, companies need specialized hardware such as GPUs (Graphics Processing Units) or TPUs (Tensor Processing Units), and procuring and maintaining such high-performance processors can be prohibitively expensive. The economics of training add to the concern: the power and hardware needed to develop a state-of-the-art model can cost $10 million or more. In addition, LLMs consume large amounts of energy during both training and deployment, an additional environmental and financial burden. Latency is another important constraint. Global enterprises need their LLMs to deliver low-latency responses to end users in many regions, which often requires deploying model instances in multiple regions and adds another layer of complexity to the infrastructure. Managing latency while keeping behavior consistent across regions is a balancing act.
2. Scalability Bottlenecks
Scaling LLM operations brings another challenge: inference costs rise non-linearly as user traffic grows. Serving thousands or millions of users is resource-intensive and calls for scaling solutions that adjust resources dynamically based on demand. Traditional, heavyweight infrastructure struggles to meet the growing demands of enterprise-grade LLM applications, which leads to avoidable delays and rising operational costs.
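Dynamic scaling based on demand is commonly handled by an autoscaler. Kubernetes' Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipping changes when the ratio is within a tolerance (10% by default). The sketch below reimplements that rule in plain Python for illustration. Using in-flight requests per replica as the metric and the min/max bounds are assumptions, and a real HPA also applies stabilization windows and scaling policies that are not shown here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 20,
    tolerance: float = 0.1,
) -> int:
    """Replica count suggested by the HPA-style proportional scaling rule."""
    ratio = current_metric / target_metric
    # Ignore small deviations so the deployment does not flap around the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Hypothetical metric: in-flight requests per replica, with a target of 30.
    print(desired_replicas(4, 90, 30))   # traffic tripled -> 12
    print(desired_replicas(4, 31, 30))   # within tolerance -> 4
    print(desired_replicas(10, 10, 30))  # traffic dropped -> 4
```

Rounding up rather than to the nearest integer errs on the side of spare capacity, which suits latency-sensitive inference traffic.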
3. Hardware Limitations
The hardware constraints on scaling LLMs are significant. In 2024, the worldwide GPU shortage raised GPU prices by 40%, putting LLM-based applications on hold across industries. With limited access to GPUs, organizations often have to defer projects until supply becomes available, which can disrupt timelines. Furthermore, increasingly complex LLMs require more memory, putting additional stress on hardware resources. One technique for addressing this problem is quantization, which reduces the numerical precision of the model's weights and computations. This can cut memory consumption by 30 to 50 percent with little or no loss of accuracy, helping organizations make better use of their existing hardware.
Enterprise Strategies for Scaling LLM Operations
To address the challenges of scaling LLM infrastructure, enterprises have developed several strategies that combine short-term fixes with long-term investments in technology.
1. Distributed Computing
Distributed computing is one way to scale LLMs. Organizations using Kubernetes clusters with cloud auto-scaling can deploy and scale LLMs efficiently across many servers and regions. Serving from multiple geographic locations can reduce latency by 35% for global users, keeping LLM applications responsive under heavy load.
2. Model Optimization
Model optimization is another essential strategy. Techniques such as pruning (removing parameters that contribute little) and knowledge distillation (training a smaller model to reproduce a larger one's behavior) reduce model complexity with minimal impact on performance. These optimizations can cut inference costs by up to 25 percent, making LLMs more feasible to deploy at scale in enterprise applications.
3. Hybrid Architectures
Many organizations are turning to hybrid architectures that combine CPUs and GPUs to balance performance and cost. By using GPUs for larger models and CPUs for smaller models or auxiliary tasks, enterprises can cut hardware expenses by 50% while maintaining the performance their operations require.
Data & Customization in Scaling LLMs
Data quality and model customization are prerequisites for getting the best performance when scaling LLMs. Fine-tuning LLMs on domain-specific business data is essential for the best accuracy: domain-specific fine-tuning can improve task accuracy by up to 60% in industries such as finance, where fraud must be pinpointed accurately and efficiently. In addition, retrieval-augmented generation (RAG) is a strong approach for extending LLMs in enterprise applications. By combining an LLM with an external retrieval mechanism, RAG reduces hallucinations by 45% in systems such as enterprise question-answering (QA) systems. Beyond improving model accuracy, this makes AI systems more reliable. Poor data quality has been a major obstacle for AI projects, affecting at least 70% of them and causing delays because of the real need for high-quality, language-aligned datasets.
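The retrieval half of RAG can be sketched in a few lines: embed the question, rank stored passages by cosine similarity, and place the top matches in the prompt so the model answers from retrieved text rather than from memory alone. In this sketch the three-dimensional vectors are hand-written stand-ins for a real embedding model, the passages and prompt template are hypothetical, and a production system would typically use a vector database with approximate nearest-neighbor search instead of a linear scan.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: Sequence[float], index: Dict[str, Sequence[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Linear scan over (passage, embedding) pairs, best matches first."""
    scored = [(passage, cosine(query, vec)) for passage, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def build_prompt(question: str, passages: List[str]) -> str:
    """Ground the model in retrieved text to reduce hallucinations."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {question}"


if __name__ == "__main__":
    # Toy "embeddings" (hypothetical); a real system would call an embedding model.
    index = {
        "Refunds are processed within 5 business days.": [0.9, 0.1, 0.0],
        "Wire transfers above $10,000 require manual review.": [0.1, 0.9, 0.2],
        "The office is closed on public holidays.": [0.0, 0.1, 0.9],
    }
    hits = retrieve([0.8, 0.2, 0.1], index, k=1)
    print(build_prompt("How long do refunds take?", [p for p, _ in hits]))
```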
For effective scaling of an organization’s LLM infrastructure, it is essential to invest in robust, high-quality data pipelines and data-cleansing operations.
Future Infrastructure Trends in Scaling LLMs
Looking ahead, several infrastructure trends are expected to play a pivotal role in scaling LLMs.
1. Orchestration Hubs for Multi-Model Networks
As LLM use becomes more widespread, enterprises will increasingly rely on orchestration hubs to manage multi-model networks. These hubs will let organizations deploy, monitor, and optimize thousands of specialized LLMs, enabling efficient resource allocation and better performance at scale.
2. Vector Databases and Interoperability Standards
The rise of vector databases and interoperability standards will be a game-changer for scaling LLMs. Vector databases store and retrieve data in a way optimized for machine learning applications, letting LLMs work more efficiently. The markets for these technologies are expected to exceed $20 billion by 2030.
3. Energy-Efficient Chips
One of the most exciting developments in scaling LLMs is the emergence of energy-efficient chips, such as neuromorphic processors. These chips promise to cut the power consumption of LLMs by up to 80%, making large-scale deployment more sustainable without prohibitive energy costs.
Real-World Applications and Trade-Offs
Several organizations that have successfully scaled LLM operations attest to the benefits. One financial institution cut fraud analysis costs by 40% using model parallelism across 32 GPUs, while a healthcare provider achieved a 55% reduction in diagnostic error rates with RAG-enhanced LLMs. However, enterprises now face trade-offs between quick fixes and long-term investment.
Techniques such as quantization and caching provide quick relief on memory and cost, whereas long-term scaling will require investment in modular architectures, energy-efficient chips, and next-generation AI infrastructure.
Conclusion
Scaling LLMs is a resource- and effort-intensive undertaking, but it is necessary to unlock AI applications in the enterprise. Organizations that address the key infrastructure challenges, namely computational requirements, scalability bottlenecks, and hardware limitations, can make LLM operations more efficient and cost-effective. With the right approaches, ranging from distributed computing to domain-specific fine-tuning, enterprises can scale their AI capabilities to meet the growing demand for intelligent applications. As the LLM ecosystem keeps evolving, sustained investment in infrastructure will be crucial to fostering growth and ensuring that AI remains a game-changing tool across industries.
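Quantization, described under Hardware Limitations above, can be illustrated with symmetric per-tensor int8 quantization: scale the weights so the largest magnitude maps to 127, round to integers, and multiply by the scale to recover approximate values. This pure-Python sketch is illustrative only; production frameworks use optimized kernels and often finer-grained (per-channel or per-group) scales. Storing one byte per weight halves the memory of 16-bit weights and cuts that of float32 weights by 75%; actual savings depend on the baseline precision and on which parts of the model stay unquantized.

```python
from array import array
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to the range [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float values."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.42, -1.27, 0.003, 0.88, -0.5]
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    # Rounding to the nearest level bounds the error by half a quantization step.
    max_err = max(abs(w - r) for w, r in zip(weights, restored))
    print(q, f"scale={scale:.4f}", f"max_err={max_err:.5f}")
    # Storage per weight: float32 ('f') vs signed 8-bit ('b') arrays.
    fp32_bytes = array("f", weights).itemsize * len(weights)
    int8_bytes = array("b", q).itemsize * len(q)
    print(f"{fp32_bytes} bytes -> {int8_bytes} bytes")
```

The per-tensor scale is a single extra float, so its overhead is negligible for large weight matrices; the accuracy cost comes from the rounding error, which grows when a few outlier weights inflate the scale.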

-

WWW.IGN.COM
Maxroll’s Clair Obscur: Expedition 33 Branch with Guides, Codex, and Planner
Clair Obscur: Expedition 33 is a new RPG and the debut title from French studio Sandfall Interactive. With a blend of immersive storytelling and challenging gameplay, there’s nothing else quite like it. Maxroll has been hard at work on helpful guides for Expedition 33. There are guides to help you get started, teach you game mechanics, find valuable loot, and hone your builds. Maxroll’s Codex has weapons, skills, Pictos, and Luminas to help you prepare for the challenges you will face on the Continent. If you’re the theorycrafting type, you can use Maxroll’s Expedition 33 Planner to make your own build, then share it in their Community Builds section.
Getting Started
Get started in the world of Expedition 33 with character guides, beginner resources, and Pictos guides from Maxroll. If you're looking for a step-by-step companion guide to keep up as you play, check out IGN's Expedition 33 Walkthrough.
Beginner’s Guide
Maxroll’s comprehensive Beginner’s Guide for Clair Obscur: Expedition 33 introduces the game’s core mechanics, such as exploring the world, battling Nevrons, and each playable character’s unique mechanics, as well as its progression systems: weapons, attributes, Pictos, and Luminas. Also check out IGN's 10 Things Expedition 33 Doesn't Tell You for a shorter guide to some easily missed things you should know.
Combat Guide
Learn more about defeating dangerous Nevrons with IGN's Combat Guide. This beginner's guide offers tips and tricks, including advice on how to use Lune and Maelle.
Weapons, Attributes and Upgrades
Weapons are a core part of building your team in Expedition 33. Each weapon (and character skill) deals a different type of elemental damage, some of which are more effective than others against different Nevrons.
Each character has a variety of weapons whose attribute scaling increases as you level them, and which unlock special bonuses at levels 4, 10, and 20. Read more about Weapons, Attributes and Upgrades.
Pictos and Luminas
Pictos are equippable items that grant stats and a variety of unique effects. Each character can equip 3 Pictos, but you can use the Lumina system to gain even more special effects. If you’re struggling with an encounter, consider changing up your Pictos to gain more defenses, add damage, or buff your team with effects like Shell or Powerful. Learn more about Pictos and the Lumina system, a core progression feature in Expedition 33, and keep track of what you have and where to find them with IGN's Pictos checklist.
Early Game Pictos Guides
The Pictos system provides a lot of room to customize your party and build each character the way you want, but a few Pictos stand out as especially powerful during the early game. Keep an eye out for Dead Energy II and Critical Burn, complete side content for the “lone wolf” style Last Stand Pictos, and use Recovery to turn one character into a super-tank!
Characters
Learn about each playable character in Expedition 33, their unique mechanics, and their skills with Maxroll’s Character Skill Guides.
More Guides
Maxroll also has a few more guides suited to the midgame and endgame. These go into detail on how to unlock areas of the map, defeat certain enemies more easily, and choose the best Pictos.
How to Unlock all of Esquie’s Traversal Abilities
Esquie can break through obstacles, swim, fly, and even dive under the ocean. Learn how to unlock all of Esquie’s abilities as you progress through the game. Though not related to Esquie, did you know you can break those black rocks with blue cracks in them?
Enemy Strengths and Weaknesses
Learn about the strengths and weaknesses of the enemies you encounter across the Continent.
Abuse enemy weaknesses to deal 50% more damage, and avoid using elements they absorb, as those heal the enemy instead of damaging them!
Zone Progression
If you’re feeling lost after completing the game’s story, Maxroll has you covered with a Zone Progression Guide, which recommends when to complete the different optional zones. IGN also has a list of Expedition 33 side quests with their rewards, so you can decide which ones are worth completing.
Best Pictos
Learn the best Pictos to equip in both the early game and the endgame. Maxroll’s guide details Pictos that provide general-purpose power along with those that have more niche uses, enabling new build archetypes.
Codex
Maxroll’s Expedition 33 Codex has information on all of the Weapons, Pictos, Luminas, and Skills available in the game. You can even adjust the level at the top to see how Weapons and Pictos scale.
Planner and Community Builds
Plan out your build using Maxroll’s Expedition 33 Builder, then share it in the Community Builds section! Here are the key features to keep in mind when using Maxroll’s Expedition 33 Planner:
- Select your characters and set up the active party. If you want to create different teams (with unique setups for each character), you can also do so here.
- At the top, select an optional tag like “Story” or “Post-Story.”
- Use this section to navigate between the characters on your team and change their setups.
- Pick your weapon, then adjust its level. As you do, the power and scaling change, but attributes are not currently factored in.
- Select the 6 skills you’re using on the character. NOTE: Gradient Skills are excluded here, but you can learn more about them in the Codex!
- Pick your Pictos; each Pictos can be used only once across your entire team. Select the correct level to display the stats each one adds.
- Add Luminas here; the point count is displayed at the top.
- Allocate attributes, perhaps favoring ones your weapon scales off of.
- Your stats are displayed here, based on Pictos, attributes, and base weapon damage.
- Add some notes: tell people about your skill rotation or where you found some of the cool stuff you’re using.
- Set your build to public to share it with the community.
Tomorrow Comes
That’s it for Maxroll’s new guides for Clair Obscur: Expedition 33. Why not head over to the build planner and start theorycrafting? Written by IGN Staff with contributions from Tenkiei and Snail.


9TO5MAC.COM
Land Rover brought CarPlay to 15-year-old Range Rovers with ‘period‑correct hardware’
While the world awaits next-gen CarPlay, Land Rover has made a retrofit CarPlay solution for Range Rovers from a previous decade. With the exception of wireless support, the CarPlay hardware appears to be pretty basic, and that’s the point. Land Rover recently announced an official CarPlay and Android Auto infotainment system upgrade for third-generation Range Rovers. This covers the 2010, 2011, and 2012 model-year SUVs. OEM CarPlay didn’t appear in new cars until 2015 model-year vehicles. Land Rover emphasizes that its official retrofit solution is intentionally designed to add CarPlay to the infotainment experience without changing anything else:
“Owners will be able to enjoy wireless Apple CarPlay and Android Auto integration with the original touchscreen, effortlessly blending period‑correct hardware with the latest technology features. The wireless upgrade retains all the existing functionalities – including the superb sound quality of the original audio system – while adding features such as music and podcast streaming services and modern connected navigation apps on Apple CarPlay and Android Auto. Joining the already extensive range of Classic Genuine Parts, the new infotainment system uses the original screen, ensuring upgraded models retain a factory‑fresh, OEM appearance – with bespoke fixtures and fittings engineered by Land Rover Classic. Available for Range Rover models manufactured between 2010 and 2012, the experts at Land Rover Classic have designed the new system to be fully compatible with all stereo specifications available on the original vehicle. Classic engineers have also worked hard to ensure clients can seamlessly transfer between the legacy user interface and the new Apple and Android functions.”
While the OEM upgrade is only offered in the UK and Germany, it shows just how much drivers care about CarPlay in 2025.
There are more advanced retrofit CarPlay solutions for Range Rover models going back 20 years, but collectors will value the “period-correct hardware” solution.
Follow Zac Hall: X | Threads | Instagram | Mastodon


FUTURISM.COM
Government Launching Database of Everyday People With Autism
Image by Andrew Harnik/Getty Images
The National Institutes of Health (NIH) is launching a registry tracking Americans with autism as part of a series of new studies by America's anti-vax health czar, Robert F. Kennedy Jr., to discover the "cause" of the condition.
As CBS News reports, NIH director and COVID truther Jay Bhattacharya told councillors that the studies, and the so-called "disease" registry that will be integrated into them, will glean data from a wide range of sources including pharmacies, Veterans Affairs, private insurance companies, and, perhaps most invasively, smart watches and fitness trackers.
As of now, it's unclear how this data is being pulled, whether people will be able to opt out, or whether anyone will be informed when their data is used for these studies. We've reached out to the NIH and the Department of Health and Human Services (HHS) to ask about all of the above.
Launched under the guise of streamlining healthcare information, this announcement comes just a week after Kennedy declared in an alarming and inaccurate speech that HHS will, under his leadership, embark on a quest to discover the "cause" of autism, which he has referred to as both a "preventable disease" and an "epidemic."
The allegedly brainwormed political scion added that children who live with autism will "never pay taxes" or "hold a job," inaccurate statements that raised the hackles of autism advocates, who called that rhetoric eugenicist.
As if all that weren't ominous enough, the newly announced studies will also involve up to 20 groups of outside researchers, which suggests they too will have access to all that sensitive health data, including that of minors. In our questions to NIH and HHS, we also asked how that data will be stored and safeguarded.
Initially, RFK Jr. vowed to have discovered the cause of autism by September, but Bhattacharya was forced to walk back that outrageous timeline after acknowledging that even the fastest government grants process will drag his boss' bunk research out until at least year's end.
"We're going to get hopefully grants out the door by the end of the summer, and people will get to work," the NIH director told reporters earlier this week. "We'll have a major conference, with updates, within the next year."
Bhattacharya also tempered Kennedy's claims that a silver-bullet "cause" of autism will be discovered by his still-unannounced outside "experts," though he appeared to trip over his words a bit while doing so.
"It's hard to guarantee when science will make an advance," he said during the same press conference. "It depends on, you know... nature has its say."
Though Kennedy has had to do his own rhetorical walkback in the face of a massive and deadly measles outbreak, there's a very good chance that the "environmental toxins" referenced in his recent speech are nothing more than vaccines, which, despite long having been shown not to cause autism, remain a pet target for the HHS secretary.
Is the man in charge of America's health compiling an autism database to use people's health records to prove the long-debunked theory that vaccines cause autism? Only time will tell, but there's plenty of reason to believe he will.
