0 Commentarios

0 Acciones

23 Views

Directorio

Directorio

-

Please log in to like, share and comment!

-

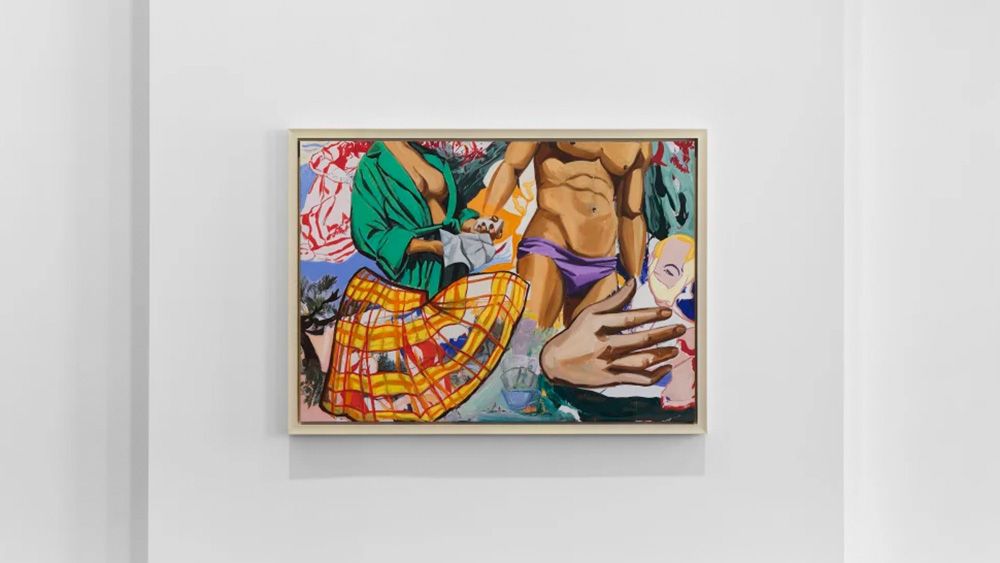

WWW.CREATIVEBLOQ.COM
This artist was huge in the '80s. Now, he's taught AI to steal his style
After decades of appropriating pop culture imagery, David Salle now poaches from his own work.

WWW.WIRED.COM
BoldHue Review: Print Your Own Foundation
This makeup printer custom shade-matches you and creates your foundation on demand.

APPLEINSIDER.COM
Mac Studio M4 Max review one month later: Costly computing power, worth every cent
Switching to an M4 Max Mac Studio was the most expensive Apple purchase I've ever made, but one month in, it is definitely the best.
[Image: M4 Mac Studio sitting silently on my desk]
You're not going to get specifications and timings with tape measures here, because I've never been swayed by a Geekbench score. What I look for, and what I've got with my Mac Studio, is an appreciable difference in my work.
In case you can read a spec sheet better than I can, though: I'm now using an M4 Max Mac Studio with 48GB RAM and a 2TB SSD. It replaced my office M1 Mac mini, which had 16GB RAM and a 2TB SSD.

ARCHINECT.COM
Atlanta's tallest building in 30 years reaches important construction milestone
The 1072 West Peachtree development, Atlanta’s tallest new building in 30 years, has shared a construction update after reaching the 20th-floor milestone. The mixed-use TVS Studio design will rise 60 stories and top out at 749 feet, with completion expected in spring 2026. It will offer 350 new residential units and 224,000 square feet of Class A office space, arriving at a vital time when vacancy rates in the downtown area have hovered around 25% since the end of COVID-19.
[Image courtesy Rockefeller Group]

GAMINGBOLT.COM
Nintendo Switch 2 – Majority of Japanese Customers Reportedly Opting for Region-Locked Version
With pre-orders for the Nintendo Switch 2 now live, quite a few interesting details about the console and its buyers have emerged. As caught by VGC, a recent informal survey by Japanese X user yukino_san_14 indicates that few fans in the country want to pay extra for the region-free model of the console; most of the Switch fan base there seems content with the region-locked, Japanese-only model.
More than 60,000 customers who had entered the pre-order lottery on the My Nintendo Store were surveyed. Of these, 69.7 percent said they had applied for the Mario Kart World bundle of the Japanese-only Switch 2, 27.5 percent had applied for the Japanese-only console on its own, and 2.9 percent had applied for the region-free version. Interestingly, 91.2 percent of those who went for the region-free version were able to successfully place their pre-orders. It is worth noting that, while these numbers are interesting, the survey was informal and covered a relatively small group of customers.
For context, the region-free Switch 2 sold in Japan, which supports a host of different languages, is priced at ¥69,990, compared to ¥49,980 for the Japanese-only console, a premium of roughly ¥20,000. While Nintendo hasn’t commented on the matter yet, the decision to price the region-free version higher was likely made to discourage buyers from illegally exporting consoles bought at the lower Japanese price.
The Switch 2 is proving to be a highly anticipated console in Japan. Earlier this week, Nintendo president Shuntaro Furukawa released a statement on social media revealing that more than 2 million customers had signed up for the lottery-based pre-order system in Japan. In his statement, Furukawa also apologised, noting that Nintendo may not be able to meet such massive demand in time for the console’s June 5 launch.
“On April 2, we announced the details of the Nintendo Switch 2 and began accepting applications for a lottery sale on the My Nintendo Store,” wrote Furukawa (translation via X). “As a result, we received applications from an astonishing 2.2 million people in Japan alone.
“However, this far exceeded our prior expectations and greatly surpasses the number of Nintendo Switch 2 units we can deliver from the My Nintendo Store on June 5. Consequently, it is with great regret that we anticipate a significant number of customers will not be selected in tomorrow’s lottery announcement on April 24.”
He did say, however, that Nintendo plans to ramp up production of the console to meet demand down the road. “Furthermore, we are planning to produce and ship a substantial number of Nintendo Switch 2 units moving forward,” he wrote. “We sincerely apologize for the time it will take to fully meet your expectations and kindly ask for your understanding.”
“70% applied for the Mario Kart set (about 1.5 million people). Only 3% applied for the multilingual version, which is why its win rate is so high.” pic.twitter.com/yYLjwzRQVa (ゆきのさん, @yukino_san_14, April 25, 2025)
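As a rough cross-check of the pricing and survey figures in this post, the Python sketch below computes the exact region-free premium and scales each survey share up to Furukawa's 2.2 million applicants. It rests on one loud assumption: that the informal survey's shares are representative of all applicants, which the article itself cautions against. The outputs are back-of-the-envelope estimates, not reported numbers.

```python
from typing import Dict, Tuple

# Assumption: the informal survey's shares apply to all 2.2 million applicants.
REGION_FREE_PRICE_YEN = 69_990
JAPAN_ONLY_PRICE_YEN = 49_980
TOTAL_APPLICANTS = 2_200_000  # Furukawa's figure for Japan

# Survey shares in percent, as reported by the informal survey.
SURVEY_SHARES_PCT: Dict[str, float] = {
    "Mario Kart World bundle (Japanese-only)": 69.7,
    "Console only (Japanese-only)": 27.5,
    "Region-free console": 2.9,
}


def price_premium(high: int, low: int) -> Tuple[int, float]:
    """Return the absolute premium in yen and the premium as a percent of the lower price."""
    diff = high - low
    return diff, diff / low * 100


def estimated_applicants(total: int, share_pct: float) -> int:
    """Scale a survey share (in percent) up to the whole applicant pool."""
    return round(total * share_pct / 100)


if __name__ == "__main__":
    diff, pct = price_premium(REGION_FREE_PRICE_YEN, JAPAN_ONLY_PRICE_YEN)
    print(f"Region-free premium: ¥{diff:,} ({pct:.1f}% over the Japanese-only model)")
    for option, share in SURVEY_SHARES_PCT.items():
        print(f"{option}: ~{estimated_applicants(TOTAL_APPLICANTS, share):,} applicants")
```

The exact premium is ¥20,010 (about 40% over the Japanese-only price), which the article rounds to ¥20,000, and scaling the 69.7% bundle share gives roughly 1.53 million applicants, in line with the tweet's "about 1.5 million".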

VENTUREBEAT.COM
How many people work in the game industry? | Two different views
We explore two different efforts to determine how many people work in the game industry.

WWW.THEVERGE.COM
Digital photo frame company Nixplay cut its free cloud storage to almost nothing
One of the most frustrating realities about modern technology products is that while many of them can gain exciting new features over the internet, they can lose them just as easily. That happened to owners of Nixplay smart digital photo frames this week, when they were hit with a previously announced update that the company said would “remove premium features and reduce limits,” including dropping cloud photo and video storage to just 500MB. Nixplay has offered free cloud storage for a long time: a 2016 PCMag review mentions an 8-inch frame that came with 10GB of space at no extra charge. Beyond the lower storage limit, the company has also nixed the previously free ability to sync a single Google Photos album.
The company’s announcement said that users whose existing free accounts already exceed the new 500MB limit would see some content “restricted from sharing or viewing on a frame without editing your content or upgrading your subscription.”
People on the Nixplay subreddit aren’t happy, with some posts complaining that the changes affect existing customers rather than only new ones, and others calling it a scam. One user’s begrudging post says they’ll subscribe, but only because they’ve accrued “a few thousand photos in the cloud” and don’t want to teach their partner, who hates computers, how to use a new app.
Nixplay’s paid subscriptions cost either $19.99 a year for 100GB of photo storage (Nixplay Lite) or $29.99 a year for unlimited photo storage (Nixplay Plus). Both tiers also include Google Photos sync, although it’s not clear whether that feature works as it did before, given a recent change Google made that broke how many digital frames sync with its photos service.

WWW.MARKTECHPOST.COM
A Coding Implementation with Arcade: Integrating Gemini Developer API Tools into LangGraph Agents for Autonomous AI Workflows
Arcade turns LangGraph agents from static conversational interfaces into action-driven assistants by providing a suite of ready-made tools, including web scraping and search, as well as specialized APIs for finance, maps, and more. In this tutorial, we initialize ArcadeToolManager, fetch individual tools (such as Web.ScrapeUrl) or entire toolkits, and integrate them with Google’s Gemini Developer API chat model via LangChain’s ChatGoogleGenerativeAI. In a few steps, we install dependencies, securely load the API keys, retrieve and inspect the tools, configure the Gemini model, and spin up a ReAct-style agent with checkpointed memory. Throughout, Arcade’s Python interface keeps the code concise and the focus on building real-world workflows, with no low-level HTTP calls or manual parsing required.
```
!pip install langchain langchain-arcade langchain-google-genai langgraph
```
This installs the core libraries: LangChain’s core functionality, the Arcade integration for fetching and managing external tools, the Google GenAI connector for Gemini access via API key, and LangGraph’s orchestration framework.
```python
from getpass import getpass
import os

# Prompt for each key only if it is not already set in the environment
if "GOOGLE_API_KEY" not in os.environ:
    os.environ["GOOGLE_API_KEY"] = getpass("Gemini API Key: ")

if "ARCADE_API_KEY" not in os.environ:
    os.environ["ARCADE_API_KEY"] = getpass("Arcade API Key: ")
```
This cell prompts for the Gemini and Arcade API keys without displaying them on screen and stores them as environment variables. It only asks for a key that is not already defined, which keeps credentials out of the notebook code.
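The prompt-only-if-missing pattern in the key-loading cell can be factored into a small reusable helper. The sketch below is hypothetical (`ensure_env_var` is not part of Arcade, LangChain, or any other library used in this tutorial) and relies only on the standard library. The prompt function is injectable so the logic can be exercised without an interactive terminal; unlike the original cell, it also treats an empty value as missing and refuses to store an empty key.

```python
import os
from getpass import getpass
from typing import Callable


def ensure_env_var(name: str, prompt: Callable[[str], str] = getpass) -> str:
    """Return environment variable `name`, prompting for it only if unset or empty.

    Hypothetical helper mirroring the notebook cell: an existing value is never
    overwritten, and a prompted value is written back to os.environ so later
    cells (and libraries that read the environment) can see it.
    """
    value = os.environ.get(name)
    if not value:
        value = prompt(f"{name}: ")
        if not value:
            raise ValueError(f"No value provided for {name}")
        os.environ[name] = value
    return value
```

In the notebook, calling `ensure_env_var("GOOGLE_API_KEY")` and `ensure_env_var("ARCADE_API_KEY")` would take the place of the two `if` blocks.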
```python
from langchain_arcade import ArcadeToolManager

manager = ArcadeToolManager(api_key=os.environ["ARCADE_API_KEY"])
# Fetch a single tool plus every tool in the Google toolkit
tools = manager.get_tools(tools=["Web.ScrapeUrl"], toolkits=["Google"])
print("Loaded tools:", [t.name for t in tools])
```
We initialize ArcadeToolManager with the Arcade API key, then fetch both the Web.ScrapeUrl tool and the full Google toolkit. Printing the names of the loaded tools confirms which capabilities are now available to the agent.
```python
from langchain_google_genai import ChatGoogleGenerativeAI
from langgraph.checkpoint.memory import MemorySaver

model = ChatGoogleGenerativeAI(
    model="gemini-1.5-flash",
    temperature=0,
    max_tokens=None,
    timeout=None,
    max_retries=2,
)
bound_model = model.bind_tools(tools)  # expose the Arcade tools to the model
memory = MemorySaver()  # in-memory checkpointer for agent state
```
We initialize the Gemini Developer API chat model (gemini-1.5-flash) with a temperature of 0 for near-deterministic replies, bind the Arcade tools so the model can call them during its reasoning, and create a MemorySaver that checkpoints the agent’s state in memory.
```python
from langgraph.prebuilt import create_react_agent

graph = create_react_agent(
    model=bound_model,
    tools=tools,
    checkpointer=memory,
)
```
We spin up a ReAct-style LangGraph agent that wires together the bound Gemini model, the fetched Arcade tools, and the MemorySaver checkpointer, so the agent can iterate through reasoning, tool invocation, and reflection, with state persisted across calls.
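To make the "reason, call a tool, observe" cycle concrete, here is a framework-free sketch of a ReAct-style loop with a per-thread checkpoint store. It is not how LangGraph implements create_react_agent or MemorySaver: the scripted model, the `scrape_url` tool, `CHECKPOINTS`, and `run_agent` are all hypothetical stand-ins, and a real agent would get its decisions from the Gemini model rather than from a fixed script. Each new message is yielded as it is produced, loosely comparable to streaming the agent's output.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterator, List, Optional, Tuple

Message = Tuple[str, str]  # (role, text)


@dataclass
class Step:
    """One model decision: either a tool call (tool + args) or a final answer."""
    tool: Optional[str] = None
    args: dict = field(default_factory=dict)
    answer: Optional[str] = None


def scrape_url(url: str) -> str:
    """Hypothetical stand-in for a web-scraping tool; returns fake page text."""
    return f"<contents of {url}>"


# Tool registry: the agent may only call tools listed here.
TOOLS: Dict[str, Callable[..., str]] = {"scrape_url": scrape_url}


def scripted_model(messages: List[Message]) -> Step:
    """Stand-in for the LLM: request one scrape, then answer from the latest tool result."""
    tool_results = [text for role, text in messages if role == "tool"]
    if not tool_results:
        return Step(tool="scrape_url", args={"url": "https://example.com"})
    return Step(answer=f"Summary based on {tool_results[-1]}")


# Per-thread message history, loosely analogous to a checkpointer keyed by thread_id.
CHECKPOINTS: Dict[str, List[Message]] = {}


def run_agent(
    thread_id: str,
    user_msg: str,
    model: Callable[[List[Message]], Step] = scripted_model,
    max_steps: int = 5,
) -> Iterator[Message]:
    """Run a ReAct-style loop, yielding each new message as it is appended."""
    messages = CHECKPOINTS.setdefault(thread_id, [])
    messages.append(("user", user_msg))
    yield messages[-1]
    for _ in range(max_steps):
        step = model(messages)  # reason: decide the next action from the full history
        if step.answer is not None:
            messages.append(("assistant", step.answer))
            yield messages[-1]
            return
        messages.append(("assistant", f"calling {step.tool}({step.args})"))
        yield messages[-1]
        result = TOOLS[step.tool](**step.args)  # act: invoke the chosen tool
        messages.append(("tool", result))  # observe: feed the result back in
        yield messages[-1]
    raise RuntimeError("agent did not finish within max_steps")


if __name__ == "__main__":
    for role, text in run_agent("1", "Summarize example.com"):
        print(f"{role}: {text}")
```

Because history is stored per thread, a follow-up message on the same thread sees the earlier tool result and answers without calling the tool again, while a new thread starts from an empty history.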
```python
from langgraph.errors import NodeInterrupt

config = {
    "configurable": {
        "thread_id": "1",
        "user_id": "user@example.com",
    }
}
user_input = {
    "messages": [
        ("user", "List any new and important emails in my inbox.")
    ]
}

try:
    # Stream the full agent state after each step and print the newest message
    for chunk in graph.stream(user_input, config, stream_mode="values"):
        chunk["messages"][-1].pretty_print()
except NodeInterrupt as exc:
    print(f"\n🔒 NodeInterrupt: {exc}")
    print("Please update your tool authorization or adjust your request, then re-run.")
```
We set up the agent’s config (thread ID and user ID) and the user prompt, then stream the ReAct agent’s responses, pretty-printing the latest message of each chunk as it arrives. If a tool call hits an authorization guard, the code catches the NodeInterrupt and asks you to update your credentials or adjust the request before retrying.
In conclusion, by centering the agent architecture on Arcade, we gain instant access to a plug-and-play ecosystem of external capabilities that would otherwise take days to build from scratch. The bind_tools pattern combines Arcade’s toolset with Gemini’s natural-language reasoning, while LangGraph’s ReAct framework orchestrates tool invocation in response to user queries. Whether you’re crawling websites for real-time data, automating routine lookups, or embedding domain-specific APIs, Arcade lets you swap in new tools or toolkits as your use cases evolve.
Here is the Colab Notebook.
Asif Razzaq
Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good.
His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.

TOWARDSAI.NET
Designing Customized and Dynamic Prompts for Large Language Models
April 26, 2025
Author(s): Shenggang Li
Originally published on Towards AI.
A Practical Comparison of Context-Building, Templating, and Orchestration Techniques Across Modern LLM Frameworks
[Photo by Free Nomad on Unsplash]
Imagine you’re at a coffee shop and you ask for a coffee. Simple, right? But if you don’t specify details like milk, sugar, or type of roast, you might not get exactly what you wanted. Similarly, when interacting with large language models (LLMs), how you ask (your prompts) makes a big difference. That’s why creating customized (static) and dynamic prompts is important. Customized prompts are like fixed recipes: consistent, reliable, and straightforward. Dynamic prompts, on the other hand, adapt to the context, much like a skilled barista adjusting your order based on your mood or the weather.
Let’s say you’re building an AI-powered customer support chatbot. If you use only static prompts, the bot might give generic responses, leaving users frustrated. For example, asking “How can I help you today?” is static and might be too vague. A dynamic prompt, by contrast, might incorporate the user’s recent interactions, asking something like, “I see you were checking your order status. Would you like help tracking it further?” This personalized approach can dramatically improve user satisfaction.
I’ll dive into practical comparisons of these prompting methods, exploring context-building strategies, templating frameworks, and orchestration tools. I’ll examine real-world…
Read the full blog for free on Medium.
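The contrast between the two prompt styles in the chatbot example can be sketched in a few lines of Python. This is an illustration under stated assumptions, not code from the article: the `UserContext` record, the `ACTIVITY_SLOTS` mapping, and `build_prompt` are hypothetical, and the dynamic prompt is assembled with the standard library's `string.Template` rather than any particular LLM framework's templating.

```python
from dataclasses import dataclass, field
from string import Template
from typing import Dict, List

# Static (customized) prompt: the same text for every user.
STATIC_PROMPT = "How can I help you today?"

# Dynamic prompt: a template whose slots are filled from the user's recent activity.
DYNAMIC_TEMPLATE = Template("I see you were checking $activity. Would you like help $offer?")

# Hypothetical mapping from recorded page views to template slot values.
ACTIVITY_SLOTS: Dict[str, Dict[str, str]] = {
    "order_status": {"activity": "your order status", "offer": "tracking it further"},
    "returns": {"activity": "our returns policy", "offer": "starting a return"},
}


@dataclass
class UserContext:
    """Hypothetical record of pages the user visited recently (most recent last)."""
    recent_pages: List[str] = field(default_factory=list)


def build_prompt(ctx: UserContext) -> str:
    """Return a context-aware prompt for the latest recognized activity, else the static one."""
    for page in reversed(ctx.recent_pages):
        slots = ACTIVITY_SLOTS.get(page)
        if slots:
            return DYNAMIC_TEMPLATE.substitute(slots)
    return STATIC_PROMPT
```

The design choice worth noting is the fallback: when no usable context exists, the dynamic builder degrades to the static prompt, which is one common way to combine the consistency of fixed prompts with the personalization of dynamic ones.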