UXDESIGN.CC

Society drives how we build products, create brands, and design experiences

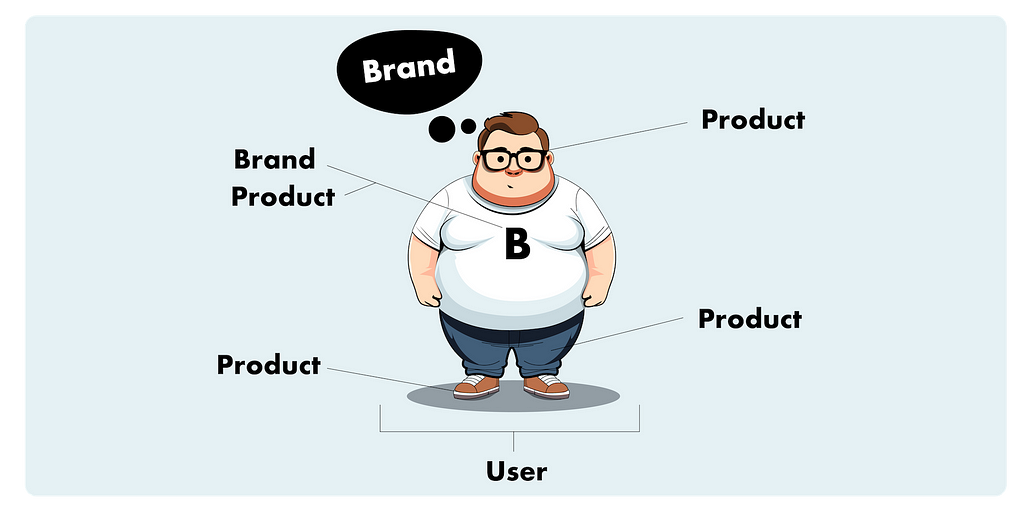

If a brand is more important than the product, then is the experience more important than the brand? If the products are similar, what makes them unique?

What does the user or customer find important? The brand, the product, or the experience? Image by the author.

The never-ending debate on whether a brand is more important than the product often boils down to what drives product success: emotional connection or functional quality. However, most of the time, this is not an either-or question, as businesses ideally need both to work in harmony. User experience and service design influence the overall product experience, impacting the product's success and the company's image.

An excellent user experience might not be able to save a poor product, but can a stellar brand keep a poor product afloat? Conversely, can a negative brand image make a great product obsolete?

Place, people, and the past

X (Twitter) has been making headlines recently and is witnessing a mass user exodus. In the US alone, 115,000 users have left the platform since the beginning of November 2024, discussing alternatives such as Bluesky, Threads, and Mastodon. Between November 5 and 15, usage of the Bluesky app grew by 519% among US-based users. Many users cite bots, AI training models, advertisements, negative interactions, and politics as their main reasons for fleeing or for posting less on X. Is this due to the product or the brand? Is X likely to face a fate similar to MySpace or Google+?

Reed's Law states that the value of a social network depends on how well it facilitates the formation of groups, not on how well it facilitates connections between individuals. If users can define their own interactions and form groups around shared interests, the network can provide a personalized experience.
If this is possible on all platforms, what makes a person choose one?

Logos of Threads, X and Mastodon.

X, Threads, and Mastodon generally offer a similar text-based social media product, but do they offer something new to the user? If not tied to innovation or market demand, will the branding, business decisions, or product experience drive or reduce engagement on these platforms?

Emotional grounding means establishing a connection between a product and a place, people, or the past. This connection will likely be even more important in the future, not only for food-related products but also for digital products and services.

Results after searching for NBA-related content on X and Threads. The two products work, look, and feel rather similar. Image by the author.

The faces of the products we use

Many digital products today have a person attached to the brand, such as Elon Musk with X, Steve Jobs and Tim Cook with Apple, Bill Gates with Microsoft, and Mark Zuckerberg with Facebook. These leaders shape the brand and product image, which can affect adoption of and engagement with the product. Figma, Adobe, LinkedIn, and Vimeo do not have a celebrity-like person attached to them. Do the celebrity CEOs of X, Apple, Microsoft, and Facebook fall under a celebrity endorsement strategy or CEO branding?

"There is this inherent tension in advertising between these ideas that celebrities are there to bolster and endorse the product, yet they are also known to take attention away from the product." - Elizabeth (Zab) Johnson, Wharton Neuroscience Initiative

CEO branding and celebrity endorsement can help a company connect with consumers and build or destroy customer confidence. Confidence comes from the ability to persuade, which is rooted in an evolved, biological tendency to follow the lead of a high-status individual. Besides marketing and advertising, how can customers feel confident about a good product from a startup or a company without a celebrity CEO?
Does the product or the experience rule over the brand after all?

Image by Felix Mittermeier on Pexels.

Bill Gates predicted that "Content is King" in 1996. Is it still true in 2024 and beyond?

In 1996, Bill Gates wrote "Content is King" in an essay about how the distribution of information and entertainment (content) over the Internet would become a business opportunity for companies of all sizes.

The way we engage and what we consider content have changed over the years. It is common today to follow companies and strangers on the Internet for content related or unrelated to the product or person. This was unheard of two decades ago. The means of consuming content and the type of content shared also vary by the products we use.

Users browse Reddit to discover content not because of its branding, but because the product's usability and content interaction make it a unique and enjoyable experience. Comments on why users enjoy Reddit over other social media platforms highlight the desire to stay anonymous, discover interesting content, and engage with people who share similar interests and are not necessarily within their immediate social circle. This raises an interesting question: is content the king or the queen? The queen is the most powerful piece in chess, yet alone it might have a hard time surviving against the opponent's pieces.

Reddit r/nba. Screenshot by the author.

"The people come to your site because of the content you provide." This is still a solid statement, but what if the content can be retrieved from multiple sources, such as feeds or TL;DR apps? Content exclusivity was more common 20 years ago. In today's digital age, multiple products and channels can provide the same or similar content, so how will the consumer make a decision?
The product experience might triumph over the brand after all.

Fear of missing out

The dot-com bubble of the late 1990s saw the rise of many online products and services, driven by the increased global adoption of the Internet and personal computing. Companies without viable business plans jumped aboard with endless IPOs, and the bubble burst in 2000.

In 2012, my washing machine had "AI" written on the surface, but what was the AI? Sensors measuring the water level are not necessarily AI, right? Perhaps it was more about marketing and branding.

Is the current wave of AI-enhanced products repeating the characteristics of the dot-com craze? We are witnessing a plethora of new AI-powered products, but are we reaching a point where the market is becoming saturated with AI-based solutions? Is it AI for FOMO's (Fear Of Missing Out) sake, AI for branding's sake, or AI for improving the product and experience?

Image prompted by the author via Adobe Firefly Image 3.

Building products and solving problems

Citing the infamous quote attributed to Henry Ford: "If I would have asked my customers what they wanted, they would have said faster horses." Do customers know what they want? When analyzing insights, does the UX researcher know how to read between the lines to make assumptions about what the user may need?

Henry Ford's quote provides quite a good reflection on how solving user problems can result in innovation.

Why did Segway's electric scooter fail while lightweight electric scooters thrive? Both products rethought short-distance transportation, but the Segway was about technological innovation and the latter about value innovation. The Segway was loaded with technology and had a hefty price tag of $5,000, all the while being big and heavy, generally too big to use on sidewalks or bring up to offices.
In contrast, reducing the amount of technology and rethinking how users would use such a product took 95% off the price and made the product lighter and more compact, and so easier to charge and move around.

Technological innovation doesn't necessarily correlate with a user-friendly product. If the Segway had originally been produced by Mercedes-Benz, would the brand presence have affected its adoption? In this case, the product experience rules over the brand.

"You've got to start with the customer experience and work backwards for the technology. You can't start with the technology and try to figure out where you're going to try to sell it." - Steve Jobs, WWDC 1997

Is AI helping to solve a problem, or is it being used for technological innovation to create something new? Both, yet if a user can't use it to their advantage, is it part of a brand experience, technological innovation, or a product experience?

Working together in harmony. Image prompted by the author via Adobe Firefly Image 3.

Video killed the radio star

Will AI take over designers' or developers' jobs? Maybe not take over, but change and enhance. The introduction of development frameworks and content-management systems, for example, didn't take over jobs, but allowed more people to access the technology and build products faster. Technological innovation also creates new jobs and opportunities. Besides prompt engineering, AI will likely generate other new jobs as well.

AI can draft a complete design system in seconds. Similarly, WordPress or Webflow can create a site in a few steps. However, the use cases and demands still vary greatly. I've often heard the following: "We don't want the site to look like WordPress, even if it is built on WordPress." This translates into "We don't want the site to look like all the other sites." Are we experiencing a similar situation with the current UI design landscape?

In recent years, many digital products look, work, and feel the same.
After all, familiarity makes it easy for the user to understand the product, and reinventing the wheel can be costly. This is also where branding has a chance to come into play. Branding is more than just colors and art direction.

The results for searching NBA on Threads, LinkedIn, YouTube, Line, and Spotify. Can you spot the similarities? Image by the author.

I used to have a Honda Civic Hatchback '88 and then a Volkswagen Polo '96. While they differ a bit in look and feel, the usability and iconography were pretty much the same. You operate different cars by pushing the pedals and moving the stick shift around. Familiar icons make it easy to switch between car models and brands. Is it the same with different digital products?

In comparison, I had to learn how to operate a lift truck when working in a factory. While the appearance was similar, there was no familiar steering wheel, only a handle you had to turn with one hand. It took a little time to get used to the change, but afterward, it was easy. When objectives are different, the experience changes as well.

"Products are made in the factory, but brands are created in the mind." - Walter Landor

Consumer-facing apps may look, feel, and work the same, but a hospital medical application can be a different experience. The goals and context are different, but in most cases, we are most familiar with the apps that we have easy access to. When a hospital decides on an application or system to use across its facility, will the brand, the product, or the user experience drive the decision?

Website visitor statistics are now mostly private. Image by the author.

The visual design we apply reflects our era

Throughout history, art and design have gone through different movements that shaped the look and feel of their time.
The Art Nouveau (Jugendstil) movement of the 1890s aimed to break away from historical styles toward a modern approach to the total work of art. It was driven by harmony and by organic or geometric forms inspired by nature, and it rejected excessive ornament and Victorian-era decorative styles.

"If today's arts love the machine, technology, and organization, if they aspire to precision and reject anything vague and dreamy, this implies an instinctive repudiation of chaos and a longing to find the form appropriate to our times." - Oskar Schlemmer

The Bauhaus movement was initiated around 1919 and is known for the principle that the function of an object should dictate its form, and for reuniting art and industrial design. Similarly, De Stijl, from 1917, focused on simplicity and primary colors. These movements rejected decorative styling, paving the way for more functional crafts.

The idea that form should follow function was once the main way to break away from decorative output, but the practitioners themselves started to revert to decorative styles.

Branding started as a way to mark ownership, then evolved into a way to be recognized in a sea of options. Looks, style, and personality have become more important. Branding is no longer only for companies marketing their products: personal branding has also risen with the Internet. Brands also represent values, which can affect whether a customer engages with a brand or a product.

Jaguar logo 2024. Image by Jaguar Media Centre.

Logos and visual identities are elements of branding, and they have also changed visually throughout history. Jaguar recently revised its logo to better communicate its step into the electric vehicle industry.
This rebrand has prompted mixed feelings online, but logos also change with the visual styles of different eras and industries, as seen in the evolution of the Jaguar logo from 1922 to 2024.

Have it your way

Will AI drive the future of product design by tailoring experiences to meet the needs and wants of the user, including the design and layout of interface elements and content strategy? This could include anything from font sizes to colors to content length and more. A blank canvas or content cards that will be populated by AI?

Are brands and products becoming like sports teams? Are the products we use a statement of which brands we support, or do we use them to solve problems?

Hypothetically, if Mark Zuckerberg were to acquire Figma and become its CEO, would it affect the way you perceive Figma, or whether you use Figma or not?

Change is driven by business needs and changing consumer habits. Can AI assist in understanding the needs of users? The visual style and actions depict the standards and preferences of our time. Will we revert from minimalist design to more decorative design for the sake of being different, or to elevate the product and brand experience?

What is important? The brand, the product, or the experience?

References and further reading

- People are fleeing Elon Musk's X in Droves. What is Happening on Threads and Bluesky
- People are Fleeing Elon Musk's X for Threads, and Bluesky. Welcome to the Era of Social Media Fragmentation
- X Sees Largest User Exodus Since Elon Musk Takeover
- Bluesky Tops 20M Users, Narrowing Gap with Instagram Threads
- MySpace - What Went Wrong: The Site was a Massive Spaghetti-Ball Mess
- Why Facebook Beat MySpace, and Why MySpace's Revised Strategy Will Likely Fail
- Five Reasons Why Google+ Died
- The Marketing Psychology Behind Celebrity Endorsements
- CEO Branding Strategies for 2023 and Beyond
- Why We Buy Products Connected to Place, People, and Past
- Content Is King - Original Bill Gates Essay & How It Applies Today
- Why Do You Prefer Reddit Over Other Social Medias?
- The Late 1990s Dot-Com Bubble Implodes in 2000
- The Psychology Behind FOMO (Fear of Missing Out)
- Segway Case Study: Avoiding the Fate of Electric Scooter
- Prompt Engineering
- Art Nouveau Movement
- Bauhaus Movement
- The Bauhaus, 1919-1933
- De Stijl
- Oskar Schlemmer
- History of Branding
- Fearless. Exuberant. Compelling. This is Jaguar, Reimagined
- Evolution of the Jaguar Logo

"Society drives how we build products, create brands, and design experiences" was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.