GAMERANT.COM
What Happens to Leftover Tokens After Sticker Drop Ends in Monopoly GO?

Monopoly GO has reintroduced the Sticker Drop minigame in January 2025 as a way to help players win sticker packs of varying rarities and even a Wild Sticker to complete their Jingle Joy album. Similar to other Peg-E minigames, you first need to collect Peg-E Tokens to play Sticker Drop. Since there are multiple ways to grab Peg-E Tokens, you'll probably have a bunch of them left even after claiming the Wild Sticker in Monopoly GO. If you're wondering what happens to your extra Peg-E Tokens after the Sticker Drop minigame ends, read on.

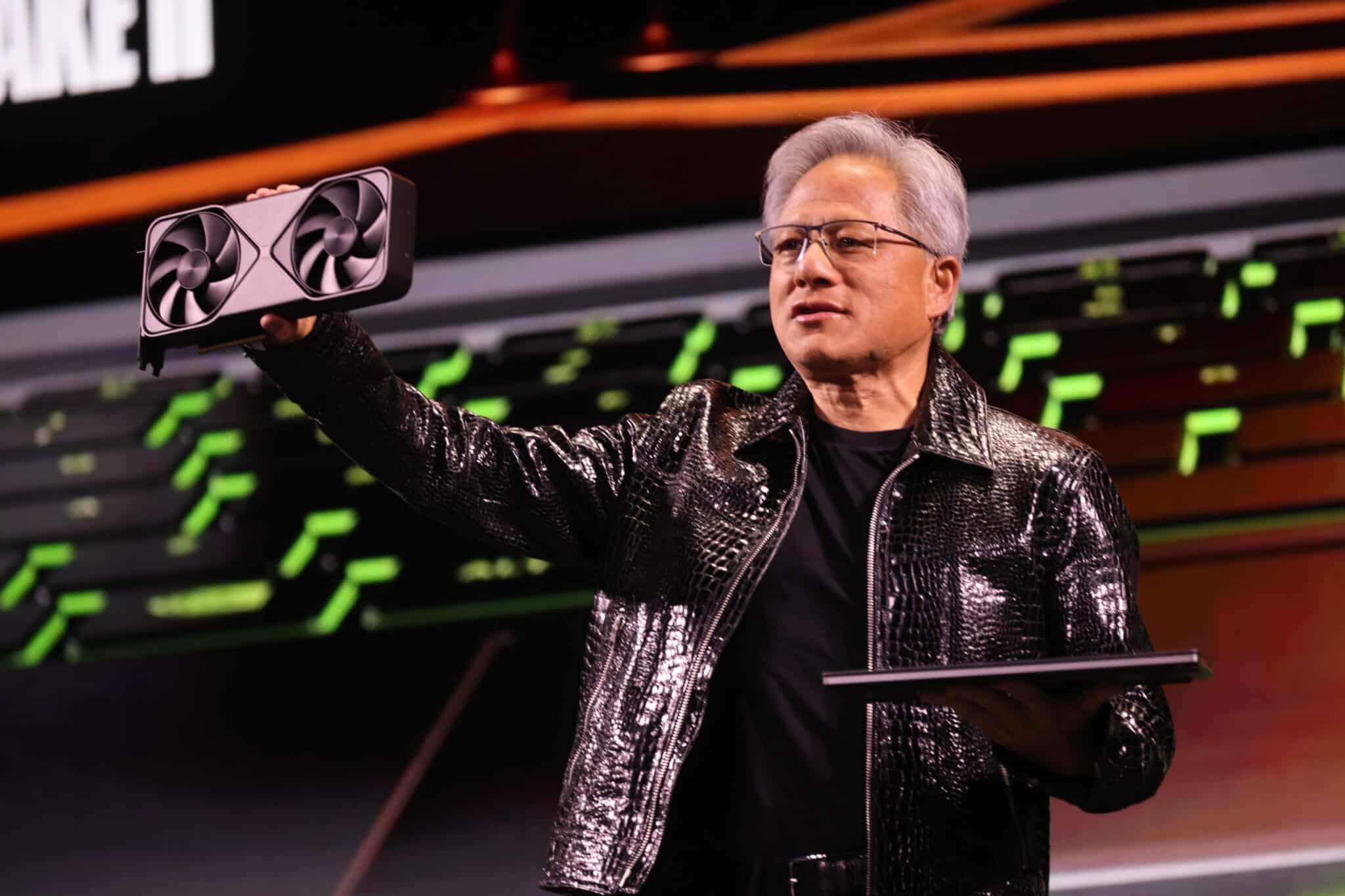

BLOGS.NVIDIA.COM
CES 2025: AI Advancing at Incredible Pace, NVIDIA CEO Says

NVIDIA founder and CEO Jensen Huang kicked off CES 2025 with a 90-minute keynote that included new products to advance gaming, autonomous vehicles, robotics, and agentic AI.

"AI has been advancing at an incredible pace," he said before an audience of more than 6,000 packed into the Michelob Ultra Arena in Las Vegas.

"It started with perception AI: understanding images, words, and sounds. Then generative AI: creating text, images and sound," Huang said. "Now, we're entering the era of physical AI, AI that can proceed, reason, plan and act."

NVIDIA GPUs and platforms are at the heart of this transformation, Huang explained, enabling breakthroughs across industries, including gaming, robotics and autonomous vehicles (AVs).

Huang's keynote showcased how NVIDIA's latest innovations are enabling this new era of AI, with several groundbreaking announcements, including:

- The just-announced NVIDIA Cosmos platform advances physical AI with new models and video data processing pipelines for robots, autonomous vehicles and vision AI.
- New NVIDIA Blackwell-based GeForce RTX 50 Series GPUs offer stunning visual realism and unprecedented performance boosts.
- AI foundation models introduced at CES for RTX PCs feature NVIDIA NIM microservices and AI Blueprints for crafting digital humans, podcasts, images and videos.
- The new NVIDIA Project DIGITS brings the power of NVIDIA Grace Blackwell to developer desktops in a compact package that can practically fit in a pocket.
- NVIDIA is partnering with Toyota for safe next-gen vehicle development using the NVIDIA DRIVE AGX in-vehicle computer running NVIDIA DriveOS.

Huang started off his talk by reflecting on NVIDIA's three-decade journey. In 1999, NVIDIA invented the programmable GPU. Since then, modern AI has fundamentally changed how computing works, he said. "Every single layer of the technology stack has been transformed, an incredible transformation, in just 12 years."

Revolutionizing Graphics With GeForce RTX 50 Series

"GeForce enabled AI to reach the masses, and now AI is coming home to GeForce," Huang said.

With that, he introduced the NVIDIA GeForce RTX 5090 GPU, the most powerful GeForce RTX GPU so far, with 92 billion transistors and delivering 3,352 trillion AI operations per second (TOPS).

"Here it is: our brand-new GeForce RTX 50 series, Blackwell architecture," Huang said, holding the blacked-out GPU aloft and noting how it's able to harness advanced AI to enable breakthrough graphics. "The GPU is just a beast."

"Even the mechanical design is a miracle," Huang said, noting that the graphics card has two cooling fans.

More variations in the GPU series are coming. The GeForce RTX 5090 and GeForce RTX 5080 desktop GPUs are scheduled to be available Jan. 30. The GeForce RTX 5070 Ti and the GeForce RTX 5070 desktops are slated to be available starting in February. Laptop GPUs are expected in March.

DLSS 4 introduces Multi Frame Generation, working in unison with the complete suite of DLSS technologies to boost performance by up to 8x. NVIDIA also unveiled NVIDIA Reflex 2, which can reduce PC latency by up to 75%.

"The latest generation of DLSS can generate three additional frames for every frame we calculate," Huang explained. "As a result, we're able to render at incredibly high performance, because AI does a lot less computation."

RTX Neural Shaders use small neural networks to improve textures, materials and lighting in real-time gameplay. RTX Neural Faces and RTX Hair advance real-time face and hair rendering, using generative AI to animate the most realistic digital characters ever. RTX Mega Geometry increases the number of ray-traced triangles by up to 100x, providing more detail.

Advancing Physical AI With Cosmos

In addition to advancements in graphics, Huang introduced the NVIDIA Cosmos world foundation model platform, describing it as a game-changer for robotics and industrial AI.

"The next frontier of AI is physical AI," Huang explained. He likened this moment to the transformative impact of large language models on generative AI.

"The ChatGPT moment for general robotics is just around the corner," he explained.

Like large language models, world foundation models are fundamental to advancing robot and AV development, yet not all developers have the expertise and resources to train their own, Huang said.

Cosmos integrates generative models, tokenizers, and a video processing pipeline to power physical AI systems like AVs and robots.

Cosmos aims to bring the power of foresight and multiverse simulation to AI models, enabling them to simulate every possible future and select optimal actions.

Cosmos models ingest text, image or video prompts and generate virtual world states as videos, Huang explained. Cosmos generations prioritize the unique requirements of AV and robotics use cases like real-world environments, lighting and object permanence.

Leading robotics and automotive companies, including 1X, Agile Robots, Agility, Figure AI, Foretellix, Fourier, Galbot, Hillbot, IntBot, Neura Robotics, Skild AI, Virtual Incision, Waabi and XPENG, along with ridesharing giant Uber, are among the first to adopt Cosmos.

In addition, Hyundai Motor Group is adopting NVIDIA AI and Omniverse to create safer, smarter vehicles, supercharge manufacturing and deploy cutting-edge robotics.

Cosmos is open license and available on GitHub.

Empowering Developers With AI Foundation Models

Beyond robotics and autonomous vehicles, NVIDIA is empowering developers and creators with AI foundation models.

Huang introduced AI foundation models for RTX PCs that supercharge digital humans, content creation, productivity and development.

"These AI models run in every single cloud because NVIDIA GPUs are now available in every single cloud," Huang said. "It's available in every single OEM, so you could literally take these models, integrate them into your software packages, create AI agents and deploy them wherever the customers want to run the software."

These models, offered as NVIDIA NIM microservices, are accelerated by the new GeForce RTX 50 Series GPUs.

The GPUs have what it takes to run these swiftly, adding support for FP4 computing, boosting AI inference by up to 2x and enabling generative AI models to run locally in a smaller memory footprint compared with previous-generation hardware.

Huang explained the potential of new tools for creators: "We're creating a whole bunch of blueprints that our ecosystem could take advantage of. All of this is completely open source, so you could take it and modify the blueprints."

Top PC manufacturers and system builders are launching NIM-ready RTX AI PCs with GeForce RTX 50 Series GPUs. "AI PCs are coming to a home near you," Huang said.

While these tools bring AI capabilities to personal computing, NVIDIA is also advancing AI-driven solutions in the automotive industry, where safety and intelligence are paramount.

Innovations in Autonomous Vehicles

Huang announced the NVIDIA DRIVE Hyperion AV platform, built on the new NVIDIA AGX Thor system-on-a-chip (SoC), designed for generative AI models and delivering advanced functional safety and autonomous driving capabilities.

"The autonomous vehicle revolution is here," Huang said. "Building autonomous vehicles, like all robots, requires three computers: NVIDIA DGX to train AI models, Omniverse to test drive and generate synthetic data, and DRIVE AGX, a supercomputer in the car."

DRIVE Hyperion, the first end-to-end AV platform, integrates advanced SoCs, sensors, and safety systems for next-gen vehicles, a sensor suite and an active safety and level 2 driving stack, with adoption by automotive safety pioneers such as Mercedes-Benz, JLR and Volvo Cars.

Huang highlighted the critical role of synthetic data in advancing autonomous vehicles. Real-world data is limited, so synthetic data is essential for training the autonomous vehicle data factory, he explained.

Powered by NVIDIA Omniverse AI models and Cosmos, this approach generates synthetic driving scenarios that enhance training data by orders of magnitude.

Using Omniverse and Cosmos, NVIDIA's AI data factory can scale hundreds of drives into billions of effective miles, Huang said, dramatically increasing the datasets needed for safe and advanced autonomous driving.

"We are going to have mountains of training data for autonomous vehicles," he added.

Toyota, the world's largest automaker, will build its next-generation vehicles on the NVIDIA DRIVE AGX Orin, running the safety-certified NVIDIA DriveOS operating system, Huang said.

"Just as computer graphics was revolutionized at such an incredible pace, you're going to see the pace of AV development increasing tremendously over the next several years," Huang said. These vehicles will offer functionally safe, advanced driving assistance capabilities.

Agentic AI and Digital Manufacturing

NVIDIA and its partners have launched AI Blueprints for agentic AI, including PDF-to-podcast for efficient research and video search and summarization for analyzing large quantities of video and images, enabling developers to build, test and run AI agents anywhere.

AI Blueprints empower developers to deploy custom agents for automating enterprise workflows. This new category of partner blueprints integrates NVIDIA AI Enterprise software, including NVIDIA NIM microservices and NVIDIA NeMo, with platforms from leading providers like CrewAI, Daily, LangChain, LlamaIndex and Weights & Biases.

Additionally, Huang announced new Llama Nemotron.

Developers can use NVIDIA NIM microservices to build AI agents for tasks like customer support, fraud detection, and supply chain optimization.

Available as NVIDIA NIM microservices, the models can supercharge AI agents on any accelerated system.

NVIDIA NIM microservices streamline video content management, boosting efficiency and audience engagement in the media industry.

Moving beyond digital applications, NVIDIA's innovations are paving the way for AI to revolutionize the physical world with robotics.

"All of the enabling technologies that I've been talking about are going to make it possible for us in the next several years to see very rapid breakthroughs, surprising breakthroughs, in general robotics."

In manufacturing, the NVIDIA Isaac GR00T Blueprint for synthetic motion generation will help developers generate exponentially large synthetic motion data to train their humanoids using imitation learning.

Huang emphasized the importance of training robots efficiently, using NVIDIA's Omniverse to generate millions of synthetic motions for humanoid training.

The Mega blueprint enables large-scale simulation of robot fleets, adopted by leaders like Accenture and KION for warehouse automation.

These AI tools set the stage for NVIDIA's latest innovation: a personal AI supercomputer called Project DIGITS.

NVIDIA Unveils Project DIGITS

Putting NVIDIA Grace Blackwell on every desk and at every AI developer's fingertips, Huang unveiled NVIDIA Project DIGITS.

"I have one more thing that I want to show you," Huang said. "None of this would be possible if not for this incredible project that we started about a decade ago. Inside the company, it was called Project DIGITS: deep learning GPU intelligence training system."

Huang highlighted the legacy of NVIDIA's AI supercomputing journey, telling the story of how in 2016 he delivered the first NVIDIA DGX system to OpenAI. "And obviously, it revolutionized artificial intelligence computing."

The new Project DIGITS takes this mission further. "Every software engineer, every engineer, every creative artist, everybody who uses computers today as a tool, will need an AI supercomputer," Huang said.

Huang revealed that Project DIGITS, powered by the GB10 Grace Blackwell Superchip, represents NVIDIA's smallest yet most powerful AI supercomputer. "This is NVIDIA's latest AI supercomputer," Huang said, showcasing the device. "It runs the entire NVIDIA AI stack; all of NVIDIA software runs on this. DGX Cloud runs on this."

The compact yet powerful Project DIGITS is expected to be available in May.

A Year of Breakthroughs

"It's been an incredible year," Huang said as he wrapped up the keynote. Huang highlighted NVIDIA's major achievements: Blackwell systems, physical AI foundation models, and breakthroughs in agentic AI and robotics.

"I want to thank all of you for your partnership," Huang said.

See notice regarding software product information.

BLOGS.NVIDIA.COMNew GeForce RTX 50 Series GPUs Double Creative Performance in 3D, Video and Generative AIGeForce RTX 50 Series Desktop and Laptop GPUs, unveiled today at the CES trade show, are poised to power the next era of generative and agentic AI content creation offering new tools and capabilities for video, livestreaming, 3D and more.Built on the NVIDIA Blackwell architecture, GeForce RTX 50 Series GPUs can run creative generative AI models up to 2x faster in a smaller memory footprint, compared with the previous generation. They feature ninth-generation NVIDIA encoders for advanced video editing and livestreaming, and come with NVIDIA DLSS 4 and up to 32GB of VRAM to tackle massive 3D projects.These GPUs come with various software updates, including two new AI-powered NVIDIA Broadcast effects, updates to RTX Video and RTX Remix, and NVIDIA NIM microservices prepackaged and optimized models built to jumpstart AI content creation workflows on RTX AI PCs.Built for the Generative AI EraGenerative AI can create sensational results for creators, but with models growing in both complexity and scale, generative AI can be difficult to run even on the latest hardware.The GeForce RTX 50 Series adds FP4 support to help address this issue. FP4 is a lower quantization method, similar to file compression, that decreases model sizes. Compared with FP16 the default method that most models feature FP4 uses less than half of the memory and 50 Series GPUs provide over 2x performance compared to the previous generation. This can be done with virtually no loss in quality with advanced quantization methods offered by NVIDIA TensorRT Model Optimizer.For example, Black Forest Labs FLUX.1 [dev] model at FP16 requires over 23GB of VRAM, meaning it can only be supported by the GeForce RTX 4090 and professional GPUs. 
With FP4, FLUX.1 [dev] requires less than 10GB, so it can run locally on more GeForce RTX GPUs.With a GeForce RTX 4090 with FP16, the FLUX.1 [dev] model can generate images in 15 seconds with 30 steps. With a GeForce RTX 5090 with FP4, images can be generated in just over five seconds.A new NVIDIA AI Blueprint for 3D-guided generative AI based on FLUX.1 [dev], which will be offered as an NVIDIA NIM microservice, offers artists greater control over text-based image generation. With this blueprint, creators can use simple 3D objects created by hand or generated with AI and lay them out in a 3D renderer like Blender to guide AI image generation.A prepackaged workflow powered by the FLUX NIM microservice and ComfyUI can then generate high-quality images that match the 3D scenes composition.The NVIDIA Blueprint for 3D-guided generative AI is expected to be available through GitHub using a one-click installer in February.Stability AI announced that its Stable Point Aware 3D, or SPAR3D, model will be available this month on RTX AI PCs. Thanks to RTX acceleration, the new model from Stability AI will help transform 3D design, delivering exceptional control over 3D content creation by enabling real-time editing and the ability to generate an object in less than a second from a single image.Professional-Grade Video for AllGeForce RTX 50 Series GPUs deliver a generational leap in NVIDIA encoders and decoders with support for the 4:2:2 pro-grade color format, multiview-HEVC (MV-HEVC) for 3D and virtual reality (VR) video, and the new AV1 Ultra High Quality mode.Most consumer cameras are confined to 4:2:0 color compression, which reduces the amount of color information. 4:2:0 is typically sufficient for video playback on browsers, but it cant provide the color depth needed for advanced video editors to color grade videos. 
The 4:2:2 format provides double the color information with just a 1.3x increase in RAW file size offering an ideal balance for video editing workflows.Decoding 4:2:2 video can be challenging due to the increased file sizes. GeForce RTX 50 Series GPUs include 4:2:2 hardware support that can decode up to eight times the 4K 60 frames per second (fps) video sources per decoder, enabling smooth multi-camera video editing.The GeForce RTX 5090 GPU is equipped with three encoders and two decoders, the GeForce RTX 5080 GPU includes two encoders and two decoders, the 5070 Ti GPUs has two encoders with a single decoder, and the GeForce RTX 5070 GPU includes a single encoder and decoder. These multi-encoder and decoder setups, paired with faster GPUs, enable the GeForce RTX 5090 to export video 60% faster than the GeForce RTX 4090 and at 4x speed compared with the GeForce RTX 3090.GeForce RTX 50 Series GPUs also feature the ninth-generation NVIDIA video encoder, NVENC, that offers a 5% improvement in video quality on HEVC and AV1 encoding (BD-BR), as well as a new AV1 Ultra Quality mode that achieves 5% more compression at the same quality. They also include the sixth-generation NVIDIA decoder, with 2x the decode speed for H.264 video.NVIDIA is collaborating with Adobe Premiere Pro, Blackmagic Designs DaVinci Resolve, Capcut and Wondershare Filmora to integrate these technologies, starting in February.3D video is starting to catch on thanks to the growth of VR, AR and mixed reality headsets. The new RTX 50 Series GPUs also come with support for MV-HEVC codecs to unlock such formats in the near future.Livestreaming EnhancedLivestreaming is a juggling act, where the streamer has to entertain the audience, produce a show and play a video game all at the same time. 
Top streamers can afford to hire producers and moderators to share the workload, but most have to manage these responsibilities on their own and often in long shifts until now.Streamlabs, a Logitech brand and leading provider of broadcasting software and tools for content creators, is collaborating with NVIDIA and Inworld AI to create the Streamlabs Intelligent Streaming Assistant.Streamlabs Intelligent Streaming Assistant is an AI agent that can act as a sidekick, producer and technical support. The sidekick that can join streams as a 3D avatar to answer questions, comment on gameplay or chats, or help initiate conversations during quiet periods. It can help produce streams, switching to the most relevant scenes and playing audio and video cues during interesting gameplay moments. It can even serve as an IT assistant that helps configure streams and troubleshoot issues.Streamlabs Intelligent Streaming Assistant is powered by NVIDIA ACE technologies for creating digital humans and Inworld AI, an AI framework for agentic AI experiences. The assistant will be available later this year.Millions have used the NVIDIA Broadcast app to turn offices and dorm rooms into home studios using AI-powered features that improve audio and video quality without needing expensive, specialized equipment.Two new AI-powered beta effects are being added to the NVIDIA Broadcast app.The first, Studio Voice, enhances the sound of a users microphone to match that of a high-quality microphone. The other, Virtual Key Light, can relight a subjects face to deliver even coverage as if it were well-lit by two lights.Because they harness demanding AI models, these beta features are recommended for video conferencing or non-gaming livestreams using a GeForce RTX 5080 GPU or higher. 
NVIDIA is working to expand these features to more GeForce RTX GPUs in future updates.

The NVIDIA Broadcast upgrade also includes an updated user interface that allows users to apply more effects simultaneously, as well as improvements to the background noise removal, virtual background and eye contact effects.

The updated NVIDIA Broadcast app will be available in February.

Livestreamers can also benefit from the NVENC 5% BD-BR video quality improvement for HEVC and AV1 in the latest beta of Twitch's Enhanced Broadcast feature in OBS, and the improved AV1 encoder for streaming in Discord or YouTube.

RTX Video, an AI feature that enhances video playback on popular internet browsers like Google Chrome and Microsoft Edge, and locally with Video Super Resolution and HDR, is getting an update to decrease GPU usage by 30%, expanding the lineup of GeForce RTX GPUs that can run Video Super Resolution with higher quality.

The RTX Video update is slated for a future NVIDIA App release.

Unprecedented 3D Render Performance

The GeForce RTX 5090 GPU offers 32GB of GPU memory, the largest of any GeForce RTX GPU ever, marking a 33% increase over the GeForce RTX 4090 GPU. This lets 3D artists build larger, richer worlds while using multiple applications simultaneously. Plus, new RTX 50 Series fourth-generation RT Cores can run 3D applications 40% faster.

DLSS 4 debuts Multi Frame Generation to boost frame rates by using AI to generate up to three frames per rendered frame. This enables animators to smoothly navigate a scene with 4x as many frames, or render 3D content at 60 fps or more.

D5 Render and Chaos Vantage, two popular professional-grade 3D apps for architects and designers, will add support for DLSS 4 in February.

3D artists have adopted generative AI to boost productivity in generating draft 3D meshes, HDRi maps or even animations to prototype a scene.
At CES, Stability AI announced SPAR3D, its new 3D model that can generate 3D meshes from images in seconds with RTX acceleration.

NVIDIA RTX Remix, a modding platform that lets modders capture game assets, automatically enhance materials with generative AI tools and create stunning RTX remasters with full ray tracing, supports DLSS 4, increasing graphical fidelity and frame rates to maximize realism and immersion during gameplay.

RTX Remix soon plans to support Neural Radiance Cache, a neural shader that uses AI to train on live game data and estimate per-pixel accurate indirect lighting. RTX Remix creators can also expect access to RTX Skin in their mods, the first ray-traced sub-surface scattering implementation in games. With RTX Skin, RTX Remix mods are expected to feature characters with new levels of realism, as light will reflect and propagate through their skin, grounding them in the worlds they inhabit.

GeForce RTX 5090 and 5080 GPUs will be available for purchase starting Jan. 30, followed by GeForce RTX 5070 Ti and 5070 GPUs in February and RTX 50 Series laptops in March.

All systems equipped with GeForce RTX GPUs include the NVIDIA Studio platform optimizations, with over 130 GPU-accelerated content creation apps, as well as NVIDIA Studio Drivers, tested extensively and released monthly to enhance performance and maximize stability in popular creative applications.

Stay tuned for more updates on the GeForce RTX 50 Series. Learn more about how the GeForce RTX 50 Series supercharges gaming, and check out all of NVIDIA's announcements at CES.

Every month brings new creative app updates and optimizations powered by the NVIDIA Studio.

Follow NVIDIA Studio on Instagram, X and Facebook. Access tutorials on the Studio YouTube channel and get updates directly in your inbox by subscribing to the Studio newsletter.

See notice regarding software product information.
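
As a quick check on the video section's claim that 4:2:2 carries double the color information of 4:2:0 for about 1.3x the raw size, the sketch below counts samples using the standard J:a:b chroma subsampling notation. It assumes uncompressed video with the same bit depth for every luma and chroma sample; the function name `samples_per_block` is illustrative and does not come from the article.

```python
from fractions import Fraction


def samples_per_block(j: int, a: int, b: int) -> tuple[int, int]:
    """Return (luma, chroma) sample counts for one block that is
    j pixels wide and 2 rows tall, described in J:a:b notation."""
    luma = 2 * j            # one luma sample per pixel, two rows
    chroma = 2 * (a + b)    # a + b chroma positions, each holding Cb and Cr
    return luma, chroma


luma_420, chroma_420 = samples_per_block(4, 2, 0)
luma_422, chroma_422 = samples_per_block(4, 2, 2)

# Ratio of chroma (color) samples: 4:2:2 versus 4:2:0.
chroma_ratio = Fraction(chroma_422, chroma_420)

# Ratio of total samples, a proxy for raw size at equal bit depth.
size_ratio = Fraction(luma_422 + chroma_422, luma_420 + chroma_420)

print(f"chroma samples: {chroma_ratio}x")
print(f"raw size: {float(size_ratio):.2f}x")
```

Under these assumptions the chroma sample count doubles while the total sample count grows by a factor of 4/3, which is consistent with the "double the color information" and "1.3x" figures quoted in the article.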

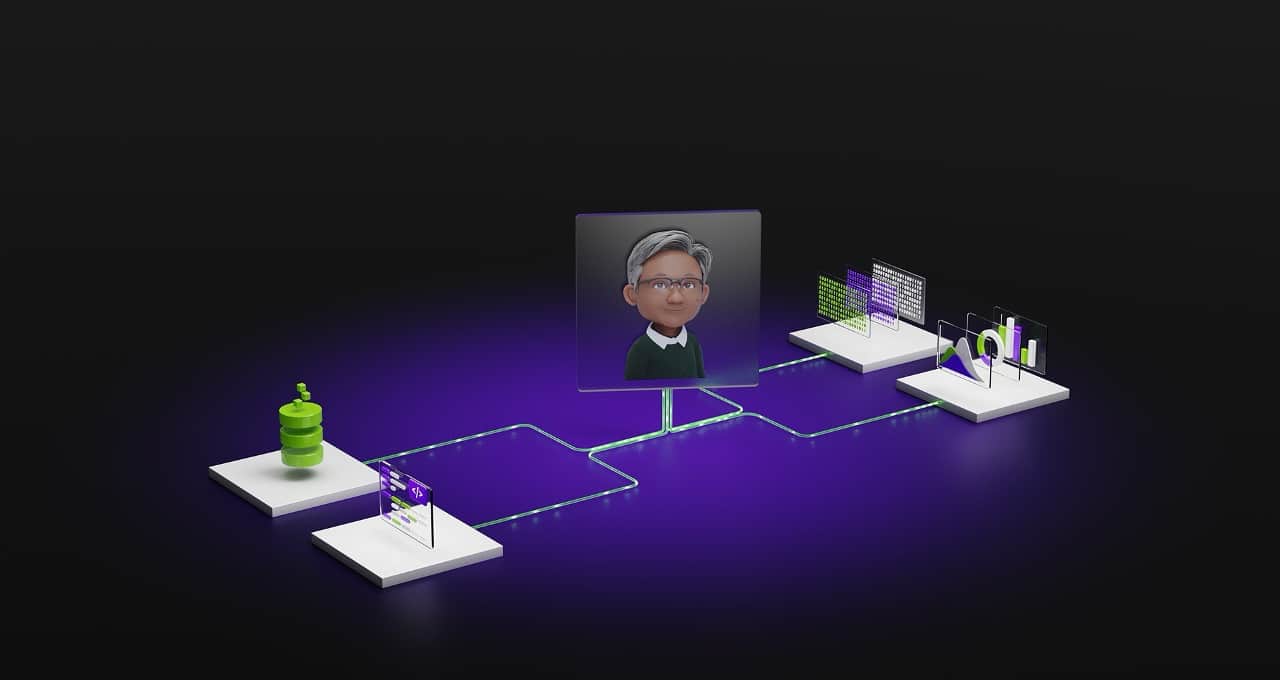

BLOGS.NVIDIA.COM

NVIDIA Announces Nemotron Model Families to Advance Agentic AI

Artificial intelligence is entering a new era, agentic AI, where teams of specialized agents can help people solve complex problems and automate repetitive tasks.

With custom AI agents, enterprises across industries can manufacture intelligence and achieve unprecedented productivity. These advanced AI agents require a system of multiple generative AI models optimized for agentic AI functions and capabilities. This complexity means that the need for powerful, efficient, enterprise-grade models has never been greater.

To provide a foundation for enterprise agentic AI, NVIDIA today announced the Llama Nemotron family of open large language models (LLMs). Built with Llama, the models can help developers create and deploy AI agents across a range of applications including customer support, fraud detection, and product supply chain and inventory management optimization.

To be effective, many AI agents need both language skills and the ability to perceive the world and respond with the appropriate action.

With new NVIDIA Cosmos Nemotron vision language models (VLMs) and NVIDIA NIM microservices for video search and summarization, developers can build agents that analyze and respond to images and video from autonomous machines, hospitals, stores and warehouses, as well as sports events, movies and news. For developers seeking to generate physics-aware videos for robotics and autonomous vehicles, NVIDIA today separately announced NVIDIA Cosmos world foundation models.

Open Llama Nemotron Models Optimize Compute Efficiency, Accuracy for AI Agents

Built with Llama foundation models, one of the most popular commercially viable open-source model collections, downloaded over 650 million times, NVIDIA Llama Nemotron models provide optimized building blocks for AI agent development.
This builds on NVIDIA's commitment to developing state-of-the-art models, such as Llama 3.1 Nemotron 70B, now available through the NVIDIA API catalog.

Llama Nemotron models are pruned and trained with NVIDIA's latest techniques and high-quality datasets for enhanced agentic capabilities. They excel at instruction following, chat, function calling, coding and math, while being size-optimized to run on a broad range of NVIDIA accelerated computing resources.

"Agentic AI is the next frontier of AI development, and delivering on this opportunity requires full-stack optimization across a system of LLMs to deliver efficient, accurate AI agents," said Ahmad Al-Dahle, vice president and head of GenAI at Meta. "Through our collaboration with NVIDIA and our shared commitment to open models, the NVIDIA Llama Nemotron family built on Llama can help enterprises quickly create their own custom AI agents."

Leading AI agent platform providers including SAP and ServiceNow are expected to be among the first to use the new Llama Nemotron models.

"AI agents that collaborate to solve complex tasks across multiple lines of the business will unlock a whole new level of enterprise productivity beyond today's generative AI scenarios," said Philipp Herzig, chief AI officer at SAP. "Through SAP's Joule, hundreds of millions of enterprise users will interact with these agents to accomplish their goals faster than ever before. NVIDIA's new open Llama Nemotron model family will foster the development of multiple specialized AI agents to transform business processes."

"AI agents make it possible for organizations to achieve more with less effort, setting new standards for business transformation," said Jeremy Barnes, vice president of platform AI at ServiceNow.
"The improved performance and accuracy of NVIDIA's open Llama Nemotron models can help build advanced AI agent services that solve complex problems across functions, in any industry."

The NVIDIA Llama Nemotron models use NVIDIA NeMo for distilling, pruning and alignment. Using these techniques, the models are small enough to run on a variety of computing platforms while providing high accuracy as well as increased model throughput.

The Llama Nemotron model family will be available as downloadable models and as NVIDIA NIM microservices that can be easily deployed on clouds, data centers, PCs and workstations. They offer enterprises industry-leading performance with reliable, secure and seamless integration into their agentic AI application workflows.

Customize and Connect to Business Knowledge With NVIDIA NeMo

The Llama Nemotron and Cosmos Nemotron model families are coming in Nano, Super and Ultra sizes to provide options for deploying AI agents at every scale.

- Nano: The most cost-effective model optimized for real-time applications with low latency, ideal for deployment on PCs and edge devices.
- Super: A high-accuracy model offering exceptional throughput on a single GPU.
- Ultra: The highest-accuracy model, designed for data-center-scale applications demanding the highest performance.

Enterprises can also customize the models for their specific use cases and domains with NVIDIA NeMo microservices to simplify data curation, accelerate model customization and evaluation, and apply guardrails to keep responses on track.

With NVIDIA NeMo Retriever, developers can also integrate retrieval-augmented generation capabilities to connect models to their enterprise data.

And using NVIDIA Blueprints for agentic AI, enterprises can quickly create their own applications using NVIDIA's advanced AI tools and end-to-end development expertise.
In fact, NVIDIA Cosmos Nemotron, NVIDIA Llama Nemotron and NeMo Retriever supercharge the new NVIDIA Blueprint for video search and summarization, announced separately today.

NeMo, NeMo Retriever and NVIDIA Blueprints are all available with the NVIDIA AI Enterprise software platform.

Availability

Llama Nemotron and Cosmos Nemotron models will be available soon as hosted application programming interfaces and for download on build.nvidia.com and Hugging Face. Access for development, testing and research is free for members of the NVIDIA Developer Program.

Enterprises can run Llama Nemotron and Cosmos Nemotron NIM microservices in production with the NVIDIA AI Enterprise software platform on accelerated data center and cloud infrastructure.

Sign up to get notified about Llama Nemotron and Cosmos Nemotron models, and join NVIDIA at CES.

See notice regarding software product information.

WWW.POLYGON.COM

Catan, the classic gateway board game, gets a bright new 6th edition this year

Klaus Teuber's Catan, widely credited with kicking off the modern board gaming renaissance, turns 30 years old this year. To celebrate, Catan, the global company behind the beloved tabletop brand, is refreshing the look and feel of the classic title for the first time in a decade with a revised sixth edition launching in April 2025. New, revised expansions and extensions are also currently in production for release. Polygon sat down with managing director Benjamin Teuber, son of the original game's late designer, to discuss the upcoming release.

Catan tells a story about Vikings colonizing islands long before the dawn of modern Europe, asking players to make their way in its fictional world by trading resources like wood, grain, and sheep. The game's popularity directly contributed to changing American tastes for board games. Today, domestic audiences have a strong preference for these so-called Euro-style board games, which, like Catan, eschew direct conflict and instead favor a collaborative style of gameplay with more limited randomness and dice-rolling.

Benjamin Teuber told Polygon that even he is continually surprised by the impact his father had on the industry.

"It changed so much of how [board] gaming is perceived," he said in a recent video call, "and how you bring people to the table."

Ten years ago Catan changed its name, dropping the "The Settlers of" preamble and putting the island's name up front. This time around the biggest change by far is the new box art, which features a brighter and more naturalistic expression of the Catan universe complete with hex-shaped fields in the background, which directly mirror the look of the game on the table.
There's also a very heartfelt Easter egg embedded in the cover of the base game: a tiny Klaus Teuber driving a cart full of wheat up the lane.

Tile art has also been updated and the components, like roads and buildings, are a hair larger, while a helpful plastic pack-in makes drawing cards easier. However, for existing fans, Catan should look and play very much like it has in the past. Importantly, it will also remain compatible with existing expansions, so fans can be assured of cross compatibility with the supplements they already own. Benjamin Teuber said that he hopes the game's appeal extends well into the next three decades.

"My personal opinion is that the best way to make a game known is to play it and to make people play it," he said. "I think there are a few things which you just have to do nowadays, which are a good video tutorial of explaining the game, giving easy access to the game, maybe a good app to teach the game. But then you need to make people just experience it."

Catan, alongside the Catan: Cities and Knights and Catan: Seafarers expansions, will be available globally in the 6th edition beginning April 4, 2025, alongside their five- and six-player extensions.

LIFEHACKER.COM

CES 2025: This Beach Umbrella Can Charge Your Phone

We may earn a commission from links on this page.

If what you enjoy most about the beach is sitting in the shade, sipping a cold beverage, and scrolling on your phone, have I got exciting news for you: Today at CES, Anker Solix announced the imminent release of a new Solar Beach Umbrella, as well as an electric cooler, the EverFrost 2. When used together, these devices can quite literally work to keep you cool this summer.

Outdoor umbrellas with solar panels aren't entirely new, and the options currently on the market typically have a small solar-capturing panel that can power lights built into the frame. However, Anker is approaching solar integration in its outdoor furniture differently. The panels span the entire crown of the umbrella, offering 100W of maximum solar input, which is the same as a standard solar panel. They seem wildly efficient at producing energy, too: Anker promises 200% solar generation in low light (20,000 lux), and 130% in bright light intensity (50,000 lux). This means the umbrella will create a generous amount of solar-driven energy even when it is overcast.

What will you do with all that energy? Probably recharge your phone, or perhaps a speaker. But Anker thinks you might also want to plug in any of the many power stations it sells, to get even more juice for your devices. Maybe you'll plug it directly into the EverFrost 2, to keep food and drinks frosty? (Though fully charged, the cooler will last 52 hours using only the onboard battery, so you'd only need the umbrella to power it if you're planning to spend a long time at the beach.) The umbrella has XT-60 and USB-C ports, and the battery on the cooler also acts as a power bank, offering 60W USB-C and 12W USB-A charging.

The umbrella is 84 inches tall and 74 inches in diameter, enough to keep a few people shaded during the day. The solar panels are waterproof (rated IP67) and use sunshade fabric to reduce heat under the umbrella. Anker hasn't indicated what the cooler or umbrella weigh, an important omission given that hauling these things to the beach might be a lot of work, what with all that battery power onboard.

It's more likely you'll keep the umbrella on your patio, where it will become an additional source of power in your yard. Anker Solix is solidly a battery company. They make terrific backup batteries, from tiny portables to whole-home backups. They have a whole line of solar generators, and the solar panels to go with them. While solar power was for years relegated to powering outdoor goods that you didn't have electricity for, and was generally unreliable, these days I actively choose solar over batteries because the technology has improved phenomenally. The solar panels on my outdoor security cameras perform astoundingly, even through weeks of overcast weather. While umbrella and cooler companies have previously tried to bring solar into their products, the results haven't always been reliable, and the part they struggled with, the solar panels, is precisely the tech that Anker Solix is known for.

But more importantly, I think this speaks to where solar is headed. Consider what smart plugs can tell us: For years inside the home, we have been able to install smart plugs to turn common items into smart devices. Eventually, that connectivity made its way to the devices themselves. By bringing solar panels to devices themselves, Anker Solix is reducing the need for its own power stations. Imagine a future where you don't need to lug around a giant power bank, but only the devices you want to use, all of which will have onboard solar power. It will be interesting to see how Anker leverages solar moving forward to build out other experiences. Why not patio furniture that heats in winter or cools in summer?

The Anker Solix Beach Umbrella will be available this summer, with pricing to be announced. The EverFrost 2 will be available Feb. 21 in three sizes, starting at $699.

WWW.ENGADGET.COM

MSI made a CPU cooler with a tiny built-in turntable and it's pure fun

One of the things I love the most about CES is finding all the silly one-offs and concept products that might never see full production. At CES 2025, MSI made something truly joyful when it created a custom CPU cooler that features a built-in turntable.

The water block's official name is the Mag Coreliquid A13 concept, though a name doesn't really matter because MSI says it doesn't have plans to turn it into an actual retail device. That's kind of a bummer since not only does it mean you won't be able to put a fun little spinning table inside your desktop, it also sucks because the cooler that the A13 is based on, the Mag Coreliquid A15 360, is a real product that has some neat specs. It features an offset CPU mount that can improve the performance of recent Intel chips (like the Core Ultra 200S) that have hotspots in unusual positions.

Sam Rutherford (@samrutherford), January 7, 2025: "MSI made a concept CPU water block for CES 2025 that has a built-in turntable and it's kind of awesome. Sadly, there are no plans to put it into actual production. Also, the Lucky the dragon figure does not come included. @engadget pic.twitter.com/X70XJeAq8I"

Now I fully admit that the fun of having a spinning table inside your PC might be lost on a lot of people. But then again, just look at Lucky (that's the name of MSI's dragon mascot) twirling in place while the desktop churns along. And what's better is that you can raise the clear lid on the water block and put anything you want inside. Think about a fancy watch or maybe a disco ball. Wouldn't that be a hoot, especially with all those RGB lights nearby? Honestly, the whole setup is kind of mesmerizing.

But alas, the Mag Coreliquid A13 will never be yours. That is, unless people make enough noise and keep bugging MSI until they make it for real. The power is in your hands.

This article originally appeared on Engadget at https://www.engadget.com/gaming/pc/msi-made-a-cpu-cooler-with-a-tiny-built-in-turntable-and-its-pure-fun-063636564.html?src=rss
