WWW.YANKODESIGN.COM
Portable NAS storage is also a computer with a built-in touchscreen

After a period of ambiguity over the buzzword, the promise of cloud storage enticed many people to give up their reliance on local hard drives and SSDs, at least for files they need to be accessible anywhere they go. But while that ubiquity is good for some cases, especially online collaboration, it also has its downsides when it comes to speed, reliable access, and pricing.

Network-attached storage (NAS) products tried to sell the idea of having your own personal cloud at home, but that really only worked if you either stayed at home or had remote access to your home network from anywhere. This rather unusual portable NAS tries to take that even further by letting you carry that cloud with you and, even better or stranger, actually use it as a tablet computer with a tiny screen, if only for a few minutes at a time.

Designer: UnifyDrive (via Liliputing)

Technically speaking, NAS devices are already mini PCs in their own right, with a CPU, memory, and custom operating system. Most of the time, however, you access them from a computer at home or remotely via an Internet connection, so just like mini PCs, they come headless, without their own monitors. The UnifyDrive UP6, however, is anything but typical: not only does it come with a built-in battery, it also has a touchscreen on the side, literally.

The UnifyDrive UP6 sounds more like a portable SSD in Hulk mode, fitting six M.2 2280 SSDs for a total of 48TB of storage. The choice of storage technology, versus more affordable hard drives, not only keeps the NAS compact but also makes it resilient to movements and bumps. It is, after all, meant to be carried around, giving near-instant access to data either through a cable or a local wireless network. It even has Bluetooth support to let phones and cameras transfer data directly to it.

The built-in battery not only lets you use the NAS without plugging it in but also acts as an instant UPS (uninterruptible power supply) for emergencies. The touch-enabled screen, on the other hand, lets you use the UnifyDrive UP6 directly even without a connected computer, managing and sharing files, browsing the Web, or even watching media stored there. Unsurprisingly, there's also an element of AI in the mix, allowing users to search photos and videos with facial recognition and natural language queries, all without even having to connect to the Internet.

The post "Portable NAS storage is also a computer with a built-in touchscreen" first appeared on Yanko Design.

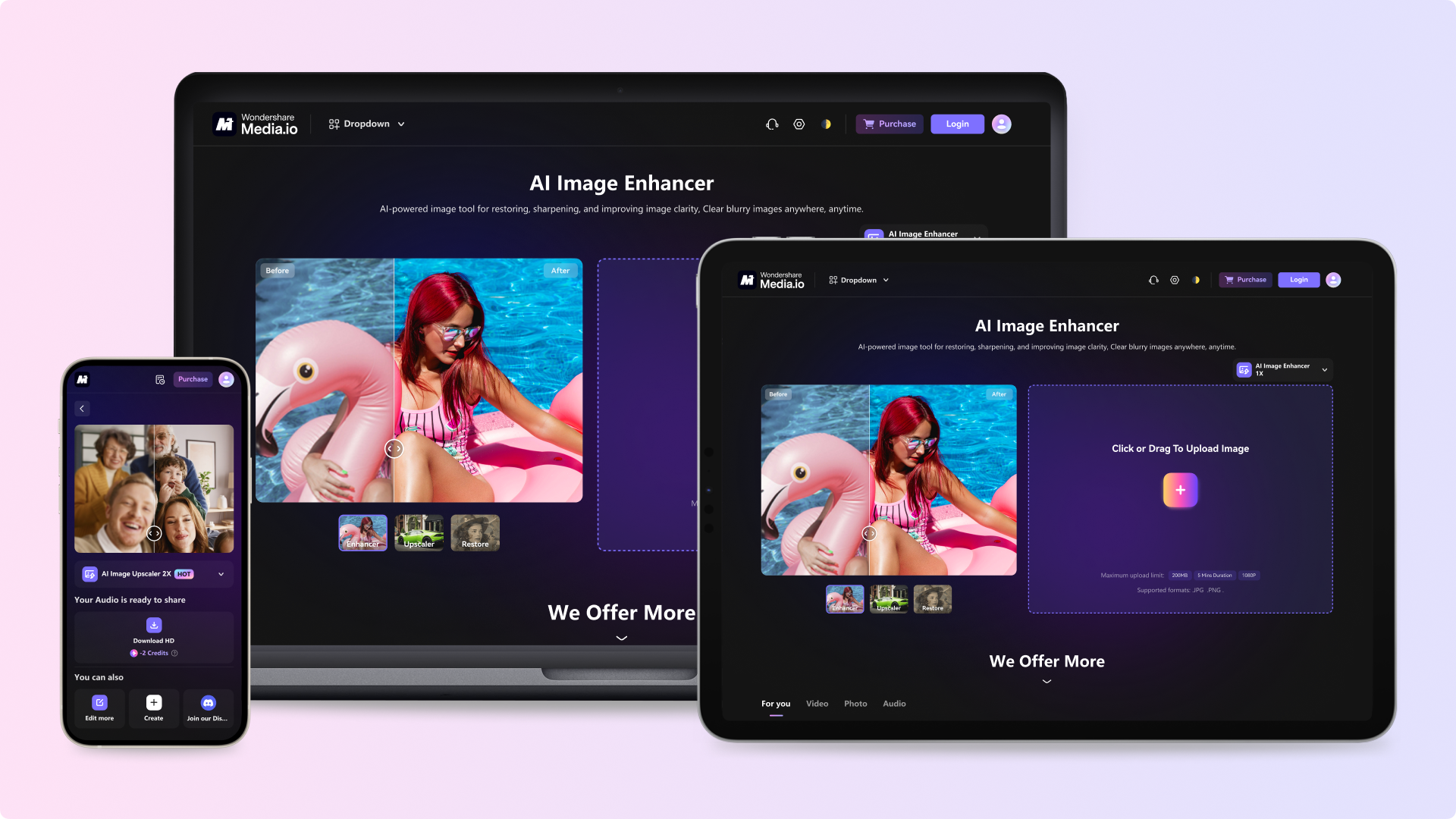

WWW.CREATIVEBLOQ.COM
How the Media.io AI Image Enhancer can supercharge your creative assets

4 features that will banish your photography woes.

WWW.WIRED.COM
Far-Right Extremists Are LARPing as Emergency Workers in Los Angeles

White supremacists and MAGA livestreamers are using the wildfires to solicit donations, juice social media engagement, and recruit new followers.

WWW.MACWORLD.COM
New Mac malware can bypass Apple's XProtect security scanner

A new report by security firm Check Point Research provides details on Banshee Stealer, Mac malware that attackers use to gain access to web browser data, such as login information and browser history, as well as crypto wallets. It sounds scary, but there isn't too much to worry about.

Banshee Stealer is a new version of the malware that was discovered in July 2024. The malware has been updated with encryption taken from Apple's XProtect, according to Check Point Research. XProtect is used by macOS as a layer of defense against malware, but Banshee Stealer's new encryption allowed it to sneak by XProtect.

Check Point Software reports that the browsers vulnerable to the malware are Google Chrome, Brave, Microsoft Edge, Opera, Vivaldi, and Yandex, browsers that are based on the Chromium web engine that renders the websites within a browser. Safari, which is not on the list, is based on Apple's WebKit engine.

Banshee Stealer is mainly distributed through GitHub repositories of cracked software. It masquerades as other software that users are trying to download and also has a Windows counterpart called Lumma Stealer. Once installed on your system, it uses tricks to steal login data, including fraudulent browser extensions and pop-ups designed to look like legitimate macOS dialog boxes to get users to enter their system passwords.

However, while mainstream media outlets have picked up on Banshee Stealer, security researcher Patrick Wardle points out on X that the threat is being blown 1000% out of proportion. Not only is the malware found only on sites that peddle mostly illegitimate software, but the user also has to actively bypass macOS's Gatekeeper precautions to perform an installation.

[Image: post by Patrick Wardle on X]

How to protect yourself from malware

The easiest way to protect yourself from malware is to avoid downloading software from repositories such as GitHub and other download sites. Software in the Mac App Store has been vetted by Apple and is the safest way to get apps. If you prefer not to patronize the Mac App Store, then buy software directly from the developer and their website. If you insist on using cracked software, then you will always run the risk of malware exposure.

Apple releases security patches through OS updates, so installing them as soon as possible is important. And as always, when downloading software, get it from trusted sources, such as the App Store (which makes security checks of its software) or directly from the developer. Macworld has several guides to help, including a guide on whether or not you need antivirus software, a list of Mac viruses, malware, and trojans, and a comparison of Mac security software.

WWW.COMPUTERWORLD.COM
Smart glasses' appeal comes into focus at CES 2025

Smart glasses attracted a lot of attention at last week's Consumer Electronics Show, with a range of devices on display that combine lightweight frames with functionality such as heads-up displays and AI-powered assistants.

These contrast with the mixed-reality headsets that created a buzz early in 2024, including Meta's Quest 3 and Apple's Vision Pro, both of which are much heavier devices designed for shorter periods of use.

[Image: Apple's Vision Pro headset captured a lot of attention in 2024, but lighter-weight smart glasses were the rage at CES 2025. Credit: JLStock / Shutterstock]

"This year, the focus definitely seemed to be more on smart glasses than on headsets, in part because the Ray-Ban Meta smart glasses were a huge hit last year," said Avi Greengart, president and lead analyst at Techsponential.

Smart glasses require purposeful compromise when it comes to balancing functionality with a lightweight form factor, and different vendors are making different decisions to achieve this, said Greengart.

Halliday's smart glasses, for example, project text and images directly into the wearer's field of view. This is perceived as a 3.5-in. screen that appears in the upper-right corner of the user's view and remains visible even in bright sunlight, Halliday claims. A proactive AI assistant, which requires a Bluetooth connection to a smartphone, enables features such as real-time translation in up to 40 languages, live navigation for directions, and teleprompter-style display of notes.

[Image: Halliday's smart glasses come in three different colors. Credit: Halliday]

At 1.2 ounces, they're even lighter than Meta's glasses (which at 1.7 ounces are only marginally heavier than regular Ray-Bans). Halliday's smart glasses are available for preorder for $489, with shipping expected to begin at the end of the first quarter of this year.

Even Realities also offers a minimalist take with its G1 smart glasses, which start at $599. These include a micro-LED projector that beams a heads-up display onto each lens, while an AI assistant enables live translation and navigation when paired with a smartphone.

Another vendor in the space, Rokid, recently announced its Glasses, a lightweight (1.7 ounces) device aimed at continuous use through the day. In addition to a simple green text display and intelligent assistant, Rokid's device also packs a 12-megapixel camera for image and video capture into the frames.

Nuance Audio, owned by Meta's Ray-Ban partner EssilorLuxottica, has an even more focused product: glasses that integrate a hearing aid into the frames. "When you need a bit more help hearing someone, you turn them on and the glasses amplify the sound of the person you are looking at and direct it to speakers on the glasses' stems that are aimed at your ears," said Greengart.

Meta is rumored to have an updated version of its Ray-Ban devices slated for release later this year. It will reportedly feature a simple display to show notifications and responses from Meta's AI assistant. Meta has sold more than a million Ray-Ban smart glasses to date, according to Counterpoint Research stats.

"Most of these glasses are ones that I wouldn't mind wearing out in public," said Ramon Llamas, research director with IDC's devices and displays team. "We're finally seeing designs that look and feel less bulky, and we're getting into a bunch of styles instead of the usual wayfarer design."

Other glasses, such as Xreal's One Pro and TCL's RayNeo X2 (marketed as augmented reality rather than smart glasses), are heftier and act as a portable display, with the ability to watch videos and access apps when tethered to a laptop or smartphone.

Although demand for smart glasses is still in its infancy, shipments are expected to see a compound annual growth rate of 85.7% through to 2028, according to recent IDC stats. These extended reality devices will soon be the second largest category within the broader AR/VR market, IDC predicts, with several million devices sold each year.

[Image: Mixed reality headsets such as Apple's Vision Pro and Meta's Quest products will continue to account for the largest share of the AR/VR market, according to IDC, with extended reality smart glasses in second place. Credit: IDC]

Though many of the devices shown at CES are largely aimed at consumers, some smart glasses are also being tailored to enterprise customers (Vuzix being an example).

As the technology matures, Llamas sees a growing range of business use cases for smart glasses: capturing visual information hands-free, for instance, or live translation, which could also be useful for business travelers.

"This is where having access to business apps can help, especially if you can speak into those apps to execute a task and the smart glasses can handle that," said Llamas.

"I think we're still a ways off from that actually taking place, so for now, expect smart glasses to be mostly within the realm of consumers, specifically tech enthusiasts and cognoscenti."

WWW.TECHNOLOGYREVIEW.COM
Training robots in the AI-powered industrial metaverse

Imagine the bustling floors of tomorrow's manufacturing plant: Robots, well-versed in multiple disciplines through adaptive AI education, work seamlessly and safely alongside human counterparts. These robots can transition effortlessly between tasks, from assembling intricate electronic components to handling complex machinery assembly. Each robot's unique education enables it to predict maintenance needs, optimize energy consumption, and innovate processes on the fly, dictated by real-time data analyses and learned experiences in their digital worlds.

Training for robots like this will happen in a virtual school, a meticulously simulated environment within the industrial metaverse. Here, robots learn complex skills on accelerated timeframes, acquiring in hours what might take humans months or even years.

Beyond traditional programming

Training for industrial robots was once like a traditional school: rigid, predictable, and limited to practicing the same tasks over and over. But now we're at the threshold of the next era. Robots can learn in virtual classrooms: immersive environments in the industrial metaverse that use simulation, digital twins, and AI to mimic real-world conditions in detail. This digital world can provide an almost limitless training ground that mirrors real factories, warehouses, and production lines, allowing robots to practice tasks, encounter challenges, and develop problem-solving skills.

What once took days or even weeks of real-world programming, with engineers painstakingly adjusting commands to get the robot to perform one simple task, can now be learned in hours in virtual spaces. This approach, known as simulation to reality (Sim2Real), blends virtual training with real-world application, bridging the gap between simulated learning and actual performance.

Although the industrial metaverse is still in its early stages, its potential to reshape robotic training is clear, and these new ways of upskilling robots can enable unprecedented flexibility.

Italian automation provider EPF found that AI shifted the company's entire approach to developing robots. "We changed our development strategy from designing entire solutions from scratch to developing modular, flexible components that could be combined to create complete solutions, allowing for greater coherence and adaptability across different sectors," says EPF's chairman and CEO Franco Filippi.

Learning by doing

AI models gain power when trained on vast amounts of data, such as large sets of labeled examples, learning categories or classes by trial and error. In robotics, however, this approach would require hundreds of hours of robot time and human oversight to train a single task. Even the simplest of instructions, like "grab a bottle," for example, could result in many varied outcomes depending on the bottle's shape, color, and environment. Training then becomes a monotonous loop that yields little significant progress for the time invested.

Building AI models that can generalize and then successfully complete a task regardless of the environment is key for advancing robotics. Researchers from New York University, Meta, and Hello Robot have introduced robot utility models that achieve a 90% success rate in performing basic tasks across unfamiliar environments without additional training. Large language models are used in combination with computer vision to provide continuous feedback to the robot on whether it has successfully completed the task. This feedback loop accelerates the learning process by combining multiple AI techniques and avoids repetitive training cycles.

Robotics companies are now implementing advanced perception systems capable of training and generalizing across tasks and domains. For example, EPF worked with Siemens to integrate visual AI and object recognition into its robotics to create solutions that can adapt to varying product geometries and environmental conditions without mechanical reconfiguration.

Learning by imagining

Scarcity of training data is a constraint for AI, especially in robotics. However, innovations that use digital twins and synthetic data to train robots have significantly advanced on previously costly approaches.

For example, Siemens' SIMATIC Robot Pick AI expands on this vision of adaptability, transforming standard industrial robots, once limited to rigid, repetitive tasks, into complex machines. Trained on synthetic data (virtual simulations of shapes, materials, and environments), the AI prepares robots to handle unpredictable tasks, like picking unknown items from chaotic bins, with over 98% accuracy. When mistakes happen, the system learns, improving through real-world feedback. Crucially, this isn't just a one-robot fix. Software updates scale across entire fleets, upgrading robots to work more flexibly and meet the rising demand for adaptive production.

Another example is the robotics firm ANYbotics, which generates 3D models of industrial environments that function as digital twins of real environments. Operational data, such as temperature, pressure, and flow rates, are integrated to create virtual replicas of physical facilities where robots can train. An energy plant, for example, can use its site plans to generate simulations of inspection tasks it needs robots to perform in its facilities. This speeds the robots' training and deployment, allowing them to perform successfully with minimal on-site setup.

Simulation also allows for the near-costless multiplication of robots for training. "In simulation, we can create thousands of virtual robots to practice tasks and optimize their behavior. This allows us to accelerate training time and share knowledge between robots," says Péter Fankhauser, CEO and co-founder of ANYbotics.

Because robots need to understand their environment regardless of orientation or lighting, ANYbotics and partner Digica created a method of generating thousands of synthetic images for robot training. By removing the painstaking work of collecting huge numbers of real images from the shop floor, the time needed to teach robots what they need to know is drastically reduced.

Similarly, Siemens leverages synthetic data to generate simulated environments to train and validate AI models digitally before deployment into physical products. "By using synthetic data, we create variations in object orientation, lighting, and other factors to ensure the AI adapts well across different conditions," says Vincenzo De Paola, project lead at Siemens. "We simulate everything from how the pieces are oriented to lighting conditions and shadows. This allows the model to train under diverse scenarios, improving its ability to adapt and respond accurately in the real world."

Digital twins and synthetic data have proven powerful antidotes to data scarcity and costly robot training. Robots that train in artificial environments can be prepared quickly and inexpensively for wide varieties of visual possibilities and scenarios they may encounter in the real world. "We validate our models in this simulated environment before deploying them physically," says De Paola. "This approach allows us to identify any potential issues early and refine the model with minimal cost and time."

This technology's impact can extend beyond initial robot training. If the robot's real-world performance data is used to update its digital twin and analyze potential optimizations, it can create a dynamic cycle of improvement to systematically enhance the robot's learning, capabilities, and performance over time.

The well-educated robot at work

With AI and simulation powering a new era in robot training, organizations will reap the benefits. Digital twins allow companies to deploy advanced robotics with dramatically reduced setup times, and the enhanced adaptability of AI-powered vision systems makes it easier for companies to alter product lines in response to changing market demands.

The new ways of schooling robots are transforming investment in the field by also reducing risk. "It's a game-changer," says De Paola. "Our clients can now offer AI-powered robotics solutions as services, backed by data and validated models. This gives them confidence when presenting their solutions to customers, knowing that the AI has been tested extensively in simulated environments before going live."

Filippi envisions this flexibility enabling today's robots to make tomorrow's products. "The need in one or two years' time will be for processing new products that are not known today. With digital twins and this new data environment, it is possible to design today a machine for products that are not known yet," says Filippi.

Fankhauser takes this idea a step further. "I expect our robots to become so intelligent that they can independently generate their own missions based on the knowledge accumulated from digital twins," he says. "Today, a human still guides the robot initially, but in the future, they'll have the autonomy to identify tasks themselves."

This content was produced by Insights, the custom content arm of MIT Technology Review. It was not written by MIT Technology Review's editorial staff.

WWW.TECHNOLOGYREVIEW.COMTraining robots in the AI-powered industrial metaverseImagine the bustling floors of tomorrows manufacturing plant: Robots, well-versed in multiple disciplines through adaptive AI education, work seamlessly and safely alongside human counterparts. These robots can transition effortlessly between tasksfrom assembling intricate electronic components to handling complex machinery assembly. Each robots unique education enables it to predict maintenance needs, optimize energy consumption, and innovate processes on the fly, dictated by real-time data analyses and learned experiences in their digital worlds.Training for robots like this will happen in a virtual school, a meticulously simulated environment within the industrial metaverse. Here, robots learn complex skills on accelerated timeframes, acquiring in hours what might take humans months or even years.Beyond traditional programmingTraining for industrial robots was once like a traditional school: rigid, predictable, and limited to practicing the same tasks over and over. But now were at the threshold of the next era. Robots can learn in virtual classroomsimmersive environments in the industrial metaverse that use simulation, digital twins, and AI to mimic real-world conditions in detail. This digital world can provide an almost limitless training ground that mirrors real factories, warehouses, and production lines, allowing robots to practice tasks, encounter challenges, and develop problem-solving skills.What once took days or even weeks of real-world programming, with engineers painstakingly adjusting commands to get the robot to perform one simple task, can now be learned in hours in virtual spaces. 
This approach, known as simulation to reality (Sim2Real), blends virtual training with real-world application, bridging the gap between simulated learning and actual performance.

Although the industrial metaverse is still in its early stages, its potential to reshape robotic training is clear, and these new ways of upskilling robots can enable unprecedented flexibility.

Italian automation provider EPF found that AI shifted the company's entire approach to developing robots. "We changed our development strategy from designing entire solutions from scratch to developing modular, flexible components that could be combined to create complete solutions, allowing for greater coherence and adaptability across different sectors," says EPF's chairman and CEO Franco Filippi.

Learning by doing

AI models gain power when trained on vast amounts of data, such as large sets of labeled examples, learning categories, or classes by trial and error. In robotics, however, this approach would require hundreds of hours of robot time and human oversight to train a single task. Even the simplest of instructions, like "grab a bottle," for example, could result in many varied outcomes depending on the bottle's shape, color, and environment. Training then becomes a monotonous loop that yields little significant progress for the time invested.

Building AI models that can generalize and then successfully complete a task regardless of the environment is key for advancing robotics. Researchers from New York University, Meta, and Hello Robot have introduced robot utility models that achieve a 90% success rate in performing basic tasks across unfamiliar environments without additional training. Large language models are used in combination with computer vision to provide continuous feedback to the robot on whether it has successfully completed the task.
This feedback loop accelerates the learning process by combining multiple AI techniques and avoids repetitive training cycles.

Robotics companies are now implementing advanced perception systems capable of training and generalizing across tasks and domains. For example, EPF worked with Siemens to integrate visual AI and object recognition into its robotics to create solutions that can adapt to varying product geometries and environmental conditions without mechanical reconfiguration.

Learning by imagining

Scarcity of training data is a constraint for AI, especially in robotics. However, innovations that use digital twins and synthetic data to train robots have significantly improved on previously costly approaches.

For example, Siemens' SIMATIC Robot Pick AI expands on this vision of adaptability, transforming standard industrial robots, once limited to rigid, repetitive tasks, into complex machines. Trained on synthetic data (virtual simulations of shapes, materials, and environments), the AI prepares robots to handle unpredictable tasks, like picking unknown items from chaotic bins, with over 98% accuracy. When mistakes happen, the system learns, improving through real-world feedback. Crucially, this isn't just a one-robot fix. Software updates scale across entire fleets, upgrading robots to work more flexibly and meet the rising demand for adaptive production.

Another example is the robotics firm ANYbotics, which generates 3D models of industrial environments that function as digital twins of real environments. Operational data, such as temperature, pressure, and flow rates, are integrated to create virtual replicas of physical facilities where robots can train. An energy plant, for example, can use its site plans to generate simulations of inspection tasks it needs robots to perform in its facilities.
This speeds the robots' training and deployment, allowing them to perform successfully with minimal on-site setup.

Simulation also allows for the near-costless multiplication of robots for training. "In simulation, we can create thousands of virtual robots to practice tasks and optimize their behavior. This allows us to accelerate training time and share knowledge between robots," says Péter Fankhauser, CEO and co-founder of ANYbotics.

Because robots need to understand their environment regardless of orientation or lighting, ANYbotics and partner Digica created a method of generating thousands of synthetic images for robot training. By removing the painstaking work of collecting huge numbers of real images from the shop floor, the time needed to teach robots what they need to know is drastically reduced.

Similarly, Siemens leverages synthetic data to generate simulated environments to train and validate AI models digitally before deployment into physical products. "By using synthetic data, we create variations in object orientation, lighting, and other factors to ensure the AI adapts well across different conditions," says Vincenzo De Paola, project lead at Siemens. "We simulate everything from how the pieces are oriented to lighting conditions and shadows. This allows the model to train under diverse scenarios, improving its ability to adapt and respond accurately in the real world."

Digital twins and synthetic data have proven powerful antidotes to data scarcity and costly robot training. Robots that train in artificial environments can be prepared quickly and inexpensively for wide varieties of visual possibilities and scenarios they may encounter in the real world. "We validate our models in this simulated environment before deploying them physically," says De Paola. "This approach allows us to identify any potential issues early and refine the model with minimal cost and time."

This technology's impact can extend beyond initial robot training.
If the robot's real-world performance data is used to update its digital twin and analyze potential optimizations, it can create a dynamic cycle of improvement to systematically enhance the robot's learning, capabilities, and performance over time.

The well-educated robot at work

With AI and simulation powering a new era in robot training, organizations will reap the benefits. Digital twins allow companies to deploy advanced robotics with dramatically reduced setup times, and the enhanced adaptability of AI-powered vision systems makes it easier for companies to alter product lines in response to changing market demands.

The new ways of schooling robots are transforming investment in the field by also reducing risk. "It's a game-changer," says De Paola. "Our clients can now offer AI-powered robotics solutions as services, backed by data and validated models. This gives them confidence when presenting their solutions to customers, knowing that the AI has been tested extensively in simulated environments before going live."

Filippi envisions this flexibility enabling today's robots to make tomorrow's products. "The need in one or two years' time will be for processing new products that are not known today. With digital twins and this new data environment, it is possible to design today a machine for products that are not known yet," says Filippi.

Fankhauser takes this idea a step further. "I expect our robots to become so intelligent that they can independently generate their own missions based on the knowledge accumulated from digital twins," he says. "Today, a human still guides the robot initially, but in the future, they'll have the autonomy to identify tasks themselves."

This content was produced by Insights, the custom content arm of MIT Technology Review. It was not written by MIT Technology Review's editorial staff.
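
The synthetic-data idea De Paola describes in the article, varying object orientation, lighting, and shadows so a model sees many conditions before deployment, can be illustrated with a minimal sketch. Everything here is hypothetical: the `SceneParams` fields, the `sample_scenes` helper, and the numeric ranges are assumptions chosen for illustration, not details of the Siemens or ANYbotics pipelines, and a real system would pass such parameters to a renderer or simulator rather than print them.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneParams:
    """Hypothetical description of one synthetic training scene."""
    yaw_deg: float          # object rotation about the vertical axis
    light_intensity: float  # relative brightness, 1.0 = nominal
    shadow_strength: float  # 0.0 = no shadow, 1.0 = hard shadow


def sample_scenes(n: int, seed: int = 0) -> list[SceneParams]:
    """Draw n randomized scene configurations.

    The ranges below are assumed values, not taken from the article.
    """
    rng = random.Random(seed)  # seeded so the same dataset can be regenerated
    return [
        SceneParams(
            yaw_deg=rng.uniform(0.0, 360.0),
            light_intensity=rng.uniform(0.3, 1.5),
            shadow_strength=rng.uniform(0.0, 1.0),
        )
        for _ in range(n)
    ]


if __name__ == "__main__":
    # Each sampled configuration would drive one rendered training image.
    for scene in sample_scenes(3):
        print(scene)
```

Seeding the generator is a deliberate choice in this sketch: it lets the same set of scene variations be reproduced when a model is retrained or validated again.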

APPLEINSIDER.COM

Apple joins AI hardware standards consortium to improve server performance

Apple has joined the board of directors for the Ultra Accelerator Link Consortium, giving it more of a say in how the architecture for AI server infrastructure will evolve.

The Ultra Accelerator Link Consortium (UALink) is an open industry standard group for the development of UALink specifications. Because the specifications are a potential key element in the development of artificial intelligence models and accelerators, the standards could be massively beneficial to the future of AI itself.

On Tuesday, it was announced that three more members have been elected to the consortium's board. Apple was one of the trio, alongside Alibaba and Synopsys.


ARCHINECT.COM

FEMA: America's buildings are woefully underprepared for natural disasters

A code adoption tracking resource produced by the Federal Emergency Management Agency (FEMA) that shows the status of different states' compliance with hazard-resistant zoning measures is especially relevant given the recent spate of catastrophic weather events affecting Los Angeles and other American cities.

The BCAT portal includes data through the end of Q4 2024. Overall, just one-third (33%) of all "natural hazard-prone jurisdictions" have successfully adopted the most current hazard-resistant building codes. These codes include protections against damaging wind loads, hurricanes, floods, seismic activity, and tornadoes, and the adoption rate can be taken as a snapshot of the overall readiness of buildings in the U.S. to protect against other kinds of natural disasters.

The past six months have shown clearly how effective these codes are (or are not) at protecting structures against forces such as hurricanes and other extreme weather events.


GAMINGBOLT.COM

Exodus Will Have Long-Term Narrative Consequences Depending on Players' Relationships

Developer Archetype Entertainment recently held a Q&A to provide an update on upcoming sci-fi action RPG Exodus. In the Q&A, creative director James Ohlen and executive producer Chad Robertson revealed some new details about the upcoming title.

The relationships players form with their teammates in Exodus will be a big factor in the game, with the studio revealing that setting out on missions will affect relationships with characters you don't meet for a while. This is because missions in Exodus could take several years, and even decades, to complete.

"You go off on your Exodus Journeys and you leave behind your city and some of your friends and maybe even family members, and you make choices about them," said Ohlen.

An example provided by Ohlen indicated that players could end up not meeting some characters for several decades, and the choices they make will affect characters that are now several years older than when you previously met them.

"Not everyone has to come on an Exodus with you," he explained. "You might leave some behind and when you come back it could be a decade later, it could be four decades later, and those choices will have impacted your relationships with people that are now a decade older or three or four decades older."

This narrative decision stems from the fact that the story of Exodus revolves around humanity looking for a new home in the stars, and journeys at that massive scale will definitely take several years. There is also some level of time dilation happening in the game's story, likely owing to interstellar travel at incredible speeds.

Robertson also revealed that the primary antagonists in the game, referred to as the Mara Yama, will be creepy. This stems from the studio wanting the game's primary enemies to be an evil Celestial civilization that could also manage to be creepy.

The Mara Yama were revealed in a trailer from October, which showcased just how strange and creepy they can be. The civilization doesn't quite have a planet it calls home, and is instead more nomadic in nature, travelling across the stars in its own citadels.

The studio goes on to talk about various other smaller aspects of the game, including how time dilation will affect players, details about the player character (known in the game as the Traveler), and even which characters the developers would like to be if they lived in the universe of Exodus.

Originally unveiled back in 2023, Exodus will be a third-person action RPG that mixes fast-paced action with quite a bit of exploration. The game's narrative is being handled by storied sci-fi writer Drew Karpyshyn, who revealed in an interview last year that there will be plenty of long-term choices and consequences for players to experience.

The most recent trailer, released back in December, gave us a closer look at the game's third-person shooter combat. It showed a firefight against a host of different enemies, while also offering a glimpse of some of the various abilities players will have access to in the game.
