Why fast-learning robots are wearing Meta glasses

www.computerworld.com

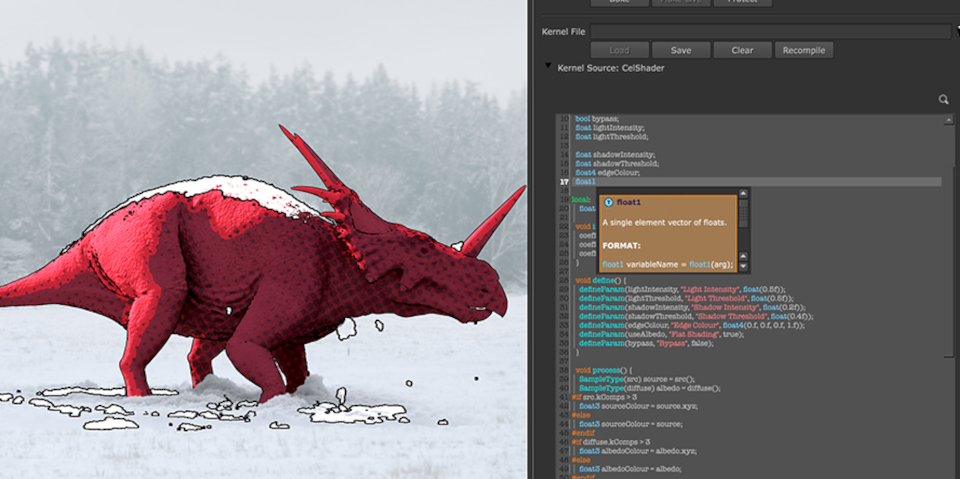

AI and robots need data, and lots of it. Companies that have millions of users have an advantage in this data collection, because they can use the data of their customers.

A well-known example is Google's reCAPTCHA v2 service, which I recently lambasted. reCAPTCHA v2 is a cloud-based service operated by Google that is supposed to stop bots from proceeding to a website (it doesn't). When users prove they're human by clicking on the boxes containing traffic lights, crosswalks, buses and other objects specified in the challenge, that information is used to train models for Waymo's self-driving cars and improve the accuracy of Google Maps. It's also used to improve vision capabilities used in Google Photos and image search algorithms.

Google isn't the only company that does this. Microsoft processes voice recordings from Teams and Cortana to refine speech models, for example.

Now Meta is using user data to train robots, sort of. Meta's augmented reality (AR) division and researchers at Georgia Institute of Technology have developed a framework called EgoMimic, which uses video feeds from smartglasses to train robots.

Regular robot imitation learning requires painstaking teleoperation of robotic arms: humans wearing special sensor-laden clothing and VR goggles perform the movements robots are being trained for, and the robot software learns how to do the task. It is a slow, expensive, and unscalable process.

EgoMimic uses Meta's Project Aria glasses to train robots.

Meta announced Project Aria in September 2020 as a research initiative to develop advanced AR glasses. The glasses have five cameras (including monochrome, RGB, and eye-tracking), Inertial Measurement Units (IMUs), microphones, and environmental sensors. The project also has privacy safeguards such as anonymization algorithms, a recording indicator LED, and a physical privacy switch, according to Meta.

The purpose of Aria is to enable applications in robotics, accessibility, and 3D scene reconstruction.
Meta rolled out the Aria Research Kit (ARK) on Oct. 9.

By using Project Aria glasses to record first-person video of humans performing tasks like shirt folding or packing groceries, Georgia Tech researchers built a dataset that's more than 40 times more "demonstration-rich" than equivalent robot-collected data.

The technology acts as a sophisticated translator between human and robotic movement. Using a mathematical technique called Gaussian normalization, the system maps the rotations of a human wrist to the precise joint angles of a robot arm, ensuring natural motions get converted into mechanical actions without dangerous exaggerations. This movement translation works alongside a shared visual understanding: both the human demonstrator's smartglasses and the robot's cameras feed into the same artificial intelligence program, creating common ground for interpreting objects and environments.

A critical safety layer called "action masking" prevents impossible maneuvers, functioning like an invisible fence that stops robots from attempting any biomechanically implausible actions they observe in humans.

The magic happens through the EgoMimic algorithm, which bridges the gap between human demonstration and robotic execution.

Here's the funny part: After collecting video data, researchers mount the same AR glasses onto a robot, effectively giving it eyes and sensor data that perceive the tasks from the exact same perspective as the human who demonstrated the task.

That's right. The research involves robots wearing glasses. (You can see what this looks like in the video published by Meta.)

The algorithm then translates the human movements from the videos into actionable instructions for the robot's joints and grippers. This approach reduces the need for extensive robot-specific training data.

Training by teaching

The approach taken by EgoMimic could revolutionize how robots are trained, moving beyond current methods that require physically guiding machines through each step.
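To make the Gaussian normalization and action masking ideas described above a little more concrete, here is a minimal Python sketch. It is an illustration only, not the EgoMimic implementation: the function names, the sample angles, and the joint limits are all hypothetical. It shows the general idea that rescaling human and robot measurements to zero mean and unit variance puts them on a shared scale, and that a simple mask can flag actions outside a robot's allowed range.

```python
from statistics import mean, pstdev


def gaussian_normalize(values):
    """Rescale numbers to zero mean and unit standard deviation (z-scores)."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # All values identical: nothing to scale, so return zeros.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def mask_actions(joint_angles, lower, upper):
    """Return True for each angle within [lower, upper], False otherwise."""
    return [lower <= angle <= upper for angle in joint_angles]


# Hypothetical sample data: human wrist rotations and robot joint angles
# recorded in different ranges (degrees).
human_wrist_deg = [10.0, 20.0, 30.0, 40.0, 50.0]
robot_joint_deg = [100.0, 110.0, 120.0, 130.0, 140.0]

# After normalization, both sequences live on the same unitless scale.
human_norm = gaussian_normalize(human_wrist_deg)
robot_norm = gaussian_normalize(robot_joint_deg)

# Hypothetical joint limits: flag commanded angles the robot cannot reach.
allowed = mask_actions([-30.0, 45.0, 200.0], lower=-90.0, upper=90.0)
```

In this toy example the two normalized sequences match even though the raw numbers differ by an offset, which is the sense in which normalization gives human and robot data a common footing. A real system would work with full 3D poses and learned models, not lists of single angles.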
The EgoMimic approach basically democratizes robot training, enabling small businesses, farmers and others who normally wouldn't even attempt robot training to do the training themselves.

(Note that Meta's and Georgia Tech's EgoMimic is different from the University of North Carolina at Charlotte's EgoMimic project, which uses first-person videos for training large vision-language models.)

EgoMimic is expected to be publicly demonstrated at the 2025 IEEE International Conference on Robotics and Automation in Atlanta, which begins May 19.

This general approach is likely to move from the research stage to the public stage, where multimodal AI processes video feeds from millions of users wearing smartglasses (which in the future will come standard with all the cameras and sensors in Project Aria glasses). AI will identify the user videos where the user is doing something (cooking, tending a garden, operating equipment) and make them available to robot training systems. This is basically how chatbots got their training data: billions of people live their lives and in the process generate content of every sort, which is then harvested for AI training.

The EgoMimic researchers didn't invent the concept of using consumer electronics to train robots. One pioneer in the field, a former healthcare-robot researcher named Dr. Sarah Zhang, has demonstrated 40% improvements in the speed of training healthcare robots using smartphones and digital cameras; they enable nurses to teach robots through gestures, voice commands, and real-time demonstrations instead of complicated programming.

This improved robot training is made possible by AI that can learn from fewer examples. A nurse might show a robot how to deliver medications twice, and the robot generalizes the task to handle variations like avoiding obstacles or adjusting schedules.
The robots also use sensors like depth-sensing cameras and motion detectors to interpret gestures and voice instructions.

It's easy to see how the Zhang approach, combined with the EgoMimic system using smartglasses and deployed at a massive scale, could dramatically enhance robot training.

Here's a scenario to demonstrate what might be possible with future smartglasses-based robot training. A small business owner who owns a restaurant can't keep up with delivery pizza orders. So, he or she buys a robot to make pizzas faster, puts on the special smartglasses while making pizzas, then simply puts the same glasses on the robot. The robot can then use that learning to take over the pizza-making process.

The revolution here wouldn't be robotic pizza making. In fact, robots are already being used for commercial pizzas. The revolution is that a pizza cook, not a robot scientist or developer, is training robots with his or her own proprietary and specific recipes. This could provide a small business owner with a huge advantage over buying a pre-programmed robot that performs a task generically and identically to other buyers of that particular robot.

You could see similar use cases in homes or factories. If you want a robot to perform a task, you simply teach it how to do that task by demonstrating it.

Of course, this real-world, practical use case is years away, and using smartglasses to train robots isn't something the public, or even technologists, are thinking about right now.

But smartglasses-based training is just one way robotics will be democratized and mainstreamed in the years ahead.
