uxdesign.cc

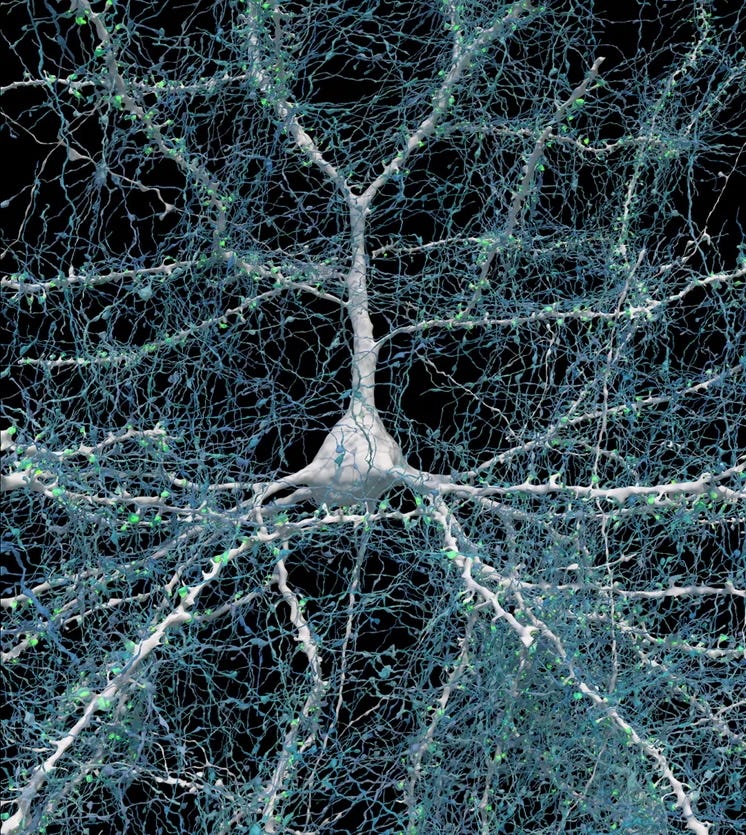

How design shapes, elevates, and (let's be honest) saves AI

Google and Harvard researchers use AI to reconstruct an intricate 3D view of the human brain, down to nearly every cell and connection. Who says science and art are different? Credit: Google Research & Lichtman Lab (Harvard University). Renderings by D. Berger (Harvard University).

In just a few years, we've gone from laughing at AI to fearing it. By now most of us have come to terms with the fact that, like it or not, it is here to stay. Designers in tech face a unique set of challenges in this evolving landscape. As design practitioners, we must be adaptable: to the needs of stakeholders and clients, to our teams and professional partners, and to rapid changes in the technological landscape in which we work. In this article I'll be looking at how UI/UX and Product Designers can create better AI-enabled products, the design strategies to consider when building intelligent interfaces, and how design-in-tech might prepare us for the future of this technology.

Can you tell I'm a Potterhead? Captured on Google Gemini (10.02.25)

AI-enabled products? What are those?

By AI-driven interfaces and/or AI-enabled products, I refer to mobile and web apps that employ large language models (LLMs) or other ML techniques as their primary technology. Intelligent Interfaces refers to the UI of these products.

In the last couple of years ".ai" has been everywhere, and it's not unexpected, given that LLMs are getting more efficacious and reliable with each passing week. AI-enabled products can serve users for productivity, design, sales, marketing, business development, and probably many more use cases. I don't know them all, but maybe you do! If not, just ask your favourite AI.

Something that underpins all of these applications is data. Data is the lifeblood of AI; it is what allows the product in question to function effectively. In turn, AI-led tech products continuously amplify the value of data by unlocking insights.
This makes data analytics not just a category in the list above, but also a fundamental component of AI-powered tools. [Edit after DeepSeek R1: AI now also amplifies the value of data by training itself!]

An earlier view of the ChatGPT UI. Quite flat and unfocused, in my opinion (Kashyap, 2023).

Where does UX get on (and off)?

AI without good UX is like a Ferrari on display with the steering wheel removed. Impressive, no doubt. A fine specimen. But sit in there and you'll feel a mix of disappointment, confusion and anger.

UX is the secret sauce that makes people actually want to use a product. Image: A beautifully designed Mobile Travel App with Voice AI by Maria K. on Behance

AI systems are complex by default. All the fancy neural networks, machine learning models, and data pipelines are impressive under the hood, but quite intimidating to the average person even on the surface. A well-designed interface abstracts the stupendous complexity that is AI, presenting users with more familiar and intuitive options for input and interaction. UX anticipates and accommodates the need for accessibility, usability and empathy in these otherwise cold systems. Data and code make AI smart and clever, but it's UX that makes you actually want to use it. It's what turns algorithms into tangible tools, creepy predictions into comforting conveniences, and raw computing power into refined experiences. Without UX, AI is just a jumble of intimidating potential; with it, AI becomes a partner, effortlessly upgrading the way we work and live. Think about that the next time you see "Your New Productivity Co-Pilot", or hopefully one with a more original name.

Photo by Tirza van Dijk on Unsplash

Designers, pay attention

Best practices! They make the design world go round. They keep us designers on our toes and make us mind our Ps and Qs. If you're a designer of any of the varieties mentioned earlier: Welcome, I shake you warmly by the hand.
These are the things you'll want to stay on top of to sharpen your Intelligent Interface, make users love using your product, and keep them from getting spooked and/or confused as soon as the AI does something unexpected. If you are a different variety of tech person: You're equally welcome to continue reading, to the benefit of all the designers you may work with.

ChatGPT now reveals its thought process in this expansion just above the output. Captured on ChatGPT by OpenAI (10.02.25)

1. XAI for days

The average user is going to be pretty skeptical, disoriented even, when they first start using your product. We want to make sure our users trust the product and its capabilities, feel confident using it, understand how it works (to a reasonable extent), and are able to interpret the output effectively. We don't want users to need a tutorial or crash course to use the product (unless it's really that specialised). We also don't want them to have to verify the results through Google, ChatGPT, or another, better tool. Following the design principles of explainable AI (XAI) is how we get there.

Explanation
AKA "So creepy, how did it know that?!"

The AI should be able to explain how it arrived at its output. The explanation should be accurate, comprehensive and easy to understand (Phillips et al., 2021). While creepiness isn't as much of a concern today as it was 2 years ago, explanation is still beneficial in creating confidence in the product, the brand and the technology. Providing appropriate evidence and reasoning for output helps users trust the efficacy of the tool without worrying about more technically dense aspects of what it's doing and how it's doing it.

Netflix's captions on its recommendations are a frequently seen instance of Explainable AI. Captured on Netflix (10.02.25).

Interpretation
AKA "That's cool, but WTF does it mean?!"

The answers and explanations provided should be easy to interpret, understand and act upon.
Your user may be an expert in the given subject matter, but in most cases it's best to assume that the user is not an expert and provide output that anyone can understand and move forward with.

ChatGPT tends to get repetitive when dealing with its limits of knowledge. A more appropriate way to approach this might be letting users know upfront that it might not be able to precisely answer a given question, followed by the information it can actually provide, with a suggestion for the next prompt. Captured on ChatGPT by OpenAI (10.02.25)

Knowledge limits
AKA "Turns out it AIn't all that smart" (haha geddit?)

When the AI reaches the edges of its domain of knowledge, it's best to communicate it effectively to the user. Follow-up questions are welcome. Don't be overconfident in your system, and don't encourage users to be overconfident either. Instead, help them to quickly understand what it can and cannot do.

Fairness and bias prevention
AKA "I think it thinks I'm a 35 y/o straight white man"

AI is only as good as the data it's trained on, and the data is often a hot mess, with biases and hallucinations just waiting to happen. Bias prevention and other ethical guidelines need to be baked into the system, taking the form of self checks and effective communication on potential ethical issues within the responses of your AI. It's easy for these biases to get politicised, which is likely to be irrelevant and antithetical to the purpose of your product.

Input fields are one of the most important zones for error management. This is not an AI interface of course, but we can learn from the way New Password UIs are designed. (Sunwall & Neusesser, 2024)

2. Error handling and management

Errors, whether from the system or the user, should be met with clear, helpful messages that guide users forward without friction. Your product likely won't have perfect outputs every time, and your users should feel comfortable with learning through failure without their workflow being adversely affected.
When the errors come creeping, it's only polite to be accountable for them, communicate the error in a way that's easy to understand, and provide options for resolution.

Error messaging may take the form of basic error indicators, tooltips with an action button, and/or manual control panels as a layer of redundancy (Cornianu, 2025). Subtle, well-placed animations can help bevel the experience. General UX best practices for error messages are important here: the error communication should be visible, proportional to the scale of the error, and follow accessibility guidelines. Effective UX writing also shines through. We don't want users to feel like they did something wrong (even if they have), or experience confusion about how to resolve an error. Well-designed error management protocols help users trust the system, build confidence as they use the product, and reduce overall friction in the experience. In the words of Roald Dahl, "smooth and oily as hair cream" (The Hitchhiker, 1977).

ChatGPT's feature to call upon any custom GPT into any chat might scream user-friendly, but the same doesn't apply to the outputs, which are not distinguished as an output of the custom GPT. A missed opportunity to improve usability and enrich experience, in my opinion. Captured on ChatGPT by OpenAI (10.02.25)

3. Usability, prioritised

Don't make your users think. You can achieve that by paying attention to usability, a cornerstone of the UI/UX practice. With AI-enabled products, we need to think about usability from both the engineering and the design side of things. An AI-enabled product is essentially a smart partner that proactively anticipates the needs of users and provides just the right amount of information and context where needed. That's the engineering bit. The role of designers is to ensure that the system as a whole is intuitive, efficient, satisfying, and easy to use. Interfaces must work to bridge the gap between fickle-minded humans and cold, data-driven systems.
The gold standard can be achieved when designers work closely and iteratively with engineers, paying attention to visual consistency, adhering to accessibility guidelines, writing effective UX copy, and even providing neat third-party integrations that can supercharge your users' workflow.

Many ChatGPT-based AI tools still use an input window to receive customisations and feedback from users. You can also influence output by telling it your desired temperature and top_p in the prompt, which sounds like a usability workaround to me. Modes of user input and feedback are an area that still has a lot of scope for research and improvement in terms of usability.

4. Feedback and inputs

So far, proactively getting feedback and inputs from users has allowed models to improve, adapt and gain credibility, and has given the user a sense of agency in the interaction (Chaturvedi, n.d.). Only a year ago, most AI-enabled products had a now-obsolete mode of user feedback baked into their system in the form of ratings or a thumbs up/down button for each answer. These products had largely been playing around in uncharted territory, but that territory is quickly becoming more charted. Very soon, models can be expected to move toward more self-sufficient operation, as demonstrated by DeepSeek's reinforcement learning and built-in Chain-of-Thought capabilities. This doesn't mean that user feedback and inputs are no longer needed. Far from it: they will continue to have an important place in interaction design for AI-enabled products for the foreseeable future. However, they will need to diversify and take different forms as we move toward more intuitive formats of interaction with AI-enabled tools. This is something both designers and engineers will need to stay on top of.

5. Iteration and collaboration

Designing for AI-enabled products differs from designing websites and apps in a pretty big, fundamental way: the outcomes and user journeys are uncertain.
Unlike traditional design, where every user flow can be meticulously planned if designers so wished, AI introduces a wildcard element that makes it impossible to map out every single scenario. Nobody can predict, list, or account for every potential type of output the model may give us. To foolproof this situation, designers and engineers must collaborate closely to guide the AI's potential outputs, keep things aligned with what users actually need, and provide output in the ways users expect. We will need to learn each other's disciplinary principles, needs and, to some extent, skills. Siloed operation is fairly detrimental to effective product design and development today. For the AI-enabled products we will be creating in the near future, it could spell failure.

Where are we headed with this?

Just as I was wrapping up this article, DeepSeek R1 was launched. In the span of about a week, the tech, AI and ML industries may have entered a new era. Time will tell, but I suspect we won't have long to wait. I've written about designers working with AI in the past, in an article that aged well but also became obsolete in barely a year. I looked back at my draft after the launch of DeepSeek R1 and realised that in the few weeks since I started writing this article, it already sounded a little outdated. Depending on when you're here, it may sound even more outdated.

Rich Holmes on Substack

Vibe coding is the new trend that's cropped up in product design (Holmes, 2025). When I first heard about it, it sounded like a way for people from other disciplines to get stuck straight into product design, and a way to promote learning. My view is that product designers will need to pay attention to the use cases of user experience, now more than ever. In the short term we might see torrents of new AI-enabled products that come into existence in a "throw stuff at the wall and see what sticks" manner; user experience learning curves will be steep here.
In the medium to long term, it provides insights on how we could refocus and refine the way we design AI-enabled products in a new age of tech.

A masterclass in how user and UX research can completely transform bad user experience, with some help from Natural Language Processing, Machine Learning, Computer Vision and similar technologies. Product Assembly Assistance by Multiple Owners on Behance.

A new layer of multimodal

Multimodal AI allows AI-enabled products to communicate with users in more natural ways. Usually, this means you can type in a text input, record a voice message or video, or upload media files. Theoretically speaking, this enables models to process all these forms of data in a way that seems more human-like. My personal view is that engineers and researchers cannot get complacent after developments they think will improve how easy a product is to use, when we just don't know for sure that they will in fact bring said improvement. Processing data in a human-like manner may not ensure better usability of a product that already runs on non-human intelligence, is relatively unknown in its workings, and is limited in its scope of interaction. Usability principles ask that the multimodality of these models extend beyond input possibilities, to also include output and interaction possibilities. There is a lot of scope for research in this area to understand what actually constitutes an intuitive AI interface, when AI is by default not an intuitive thing.

What I would like to see in the near future is a more visual and graphical mode of interaction that encourages more back-and-forth exchange with models before arriving at a comprehensive output that is self-initiated and self-evaluated by the model. Maybe it's just me, but plain old chat-based interaction with an unknown entity leaves something to be desired.
It feels like a stopgap MSN Messenger-esque format that's serving this purpose until we can figure out a better, more user-friendly way to get the best out of these powerful tools. And it's time to start figuring that out.

The Third Paradigm

We've been in the early stages of AI-enabled products, and the people who have been making them are usually not UX specialists. Jakob Nielsen speaks of a new paradigm of UI design, Intent-based Outcome Specification, where we specify the outcome we want rather than specifying what we want the computer to do. In doing so, we hand over all control in the execution of the intended outcome to the computer, creating a somewhat one-sided interaction that is handicapped by the capabilities of the technology. So far, the most commonly employed interaction style has been the chat interface, since it helped account for the usability issues posed by these products. And so, the Prompt Engineers were born to paper over the cracks and tickle ChatGPT in the right spot so it coughs up the right results (Nielsen, 2024).

As AI continues to improve (itself?), we can expect a shift towards more complex non-command interactions, where systems don't wait for you to click a button but anticipate your needs based on passive cues. We are right at the point where user research can step in, digging into existing user data to fine-tune these interactions, making AI-enabled products not just smarter but genuinely more usable.

Gmail's contextual smart replies, now powered by Gemini, are a good example of a non-command interface. Start typing your mail and Gmail hits you with suggestions to finish the mail in one tap. No commands needed; Gemini quietly observes and responds (Moon, 2024).

Non-command interfaces mark a fundamental shift from the old-school "I click, you respond" paradigm to systems that sense and react to user behavior without explicit commands.
Unlike traditional menus or GUIs that demand direct input, non-command interfaces are designed to quietly observe and adapt, blending seamlessly into the user's natural flow (Nielsen, 2019). However, there's still a lot to learn from the classic graphical user interface (GUI) playbook.

By combining the intent-based nature of AI interactions with the intuitive familiarity of GUIs (and some technical wizardry that I'm not qualified to elucidate), we can create systems that balance passive observation with user control. Imagine an interface that knows what you might want but still gives you the option to steer the ship when needed. It's about merging the best of both worlds: AI that feels like it's reading your mind but still respects your agency. I believe that a fusion of non-command design and GUI elements might be the key to pushing Intelligent Interfaces into their next evolutionary phase.

References

Chaturvedi, R. (n.d.). Design human-centered AI interfaces. Reforge. https://www.reforge.com/guides/design-human-centered-ai-interfaces#recap

Cornianu, B. (2025, January 30). Training AI with pure reinforcement learning: Insights from DeepSeek R1. Victory Square Partners. https://victorysquarepartners.com/training-ai-with-pure-reinforcement-learning-insights-from-deepseek-r1/

Holmes, R. (2025, March 6). Rich Holmes on Substack. Substack. Retrieved March 10, 2025, from https://substack.com/@richholmes/note/c-98472653

Kashyap, R. (2023, April). I Don't Know A Great Answer OR I Don't Know, A Great Answer. Qeios. https://doi.org/10.32388/DFE2XG.2 (Figure 4)

Lipiński, D. (2024, August 13). Designing AI interfaces: Challenges, trends & future prospects | Pragmatic Coders. Pragmatic Coders. https://www.pragmaticcoders.com/blog/designing-ai-interfaces-challenges-trends-and-future-prospects

Moon, M. (2024, September 27). Google launches Gemini's contextual smart replies in Gmail. Engadget.
https://www.engadget.com/ai/google-launches-geminis-contextual-smart-replies-in-gmail-140021232.html (thumbnail image)

Nielsen, J. (2019, May 4). Noncommand user interfaces. Nielsen Norman Group. https://www.nngroup.com/articles/noncommand/

Nielsen, J. (2024, October 24). AI: First new UI paradigm in 60 years. Nielsen Norman Group. https://www.nngroup.com/articles/ai-paradigm/

Phillips, P. J., Hahn, C. A., Fontana, P. C., Yates, A. N., Greene, K., Broniatowski, D. A., & Przybocki, M. A. (2021). Four principles of explainable artificial intelligence. https://doi.org/10.6028/nist.ir.8312

Sunwall, E., & Neusesser, T. (2024, August 2). Error-message guidelines. Nielsen Norman Group. https://www.nngroup.com/articles/error-message-guidelines/ (image: "Clear change password")

AI + UX: design for intelligent interfaces was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.