0 Reacties

0 aandelen

86 Views

Bedrijvengids

Bedrijvengids

-

Please log in to like, share and comment!

-

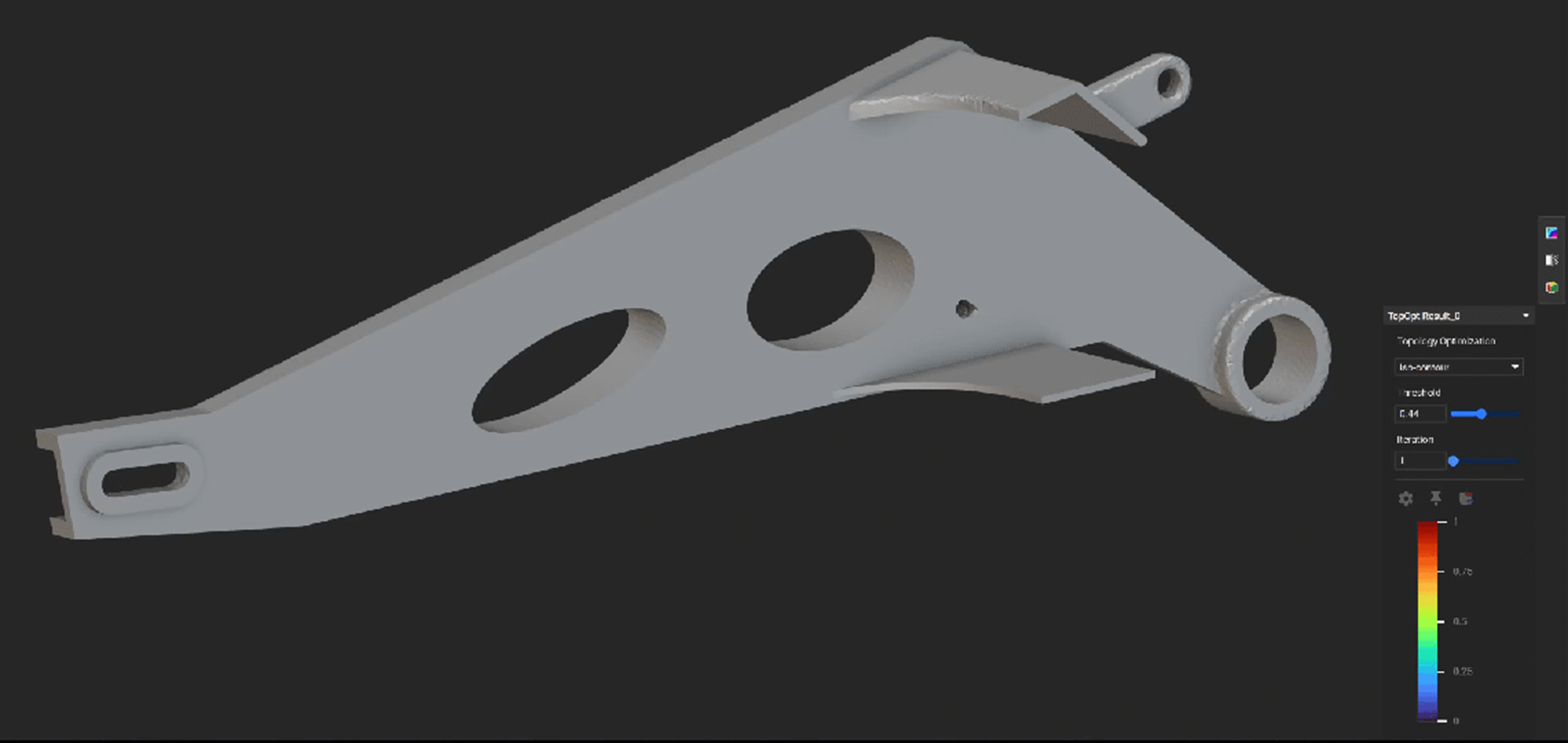

BLOGS.NVIDIA.COM

Beyond CAD: How nTop Uses AI and Accelerated Computing to Enhance Product Design

As a teenager, Bradley Rothenberg was obsessed with CAD: computer-aided design software. Before he turned 30, Rothenberg channeled that interest into building a startup, nTop, which today offers product developers across vastly different industries fast, highly iterative tools that help them model and create innovative, often deeply unorthodox designs.

One of Rothenberg’s key insights has been how closely iteration at scale and innovation correlate, especially in the design space. He also realized that by creating engineering software for GPUs rather than CPUs, which powered (and still power) virtually every CAD tool, nTop could tap into parallel processing algorithms and AI to offer designers fast, virtually unlimited iteration for any design project. The result: almost limitless opportunities for innovation.

Product designers of all stripes took note. A decade after its founding, nTop, a member of the NVIDIA Inception program for cutting-edge startups, now employs more than 100 people, primarily in New York City, where it’s headquartered, as well as in Germany, France and the U.K., with plans to grow another 10% by year’s end.

Its computational design tools autonomously iterate alongside designers, spitballing different virtual shapes and potential materials to arrive at products, or parts of a product, that are highly performant. It’s design trial and error at scale.

“As a designer, you frequently have all these competing goals and questions: If I make this change, will my design be too heavy? Will it be too thick?” Rothenberg said. “When making a change to the design, you want to see how that impacts performance, and nTop helps evaluate those performance changes in real time.”

Ocado used nTop software to redesign its 600 series robot to be far lighter and sturdier than earlier versions.

U.K.-based supermarket chain Ocado, which builds and deploys autonomous robots, is one of nTop’s biggest customers. Ocado differentiates itself from other large European grocery chains through its deep integration of autonomous robots and grocery picking. Its office-chair-sized robots speed around massive warehouses, approaching the size of eight American football fields, at around 20 mph, passing within a millimeter of one another as they pick and sort groceries in hive-like structures.

In early designs, Ocado’s robots often broke down or even caught fire. Their weight also meant Ocado had to build more robust, and more expensive, warehouses.

Using nTop’s software, Ocado’s robotics team quickly redesigned 16 critical parts in its robots, cutting the robot’s overall weight by two-thirds. Critically, the redesign took around a week. Earlier redesigns that didn’t use nTop’s tools took about four months.

Prototypes of the 600 series robot were printed out using 3D printers for fast-turn testing.

“Ocado created a more robust version of its robot that was an order of magnitude cheaper and faster,” Rothenberg said. “Its designers went through these rapid design cycles where they could press a button and the entire robot’s structure would be redesigned overnight using nTop, prepping it for testing the next day.”

The Ocado use case is typical of how designers use nTop’s tools. nTop software runs hundreds of simulations analyzing how different conditions might impact a design’s performance. Insights from those simulations are then fed back into the design algorithm, and the entire process restarts. Designers can easily tweak their designs based on the results, until the iterations land on an optimal result.

nTop has begun integrating AI models into its simulation workloads, and incorporating a customer’s bespoke design data into its iteration process.
nTop uses the NVIDIA Modulus framework, NVIDIA Omniverse platform and NVIDIA CUDA-X libraries to train and run inference on its AI models and to power its accelerated computing workloads.

“We have neural networks that can be trained on the geometry and physics of a company’s data,” Rothenberg said. “If a company has a specific way of engineering the structure of a car, it can construct that car in nTop, train up an AI in nTop and very quickly iterate through different versions of the car’s structure or any future car designs by accessing all the data the model is already trained on.”

nTop’s tools have wide applicability across industries. A Formula 1 design team used nTop to virtually model countless versions of heat sinks before choosing an unorthodox but highly performant sink for its car. Traditionally, heat sinks are made of small, uniform pieces of metal aligned side by side to maximize metal-air interaction and, therefore, heat exchange and cooling.

A heat sink designed for a Formula 1 race car offered 3x more surface area and was 25% lighter than previous sinks.

The engineers iterated with nTop on an undulating multilevel sink that maximized air-metal interaction even as it optimized aerodynamics, which is crucial for racing. The new heat sink achieved 3x the heat-transfer surface area of earlier models while cutting weight by 25%, delivering superior cooling performance and enhanced efficiency.

Going forward, nTop anticipates its implicit modeling tools will drive greater adoption from product designers who want to work with an iterative “partner” trained on their company’s proprietary data.

“We work with many different partners who develop designs, run a bunch of simulations using models and then optimize for the best results,” said Rothenberg. “The advances they’re making really speak for themselves.”

Learn more about nTop’s product design workflow and work with partners.


BLOG.PLAYSTATION.COM

Official PlayStation Podcast Episode 512: True Blue

Email us at PSPodcast@sony.com!

Subscribe via Apple Podcasts, Spotify, or download here

Hey, everybody! Sid, Kristen, Tim, and I are back this week to discuss Days Gone Remastered and the new hit indie game Blue Prince. We also get Tim’s top five most-played PlayStation games and discover what new titles everyone has been checking out.

Stuff We Talked About

- Next week’s release highlights:
  - Darkest Dungeon II | PS5, PS4
  - Flintlock: The Siege of Dawn | PS5
  - EA Sports College Football 25 | PS5
- Marathon: Gameplay Reveal Showcase on April 12
- Blue Prince: Behind-the-scenes with the devs
- PlayStation Portal: Cloud Game Streaming Beta features
- Days Gone Remastered: New Horde Assault mode deep dive
- Lost Records: Bloom & Rage: Creative Director interview all about Tape 2
- The Last of Us Complete: Now available on PS5
- Indiana Jones and the Great Circle: PS5 Pro features deep dive
- Assassin’s Creed Shadows: PS5 Pro features detailed by Bethesda

PlayStation Plus Game Catalog April

Extra and Premium

- Hogwarts Legacy | PS4, PS5
- Blue Prince | PS5
- Lost Records: Bloom & Rage – Tape 2 | PS5
- EA Sports PGA Tour | PS5
- Battlefield 1 | PS4
- PlateUp! | PS4, PS5

Premium

- Alone in the Dark 2 | PS4, PS5
- War of the Monsters | PS4, PS5

The Cast

- Sid Shuman, Senior Director of Content Communications, SIE
- Kristen Zitani, Senior Content Communications Specialist, SIE
- O’Dell Harmon Jr., Content Communications Specialist, SIE
- Tim Turi, Content Communications Manager, SIE

Thanks to Dormilón for our rad theme song and show music.

[Editor’s note: PSN game release dates are subject to change without notice. Game details are gathered from press releases from their individual publishers and/or ESRB rating descriptions.]


WWW.POLYGON.COM

Epic Universe’s rail-jumping Donkey Kong coaster is bumpy, but beautiful

Visitors to the sprawling theme-park ecosystem of Orlando, Florida will soon be able to ride what’s become one of the most talked-about roller coasters on the planet, without booking an overseas trip to Japan. Epic Universe, Universal’s brand new theme park, opens on May 22, 2025 with Donkey Kong Country, a near-replica of the area that opened last year at Universal Studios Japan.

Donkey Kong Country is the only “portal-within-a-portal” at Epic Universe, meaning that you access the area by first going through a Super Nintendo World warp pipe entrance, then through another large “portal” toward the back of the Mushroom Kingdom. Once through, visitors are transported into a lush, tropical landscape littered with barrels, palm trees, and piles of bananas. Guests can stop at the Bubbly Barrel, the area’s quick service eatery, for refreshments, like a Pineapple Banana Dole Whip. Music enhances the area’s ambience as various songs from the Donkey Kong games provide a banging bongo beat that reverberates throughout.

At the center is the imposing Golden Temple that houses the area’s only ride: the Donkey Kong-themed roller coaster, Mine-Cart Madness. On this ride, you board a four-seater vehicle resembling a mine cart that appears to “jump” across mangled tracks, replicating Donkey Kong Country’s infamous rail-jumping level.

The technology that brings this rail-jumping effect into real life is Universal’s patented “Boom coaster” concept. The ride vehicle is attached to an arm that extends far above the coaster’s real track, allowing ride designers to place fake minecart rails between riders and the coaster’s actual track. This unique ride system has had the roller coaster community buzzing ever since the patent was made public, as it really opens the floodgates for incredibly elaborate roller coaster theming.
The patent even demonstrates several possibilities in addition to the rail-jumping effect, like gliding on clouds or jumping over obstructions on false tracks.

The unfortunate tradeoff with this new technology is that it makes the ride a bit bumpy and shaky, especially considering it’s a brand new roller coaster that hasn’t officially opened to the public yet. Since the center of gravity of each vehicle is so high above the real coaster track, the slightest bounce, shake or jolt is accentuated and amplified, similar to how a skyscraper’s top floors sway more than its foundation when facing high winds. After riding it for myself, I would say Mine-Cart Madness is not a “headbanger” that’ll rattle you until you puke, but even employees warned of the ride’s roughness compared to the park’s other family roller coasters.

While the rail-jumping effects are uncanny, the ride is much more tame than you might expect. This is no white-knuckle roller coaster. Mine-Cart Madness is more of a family ride that relies on its special effects to provide its thrills. The first big moment of the ride involves an ascent to the ride’s highest point where your mine cart vehicle is shot out of a classic Donkey Kong barrel cannon. Despite the effects and fanfare, you’re not launched through the air at high speeds and instead slowly glide from the barrel cannon onto a set of tracks and into the ride’s first tunnel.

The ride never flips upside down and never sends riders backwards like many of the other Epic Universe coasters. While Universal hasn’t yet publicized the ride’s top speed, it felt slower than the park’s most kid-friendly coaster, Hiccup’s Wing Gliders, which has a top speed of 45 mph. (We reached out to Universal for specifics on Mine-Cart Madness’s top speed but did not hear back in time for publication.) The ride’s height requirement also reflects that this coaster is suitable for young children as it’s only 40 inches.
(Although, kids under 48 inches must be accompanied by a supervising adult.)

Despite its bumps, Mine-Cart Madness is still a stellar attraction due to its innovative technology and incredibly elaborate theming. It’s clear that Universal’s designers worked closely with Nintendo to ensure the ride stayed true to the iconic franchise for diehard fans, while also offering a world-class themed experience for families. It’s just a bit concerning that the ride experience is already feeling a bit rough before opening day, as no roller coaster gets smoother as it ages.

[Disclosure: Reporting for this article was conducted out of a press event held at Epic Universe in Orlando, Florida on April 5. Universal provided Polygon’s accommodations for the event. You can find additional information about Polygon’s ethics policy here.]


DESIGN-MILK.COM

F5: Gala Magriñá Talks New York City, Nature, Travel + More

Interior designer Gala Magriñá studied film and television at New York University, and after graduation she wanted a job that would allow her to continue to make movies to submit to festivals. She accepted a position with Italian clothing brand Diesel creating window and in-store displays. Magriñá eventually opened her own production agency and orchestrated events for a roster of high-profile companies like Jimmy Choo and Harper’s Bazaar.

Along the way she discovered Vedic meditation, which not only captured her interest, but also shaped her vision for interiors. “It was a game-changer, helping me reconnect with my purpose and rethink the impact I wanted to make in the work,” Magriñá says. “My passion for mindfulness led me to shift my focus from creating temporary spaces to creating permanent interiors, and that’s when my mission came into focus.”

Magriñá was ready for her next venture, and so in 2018 she launched her eponymous firm. The women-led firm is based in Westchester, New York, and its commercial and residential projects can be found in every corner of the globe. Magriñá strives to merge the cool and beautiful with the holistic and mindful. By deftly balancing a range of elements, each of her spaces inspires and elevates the energy of the individuals who inhabit it.

The designer considers herself fortunate to have mentors who offer support and guidance. She counts many interior designers as friends, and she’ll often strategize with them or bounce ideas around and get their feedback, which enhances her own process.

Even with a busy schedule, Magriñá always makes time to go within and center herself. “When I find my energy waning around 3 pm, I will stop working and meditate,” she notes. “It helps me reset and get ready for the evening.”

Today, Gala Magriñá joins us for Friday Five!

Photo: Gala Magriñá

1. Travel

Travel is my greatest source of inspiration.
I’ve been fortunate enough to explore quite a few different places, and I come back inspired and changed in some way. Some of my favorite cities to visit have been Rishikesh in India, Hong Kong, Dubai, and Tokyo because the cultures were so different from what I am used to. I find that stepping outside our routines to expose ourselves to new experiences broadens our perspectives in a way that staying home just can’t. When I go to a new city I like to make a list of museums, stores, hotels, and restaurants to visit and plan walkable routes to see all of them. Along the way I let myself get lost on different streets, which has led me to find some of the best spots! I love this balance of planning a bit while allowing some mixed-in spontaneity. This combo has never failed me.

Photo: Gala Magriñá

2. Art + Objects

Another way I stay inspired is by going to art and design fairs. I live in NYC where we’re lucky to have quite a few locally, but I also love Basel and Design Miami. These are some of my favorite days. I typically take the day off work, go with interior design friends/peers, and then we go to a fabulous lunch somewhere. While I’m there, I take tons of photos that I meticulously file away as inspiration for future projects. When you’re busy it’s easy to keep your head down and keep grinding, but as a creative, it’s important to take days off to find inspiration and share some good laughs with friends.

Photo: Gala Magriñá

3. Interior Architectural + Design Details

I am constantly observing how things have been built or designed, capturing photos wherever I go. When I’m traveling with family, while they’re busy taking photos of monuments or of the group, I’m snapping shots of door knobs and moldings and asking them to move out of the frame! I am particularly drawn to European design, and get tons of inspiration while there.
I find they do really simple, yet modern and cool things, and I love bringing some of those elements into my projects when I return to the States.

Photo: Gala Magriñá

4. Nature

Another magnificent source of inspiration and awe for me is nature. I try to take a walk outside in the park every day, no matter the weather. I find that walking in nature and just observing brings me peace of mind and, a lot of the time, some of my best ideas. The grandeur and perfection of nature always humbles me and makes all my problems seem small. So, immersing myself in nature is essential for my well-being and inner peace.

Photo: Gala Magriñá

5. NYC

Last but not least is my hometown of NYC. I’ve lived here since I was eight and every time I set foot in the city I am inspired all over again. The people, the fashion, and the pulse of the city all spark my creativity. Some of my best ideas come to me while I am walking from place to place with my headphones in and music on. Turning the corner and seeing the Chrysler Building bathed in sunlight never ceases to amaze and inspire me. I once got the chance to ask the performance artist Marina Abramovic why she loved living in NYC and she said: “The energy. Manhattan is built on bedrock which just shoots the energy right back up at you.” I wholeheartedly agree and feel it every time I step into the city.

Works by Gala Magriñá Design:

Photo: Joseph Kramm

DesignStudio Office in Long Island City

Gala Magriñá Design conceptualized a new 4,000-square-foot headquarters office for creative agency DesignStudio in Long Island City, New York that seamlessly marries the brand’s identity with the culture of their new home in New York City. Mid-century modern design meets industrial modern flair with curated spaces for everything from hybrid meeting rooms conducive to Zoom calls and a laid-back all-hands meeting space to areas for collaborative brainstorming or single-task focused work.
Both highly functional and beautiful, the office is truly made for the way that people work in a post-COVID world, where creative minds can convene, work and play.

Photo: Joseph Kramm

Irvington, NY Residence

This project was the ultimate design challenge: infusing a 6,100-square-foot colonial-style home from 1929 with our signature style of eclectic modern pop with minimal construction. Gala Magriñá Design honored the original architectural details, and painting the rooms a soothing off-white provided us with a blank canvas to work with. We included pops of complementary color with furniture and brought in personality and style via abstract art and meticulously curated objects and accessories.

Photo: Joseph Kramm

West 67th Street Apartment

This two-bedroom, two-bathroom apartment with sweeping views of the New York City skyline and Central Park was in need of a major overhaul. Gala Magriñá Design did a full remodel of the apartment, choosing soft tones, natural woods, and a variety of textures to create some major “sanctuary” vibes throughout and let NYC be the star of the show. Magriñá chose a dark blue grasscloth wallpaper for the primary bedroom that adds depth and texture to an otherwise airy and ethereal room. Light woods paired with Carrara marble give the room a warm, elegant feel. Plenty of plants introduce a dose of nature much needed for urban environments. In the guest bedroom, biomorphic shapes introduce other touches of nature to the space via wallpaper and a curved headboard for a fun and unexpected twist.

Photo: Christian Torres

Postlight 5th Avenue Office

Postlight approached Gala Magriñá Design to reimagine its 4,700-square-foot 5th Avenue office post-pandemic with a unique idea: create a Soho House-inspired space for both employees and clients. They wanted a space that was conducive to holding workshops, meetings and events for clients and the public as well as an inspirational workspace for their employees.
Magriñá responded with curated lounge areas – some conducive to group meetings and some for individual-focused work. Custom wallpaper inspired by the brand colors and logo as well as custom art reflect the company’s mission and values. A 24’ TV and logo wall for speakers to present from also serves as a presentation space as well as a great photo moment. Each meeting and conference room was assigned a color that we created a tone-on-tone room out of with matching paint and custom area rugs. The kitchen was designed to host events with a massive island and plenty of space around it for guests to mingle at all while respecting the original old school NewYork style architecture. Holding that no office space is complete without an Instagrammable moment, Magriñá created a photo-worthy reception with plants, bright pops of color and neon. Photo: Christian Torres Yousician NYC Office Yousician approached Gala Magriñá Design to design its first U.S. Office in NYC. Housed in a beautifully renovated loft-style building in the Meatpacking district, Magriñá brought the brand to life while honoring the existing architecture. The company’s signature green is incorporated in tone-on-tone rooms and used as a base color to coordinate pops of colors with. In lieu of an additional meeting room, one of the closed spaces was converted into a music-inspired hangout room complete with a bar and record player. The approach takes the concept of “Resimercial” to another level, allowing for a more fun and informal setting for team and client meetings. A communal table in the open office area creates yet another work and meeting destination for clients and employees alike and ensures there are plenty of different spaces to work and collaborate.0 Reacties 0 aandelen 112 Views

LIFEHACKER.COM
Microsoft Edge Recently Got a Speed Boost
For many, Microsoft Edge's only purpose is to download another browser entirely, like Chrome or Firefox. But this isn't Internet Explorer: Edge is a competent browser in its own right, whether you have a Mac or a PC. If you do use the browser, you might be pleasantly surprised by how snappy it feels after you update it, at least according to Microsoft.
Edge 134 and newer's performance gains
In a Thursday post on Windows Blogs, Microsoft confirmed that, starting with version 134, Microsoft Edge is a tad faster than previous iterations. In fact, Edge 134 is up to 9% faster when you run it through Speedometer 3.0, a benchmarking tool for web browsers. Microsoft says that when testing Edge version 134 on an Intel i5-13500 running Windows 11, the browser scores a 32.7 on the Speedometer 3.0 benchmark. The company compares it to Edge 133, which scored 29.6, and Edge 132, which scored 28.8.
Edge 132 (left), Edge 133 (center), and Edge 134 (right). Credit: Microsoft
In addition to this raw benchmark score, Microsoft touted some Edge 134 stats: The company says the latest version of the browser can navigate the web 1.7% faster; browser start is 2% faster; and web pages are 5% to 7% more responsive when compared to Edge 133. Microsoft based these results on its "field telemetry," which simulates how someone may use the web on various devices and sites. Microsoft didn't point to any specific changes in Edge 134 that could contribute to this performance boost. However, the company credits code changes to Edge and Chromium (the underlying engine that powers many web browsers, Chrome included) for the improved browser speeds. Microsoft does say that these improvements may differ depending on your setup, which should go without saying: Edge 134 may vary in performance between a PC running Windows 11 and a Mac running macOS Sequoia. Similarly, an older Intel Mac may perform slower than the latest Intel chip on PC, and it's not clear how well a Mac running Apple silicon compares to other devices. Whatever your setup, you can likely expect this latest version of Microsoft Edge to perform at least slightly better after the update.
There's a bit more to the story
If you're an avid Edge user, you might know that Edge 134 isn't actually the latest version. That would be Edge 135, which dropped earlier this month. Edge 134 came out on March 6, but Microsoft didn't acknowledge these performance gains until April 10. If you regularly keep Edge updated, you might have already been living with the speed boosts for over a month. However, not all of us are so on it: When checking my own version of Edge, I found it to still be on a version of Edge 133. Now, I'm on 135, and I never even had the chance to run 134! In my own very unofficial test running Speedometer 3.0, 135 only scored 24.4, plus or minus 0.67. That might sound low when compared to Microsoft's tests, but the latest version of Chrome scored 22.1, plus or minus 0.28, while Safari scored 21.5, plus or minus 0.92. I suspect my various extensions could affect the scores, but I did quit all other open applications to run the test (I'm on an M1 Mac). Performance gains aren't the only improvements to Edge this year. Back in February, Microsoft started testing a feature that can block fullscreen pop-ups. Shortly after, the company rolled out a RAM management feature for gamers that had been in beta testing for some time.

WWW.ENGADGET.COM
Sony has priced the Bravia Theater Bar 6 soundbar at $650
Sony just announced pricing for a number of upcoming products, including the Bravia Theater Bar 6 soundbar. This 3.1.2-channel system costs $650 for US consumers. It comes with a wireless subwoofer and is compatible with Dolby Atmos and DTS:X. The soundbar includes up-mixing tech to transform stereo content to 3D sound when immersive formats aren’t available. Dialogue gets an upgrade here, thanks to a dedicated center speaker and an AI-powered tool called Voice Zoom 3.
The beefier Bravia Theater System 6 is a 5.1-channel system that costs $770. This is an all-in-one setup that comes with a soundbar, subwoofer and rear speakers. The included soundbar is not the Bar 6, despite the similar name. However, it does support both Dolby Atmos and DTS:X. The subwoofer also features a slightly different design than the one that ships with the Bar 6. This system also offers stereo up-mixing to transform content into 3D audio via Sony’s proprietary algorithm.
The Bravia Theater Rear 8 speakers are compatible with the company’s soundbars and work with its 360 Spatial Sound Mapping tech to calibrate the system to the acoustics of a specific living room. The speakers cost $450. Having the pricing here is nice, but we still don’t have any official release dates. The current plan by Sony is to start selling these products later this spring.
This article originally appeared on Engadget at https://www.engadget.com/audio/speakers/sony-has-priced-the-bravia-theater-bar-6-soundbar-at-650-175824102.html?src=rss

WWW.TECHRADAR.COM
China admits behind closed doors it was involved in Volt Typhoon attacks
A series of cyberattacks targeting US infrastructure has been privately claimed by China.

WWW.CNBC.COM
UnitedHealth is making struggling doctors repay loans issued after last year's cyberattack
UnitedHealth is aggressively recouping the loans the company offered doctors following the 2024 cyberattack at its Change Healthcare unit.

WWW.FASTCOMPANY.COM
Report: 69% of workers say their risk of burnout is moderate to high
Many companies are acutely aware that a notable portion of their workers are struggling with burnout. The data makes that much clear: A Mercer report from last year found that 82% of workers said they were at risk of burnout. In another study conducted by the National Alliance on Mental Illness and Ipsos, over half of the workers surveyed said they had experienced burnout because of their job in 2023. Even so, it seems that employers may be underestimating just how widespread burnout really is among the workforce. A new report by the online marketplace Care.com indicates that while the vast majority of companies surveyed (84%) know that burnout can noticeably impact retention, they don’t fully understand the scope of the issue. While employers believed only about 45% of their workers were at risk of burnout, 69% of employees said they were at moderate to high risk of burnout. The Care.com report, which polled 600 human resources executives and 1,000 rank-and-file employees, points to caregiving responsibilities as a driving factor behind burnout. Of the respondents who pay for family care, most say caregiving puts them at higher risk of burnout, and that their stress in the workplace is amplified by the burden of managing work expectations alongside caregiving. For many working parents, particularly those in their forties, caregiving responsibilities can take the form of both eldercare and childcare.
What helps with burnout
What does seem to help, at least according to Care.com’s findings, are workplace benefits that help support caregivers, an offering that has become increasingly common at companies that have invested in more niche employee benefits. Mental health resources have become some of the most popular benefits, along with fertility and family-building support. A number of companies also provide caregiver support in the form of subsidies or backup care coverage, which can help families manage gaps in childcare and offset the exorbitant cost of care. Despite the popularity of these employee benefits, companies don’t always see high rates of utilization across the board. But according to the Care.com report, when employers provide benefits tailored to caregivers, there can be a clear correlation with retention and stronger performance.
The benefits that employees want
About one in five employees said that they have quit jobs over a lack of caregiver benefits, or that they would leave for a role that offers those benefits. Among employees who have access to those benefits, 45% say that their productivity increased while 40% report lower rates of absenteeism. The emotional impact, too, can be significant: Over half of employees said caregiver benefits boosted their quality of life, improved their work-life balance, and reduced stress levels. According to the report, it seems that employees and employers alike are largely aligned on the expectation that companies can and should help families manage the cost of caregiving. What’s not always clear is the form that assistance should take. Narrow benefits like on-site childcare may not be the right solution for all parents, or companies may find that many of their employees are actually looking for help with eldercare, which is why it’s important that employers take the time to understand what their workforce really needs.
