WWW.ENGADGET.COM
Skullcandy’s new Method 360 ANC earbuds have been tuned by Bose

Skullcandy just revealed a new pair of wireless earbuds, the Method 360 ANC. Interestingly, the company has teamed up with rival headphone-maker Bose for this product. The earbuds have been tuned by Bose and use eartips similar to the ones found with the company’s QuietComfort line. This is part of the pre-existing Sound by Bose platform, in which the company helps rivals with tuning and sound quality. The Motorola Moto Buds+ earbuds also include this feature.

Otherwise, the Method 360 ANC earbuds seem like a solid entry in a crowded field. They offer ANC, multipoint connections, custom EQ options, wear detection and other bells and whistles. The battery lasts around 10 hours per charge, rising to 40 hours with the charging case. However, this is with ANC turned off. A quick-charge feature advertises two hours of use from just ten minutes of charging. The charging case includes an O-ring, so it can be clipped to things, and is available in several colors.

The earbuds ship with multiple sets of eartips that also use technology by Bose to ensure a “secure, ultra-comfortable fit and superior noise isolation.” They integrate with the Skull-iQ app for making EQ adjustments, reconfiguring buttons and changing ANC modes.

The Method 360 ANC earbuds are available right now at an introductory price of $100. The cost will go up to $130 later on.

This article originally appeared on Engadget at https://www.engadget.com/audio/headphones/skullcandys-new-method-360-anc-earbuds-have-been-tuned-by-bose-230053911.html?src=rss


WWW.TECHRADAR.COM
Skullcandy's new ANC earbuds have sound by Bose, and look like Bose, but don't have Bose prices

True wireless earbuds with Bose sound, long battery life, low price, and a really cool case


WWW.CNBC.COM
Figma confidentially files for IPO more than a year after ditching Adobe deal

Figma, whose software is widely used among app designers, appears headed for the public market after abandoning a tie-up with Adobe in 2023.


BEFORESANDAFTERS.COM
Getting your VFX head around ACES 2.0

ILM’s Alex Fry is here to help, and explain how it was used on ‘Transformers One’.

At the recent SIGGRAPH Asia 2024 conference in Tokyo, Industrial Light & Magic senior color and imaging engineer Alex Fry gave a fascinating talk about his role in the development of ACES 2.0. ACES (the Academy Color Encoding System, from the Academy of Motion Picture Arts and Sciences) is an industry standard for managing color throughout the life cycle of theatrical motion picture, television, video game, and immersive storytelling projects.

The most substantial change in ACES 2.0 is related to a complete redesign of the rendering transform. Here, as the technical documentation on ACES notes, “Different deliverable outputs [now] ‘match’ better and making outputs to display setups other than the provided presets is intended to be user-driven. The rendering transforms are less likely to produce undesirable artifacts ‘out of the box’, which means less time can be spent fixing problematic images and more time making pictures look the way you want.”

It’s perhaps also worth pointing out here what the key design goals of ACES 2.0 were (again, from the technical documentation):

- Improve consistency of tone scale and provide an easy to use parameter to allow for outputs between preset dynamic ranges
- Minimize hue skews across exposure range in a region of same hue
- Unify for structural consistency across transform type
- Easy to use parameters to create outputs other than the presets
- Robust gamut mapping to improve harsh clipping artifacts
- Fill extents of output code value cube (where appropriate and expected)
- Invertible – not necessarily reversible, but Output > ACES > Output round-trip should be possible
- Accomplish all of the above while maintaining an acceptable “out-of-the box” rendering

At SIGGRAPH Asia, Fry described what it took amongst the ACES leadership and technical advisory groups to get to these new developments with ACES 2.0,
in particular, in reducing visual artifacts and ensuring consistency across SDR and HDR displays. Fry also discussed first hand how ACES 2.0 was implemented on ILM’s Transformers One (on which Fry was compositing supervisor). befores & afters got to sit down at the conference with Fry for a one-on-one discussion of ACES 2.0 and Transformers One.

[Image: Alex Fry at SIGGRAPH Asia 2024.]

b&a: You’re a member of the Technical Advisory Council for ACES. How did you first become intertwined with ACES?

Alex Fry: I had been generally interested in color as a Comp’er back when I was at Rising Sun Pictures. [Visual effects supervisor] Tim Crosbie taught me the basics and really helped me with a few leaps of understanding that I probably wouldn’t have arrived at by myself. I stayed interested in it, playing around with film LUTs and grading. DI and comp are very closely related, they’re jobs that are more similar than they are different. Then at Animal Logic, I became one of the few people there who was consistently interested in that sort of thing, and had opinions about it.

When ACES popped up as something you’d hear about (during The Great Gatsby era) I started to learn a bit more about it, and the concepts just resonated with me. I thought, yes, this is obviously where we should be going. ACES standardizes a bunch of things, both technically, conceptually, and the language around those things. At every different company I’ve been at, we’re all kind of doing the same thing, but we would call it slightly different things, the terminology would all be 10% different. So, if you’re having the same conversation with someone from another company, you might be mostly talking about the same thing, but you wouldn’t quite know 100%, unless you were incredibly explicit about it.

I was the first Comper, well, the only Comper for a long time, on The LEGO Movie, as it was in early development, and it seemed like ACES had some things that would help us out.
Before then I’d been on The Legend of the Guardians and there were certain things to do with the way the display transforms worked that were a little limiting. ACES looked like it answered a few of those questions. I reached out to Alex Forsythe at The Academy and had a couple of chats about it, and we made one or two little additions to make it work with the pipeline that we already had, and got those pieces prototyped so I could pitch it to the production, who bought in completely.

The production was a great success. Then, off the back of that, I did a run of talks at the Academy, NAB and SIGGRAPH. The LEGO Movie was one of the first mainstream studio productions that used ACES, so there was a lot of interest in exactly how we used it, and what it gave us. In the years after that, I was fairly active in a few of the ACES forums, making the case for people using it, and also just building little tools that made it a little easier to live with in the reality of production rather than the idealized version of production. Eventually, when the ACES 2.0 project came around, I was asked to co-chair the Output Transforms working group with Kevin Wheatley from Framestore.

[Image: Transformers One.]

b&a: As an overall thing, what does ACES 2.0 do better than ACES 1.0?

Alex Fry: The two main things that are better are better visual and perceptual matches between the SDR and HDR renderings of the transforms, and better behavior for extreme colors at the edge of gamut, or extreme colors that are heavily overexposed. Both of those areas are much improved. That need has really come out of people’s actual experiences working with the system and how it behaves with certain difficult images.

In terms of the original SDR/HDR renderings, HDR displays just weren’t functionally a thing in the real world when ACES was first developed.
HDR displays were a thing that were coming, and it was an area that the system attempted to address, but for the original developers, you didn’t have an actual HDR display on your desk 15 years ago. They were only at the Emerging Technologies booth at SIGGRAPH and places like that. They were pretty rare. They weren’t something that people were actively trying to grade movies through regularly. The Dolby PRM monitor came out around 2010, but unless you were actively grading and finishing DI content on one, you just didn’t have daily exposure.

It’s a different world now. Everyone’s phone is HDR. Most people’s TVs have HDR, even if they didn’t actively choose it. You don’t have to seek it out. So it was time to pursue better consistency of SDR and HDR in ACES 2.0.

b&a: How did the working group try and reach these new standards?

Alex Fry: A lot of tinkering. The thing is, a lot of the design requirements are somewhat contradictory and somewhat in conflict with, or at least in tension with, each other. Certain things in the requirements list we had heavily conflicted with the other ones, and you’ve got to kind of work out the right level of compromise. Some of them are just very nebulous, like it being ‘attractive’ and it being ‘neutral’. Those are very vague things. ‘Dealing well with out-of-gamut colors’ is very much in conflict with the ‘invertibility’ question.

Once you get into the nitty-gritty of it, there are parts of it that are science, but they’re a little bit vague as far as science goes. We’re trying to use mathematical models to approximate certain properties of the human visual system. Those things are a little nebulous at best. They’re variable between people, and not everyone agrees on certain things happening in reality. It’s very hard to even kind of settle those arguments because people’s own perceptions of what they see or how they describe what they see are tricky.
Also, some of these things are vague cultural conventions, like the issue we constantly have with hue skews. Fire, for example, comes up a lot as a tricky one to do. It’s a visual artifact that we have in the ACES 1.0 rendering, but a lot of people think it looks good for fire. You can point back to historical mediums like painting and say, ‘Yes, prior to cinema, prior to anyone taking a photograph, people would still often paint in the middle of a fire as being more yellow.’ It becomes very murky when you’ve got issues like that mixed in with color appearance models, which work under some circumstances but not these other circumstances.

[Image: Transformers One.]

b&a: How did ACES 2.0 impact development on Transformers One?

Alex Fry: The direct benefit was a better match between the HDR and SDR versions, and having the final film match everyone’s creative intent. Despite the proliferation of HDR screens in the consumer space, we can’t always work in HDR in a professional context. Linux is our primary desktop platform that we do all of our compositing, lighting and all texture painting on, and the OS has no meaningful support for HDR. There are various attempts to build HDR support into the window managers that are available on Linux, but it perpetually feels like it’s five years in the future, and it’s felt like that for quite a while. So, for now, with the platform that we’re working under, it’s just not a reality on the desktop. You add to that the fact that post-COVID, a large percentage of the industry is using some flavor of PCoIP, and those almost universally don’t support HDR.

That’s not to say we never look at the work in HDR. We do regular HDR reviews internally, but most of the people who are sitting down making creative decisions are doing it in SDR. Most of the reviews happen in SDR, whether it’s via something like SyncSketch or QuickTime review movies. If you’re in a theater, it’s SDR.
Most of the creative decisions get made in SDR, right up until the point of the DI session. Now, I can work directly in HDR but that’s because I’m more involved in the color side of it, and can make it work that way (local macOS). But, at scale, it’s all happening in SDR.

The upside of a more coherent match between SDR and HDR is that creative calls that you make in SDR carry through to the HDR version. And, remember, the HDR version is the definitive version of the film. The SDR version, even the theatrical SDR version, is a derivative of the HDR version. It’s not like the early days of HDR where, really, the definitive version of film was SDR and maybe you got a HDR version. These days, the HDR version is the version and then everything else shakes out of that one.

What this all meant on Transformers One was that we’d have production designer Jason Scheier doing concept art and paintings that are in SDR. They drive the look of the film. So the way those look has to translate well into our SDR comps, and then those creative decisions need to transition and hold true into the HDR domain.

A simple example of this is eyes and dynamic range dependent hue skews. D-16, who later becomes Megatron, his eyes tell the story of his change over time. At the start of the film, his eyes are yellow, which is both a reference to the Marvel Comics version of Megatron back in the day, and a visual separator between his more innocent stage, when he’s D-16, to when he transitions and turns into Megatron, and his eyes become red. With the original ACES 1.0 Display Transforms, reds skew towards yellow as you increase exposure, and do so at different rates between the SDR and HDR renderings. Not only do we want Megatron’s eyes to actually be red, regardless of exposure level, we want that red to be the same red between HDR and SDR. This would have been very difficult to manage in the original ACES transforms.
Obviously, Megatron’s eyes shifting from red to yellow as they get brighter isn’t just a visual artifact, it’s a story problem.

One thing to note is that Transformers One didn’t use the final version of ACES 2.0, as it was well into production long before we finished the algorithm. It used an earlier development version. At a certain point, we just had to go, ‘We’re locking on this one’, specifically version 28, if anyone’s interested.

[Image: Transformers One.]

b&a: Where can people see the full HDR release of Transformers One?

Alex Fry: Streaming is by far the easiest way, the HDR version is available on all the streaming platforms where the film is available. If you have a modern TV (ideally an OLED) it should be good to go, or any iPhone post iPhone X.

The film did get a HDR release in Dolby Vision in the US. It also got mastered for the Barco HDR system, which uses light steering tech. I think there were other places around the world that have emissive LED screens that showed the HDR master. None of those are going to have quite the same dynamic range as the home HDR version, though.

Internally, we were viewing it on a Sony A95L, which uses a Samsung QD OLED panel. It’s super-bright and super-punchy, with a massive color gamut. That kind of screen would be the best way to see it in HDR, basically a really good home OLED, as big as you can get. That said, nothing replaces the full theatrical experience. When push comes to shove, I’d still go for big and loud in a theatre, over small and bright at home.

b&a: Finally, for someone reading this who might be a compositor, how important do you think is understanding ACES in their role today?

Alex Fry: I’d say it’s pretty important to understand the abstraction between the pixels leaving the display, and the pixels you’re actually manipulating, whether it’s a full ACES pipeline that uses the ACES display transforms, or something else, that abstraction is key.
At big companies, we try and automate things to the point where you might not really know what’s going on, but if you get a proper grip on what’s happening under the hood, life is just easier. It’s kind of the same way that understanding how pixels get from many different cameras into your comp can help. That can all be automated away, but it’s better if you actually understand what’s happening there. A Compositor’s job is to understand the whole stack and make it work, that’s certainly what I’ve always tried to bring to the job.

You can find out more about ACES at https://acescentral.com/.

The post Getting your VFX head around ACES 2.0 appeared first on befores & afters.
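
The exposure-dependent hue skew Fry describes for Megatron’s eyes can be illustrated with a toy example. The sketch below is not the ACES 1.0 or ACES 2.0 algorithm: it assumes a simple `x / (1 + x)` tone curve, a made-up slightly orange scene red, and HSV hue as a rough stand-in for perceived hue. It compares applying the curve to each RGB channel independently (where the red channel saturates first and the hue drifts toward yellow) with one simple ratio-preserving alternative (where the hue stays put). All function and variable names are hypothetical.

```python
import colorsys


def tone_curve(x):
    """Toy tone curve (assumption): compresses [0, inf) into [0, 1)."""
    return x / (1.0 + x)


def hue_degrees(rgb):
    """HSV hue in degrees; 0 is red, 60 is yellow."""
    h, _s, _v = colorsys.rgb_to_hsv(*rgb)
    return h * 360.0


def render_per_channel(rgb, stops):
    """Apply the tone curve to each channel independently."""
    gain = 2.0 ** stops
    return tuple(tone_curve(c * gain) for c in rgb)


def render_ratio_preserving(rgb, stops):
    """Tone-map only the peak channel and scale the others by the same factor."""
    gain = 2.0 ** stops
    exposed = [c * gain for c in rgb]
    peak = max(exposed)
    scale = tone_curve(peak) / peak
    return tuple(c * scale for c in exposed)


# Hypothetical scene-linear red with a little green in it.
SCENE_RED = (1.0, 0.1, 0.02)
STOPS = (0, 2, 4, 6)

scene_hue = hue_degrees(SCENE_RED)
per_channel_hues = [hue_degrees(render_per_channel(SCENE_RED, s)) for s in STOPS]
preserved_hues = [hue_degrees(render_ratio_preserving(SCENE_RED, s)) for s in STOPS]

for s, skewed, kept in zip(STOPS, per_channel_hues, preserved_hues):
    print(f"+{s} stops: per-channel hue {skewed:5.1f} deg, ratio-preserving hue {kept:5.1f} deg")
```

In this toy setup the per-channel hue climbs steadily from red toward yellow as exposure increases, while the ratio-preserving version keeps the scene hue. A different display peak would change how fast the per-channel drift happens, which is the kind of SDR/HDR mismatch the interview refers to.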


WWW.FASTCOMPANY.COM
How to lead humans in the age of AI

The Fast Company Impact Council is an invitation-only membership community of leaders, experts, executives, and entrepreneurs who share their insights with our audience. Members pay annual dues for access to peer learning, thought leadership opportunities, events and more.

Disruption has become our new workplace reality. For managers, navigating change is an everyday responsibility, not an occasional one. Gallup reports that 72% of employees recently experienced workplace disruptions, and nearly a third of leaders experienced extensive disruptions. Today, no disruption is as prevalent as the rise of artificial intelligence. Yet, as sophisticated as AI might become, the key to successfully leading your team through change does not lie in smarter tech, but rather in fostering the fundamental human skills that AI will never be capable of delivering.

The human role

Quiet the noise around AI and you will find the simple truth that the most crucial workplace capabilities remain deeply human. According to the World Economic Forum’s Future of Jobs Report 2025, essential skills like resilience, agility, creativity, empathy, active listening, and curiosity are far more valuable than technical skills. These skills may be commonly referred to as “soft,” but in the age of AI, they are not just feel-good assets reserved for your personality hires. The future of work hinges on how well your teams adapt, connect, and perform together as humans.

Of course, none of this should be surprising. Good leaders understand the importance of human-centered skills. Yet, there remains a significant gap between what we value and what we actively build in our people. Deloitte’s 2025 Human Capital Trends Report says that 71% of managers and 76% of HR executives believe prioritizing human capabilities like emotional intelligence, resilience, and curiosity is “very” or “critically” important.
This human skills gap is even more urgent when Gen Z is factored in. They entered the workforce aligned with a shift to remote and hybrid environments, resulting in fewer opportunities to hone interpersonal skills through real-life interactions. This is not a critique of an entire generation, but rather an acknowledgment of a broad workplace challenge. And Gen Z is not alone in needing to strengthen communication across generational divides, but that is a topic for another day. Adding fuel to the fire are increased workloads, job insecurity, and economic stresses. When we combine these pressures with underdeveloped human skills, we see the predictable outcomes: disengagement, confusion, and last year’s buzzword, quiet quitting. If leaders are not proactively developing their team’s human capabilities, they leave them unprepared to navigate exactly the changes they are expected to embrace. Find comfort in discomfort So what should leaders do? The answer is simple, but the practice is challenging. Leaders must embrace their inner improviser. Yes, improvisation, like what you have watched on Whose Line Is It Anyway? Or the awkward performance your college roommate invited you to in that obscure college lounge. The skills of an improviser are a proven method for striving amidst uncertainty. Decades of experience at Second City Works and studies published by The Behavioral Scientist confirm the principles of improv equip us to handle change with agility, empathy, and resilience. A study involving 55 improv classes, including several facilitated by The Second City, revealed a powerful truth. Participants who intentionally sought out discomfort developed sharper focus, took bolder creative risks, and reported greater confidence and improved communication skills. The lesson? Discomfort is not the problem. It is the pathway forward. Leaders must model this openly. 
Normalize statements like, “This feels awkward, but we’ll navigate it together.” When your team sees discomfort as an opportunity to learn rather than a flaw to fear, they will follow your example. Encourage authentic curiosity Amid constant change, we crave clear answers. But sometimes rushing toward the first “right answer” closes the door to innovation and possibility. Instead, leaders should practice authentic curiosity. Ask your team, “What else could be true?” Welcome “I don’t know” moments. Create psychological safety so new ideas can surface without judgment. Curiosity keeps your teams adaptable. And according to the World Economic Forum, it remains one of the most valuable capabilities leaders can nurture. Make listening the cultural norm We talk a lot about the importance of listening, but few teams actually practice it consistently. Make listening intentional and visible. Respond with the phrase, “So what I’m hearing is,” followed by paraphrasing what you heard. Pose thoughtful questions that indicate your priority is understanding, not just replying. Consciously build pauses into conversations, especially during tense or critical discussions. When team members feel heard, they are more willing to collaborate, innovate, and commit to their teams. Listening is not simply polite. It is strategic and transformative. Disruptions will not slow down. Innovative technologies will continue to emerge. New directives will always appear. Priorities will shift rapidly. But leaders who want to guide teams who thrive, not just survive, must invest in their people first. An improvisor’s skills are worth cultivating. Because, the future of work does not need smarter tools, but it will demand more empowered, resilient humans, and the improvisational leader who inspired them. Tyler Dean Kempf is creative director of Second City Works.0 Commentaires 0 Parts 15 Vue

WWW.YANKODESIGN.COM
This Minimalist Mountain Retreat Harmonizes With Norway’s Natural Beauty
Porthole-style windows accentuate the gable ends of this timber mountain cabin in Norway, designed by architect Quentin Desfarges. Named Timber Nest, the home is situated near Rødberg, on a sloping, forested site with views of the Hardangervidda National Park mountains. Considering the site’s exposure and frequent heavy snowfall, Oslo-based Desfarges crafted a minimalist cabin that harmonizes with the landscape, inspired by the region’s traditional wooden cabins.

“Timber Nest does not merely occupy its site; it coexists with it,” said Desfarges. “Every decision was made to preserve the existing vegetation on the site, allowing the surrounding birch forest to remain undisturbed. Crafted entirely from wood, the cabin embraces time-honored Nordic building traditions while celebrating the tactile beauty of exposed timber.”

Designer: Quentin Desfarges

The cabin’s interior is divided into two distinct halves. To the south, a double-height living, dining, and kitchen area is complemented by a mezzanine. To the north, bedrooms and bathrooms are positioned along a central corridor. Large circular windows at both ends illuminate the living space and the loft-like upper bedrooms, while also framing views of the surrounding trees. This design not only maximizes natural light but also connects the interior with the scenic outdoors, maintaining a seamless integration with the environment. The layout ensures functionality and elegance, enhancing the cabin’s minimalist aesthetic.

“The cabin endures the harsh Nordic winters – at times nearly swallowed by snow – yet remains open and light-filled, always in conversation with the shifting landscape,” said Desfarges.

The home’s design prominently features an exposed wooden structure and cladding, highlighting the natural beauty of the materials used. Over time, the untreated pine planks on the exterior are intended to weather naturally, gradually transforming into a distinguished silver-grey hue that complements the surrounding landscape.

Inside, the interiors are kept minimal to emphasize the simplicity and elegance of the design. To introduce subtle moments of color and interest, green-stained accents have been strategically incorporated. The kitchen counter, a key focal point within the space, along with the stairs, features this distinct green stain. These carefully chosen accents add vibrancy and contrast, creating visual interest while maintaining the overall sleek and minimal tone of the cabin’s interior design.

“The palette is drawn directly from the site itself – solid pine and birch, reflecting the forest outside; a concrete floor, grounding the structure in the mountain’s geology,” said Desfarges. “These materials are left in their raw, honest state, allowing them to age gracefully in dialogue with the seasons. The occasional touch of green-tinted wood punctuates the interior, creating sculptural moments that echo the landscape’s fleeting bursts of color,” he concluded.

The post This Minimalist Mountain Retreat Harmonizes With Norway’s Natural Beauty first appeared on Yanko Design.

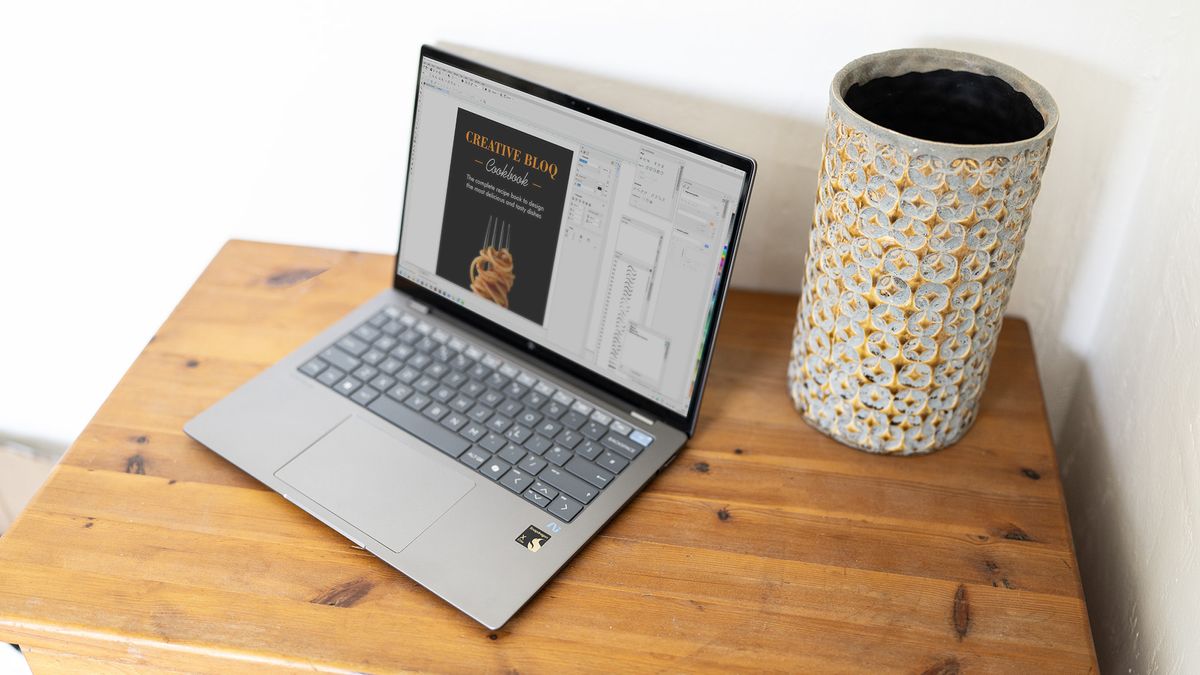

WWW.CREATIVEBLOQ.COM
I just tested the Duracell Bunny of laptops – if you need a long battery, it doesn't get better than this
The HP Omnibook X 14 looks like an ordinary laptop, but this Snapdragon-powered machine is an endurance runner.

WWW.WIRED.COM
Trans Musicians Are Canceling US Tour Dates Due to Trump’s Gender ID Rules
Two trans Canadian artists are pulling out of US concerts as Donald Trump’s border crackdown sparks ‘panic.’

WWW.NYTIMES.COM
Nvidia Says U.S. Will Restrict Sales of More of Its A.I. Chips to China
The restrictions are the first major limits the Trump administration has put on semiconductor sales outside the United States, toughening rules created by the Biden administration.
