ARSTECHNICA.COM
Microsoft's "1-bit" AI model runs on a CPU only, while matching larger systems
Future AI might not need supercomputers thanks to models like BitNet b1.58 2B4T.
Kyle Orland, Apr 18, 2025, 3:46 pm

[Image: Can big AI models get by with "smaller" weights? Credit: Getty Images]

When it comes to actually storing the numerical weights that power a large language model's underlying neural network, most modern AI models rely on the precision of 16- or 32-bit floating-point numbers. But that level of precision can come at the cost of large memory footprints (in the hundreds of gigabytes for the largest models) and significant processing resources needed for the complex matrix multiplication used when responding to prompts.

Now, researchers at Microsoft's General Artificial Intelligence group have released a new neural network model that works with just three distinct weight values: -1, 0, or 1. The model builds on previous work Microsoft Research published in 2023. Its "ternary" architecture reduces overall complexity and offers "substantial advantages in computational efficiency," the researchers write, which allows it to run effectively on a simple desktop CPU. And despite the massive reduction in weight precision, the researchers claim that the model "can achieve performance comparable to leading open-weight, full-precision models of similar size across a wide range of tasks."

Watching your weights

The idea of simplifying model weights isn't a completely new one in AI research. For years, researchers have been experimenting with quantization techniques that squeeze their neural network weights into smaller memory envelopes.
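The Python sketch below makes the "-1, 0, or 1" idea concrete. It shows absmean-style ternary quantization, which as we understand it is the mapping described in Microsoft's earlier BitNet b1.58 research. Each weight is divided by the mean absolute weight, then rounded and clipped to [-1, 1], and one floating-point scale is kept for reconstruction.

The helper names `absmean_ternary` and `dequantize`, and the `eps` value, are illustrative assumptions. The sketch shows only the mapping itself. A natively trained model applies this kind of quantization during training rather than once afterward.

```python
def absmean_ternary(weights, eps=1e-5):
    """Map float weights to {-1, 0, +1} plus one shared float scale.

    Hypothetical helper for illustration, not Microsoft's implementation:
    the scale (gamma) is the mean absolute weight; each weight is divided
    by it, rounded to the nearest integer, and clipped to [-1, 1].
    """
    gamma = sum(abs(w) for w in weights) / len(weights)
    ternary = [max(-1, min(1, round(w / (gamma + eps)))) for w in weights]
    return ternary, gamma


def dequantize(ternary, gamma):
    """Approximate the original weights as gamma * {-1, 0, +1}."""
    return [gamma * t for t in ternary]


if __name__ == "__main__":
    weights = [0.1, -2.0, 0.6, 0.0]
    ternary, gamma = absmean_ternary(weights)
    print(ternary)          # [0, -1, 1, 0]
    print(round(gamma, 3))  # 0.675
    # Large weights collapse to +/-gamma and small ones to exactly zero.
    print([round(w, 3) for w in dequantize(ternary, gamma)])
```

Note how the -2.0 weight is clipped to -1. Rounding and clipping trade precision for a tiny, fixed set of values, and this loss is why post-training quantization can hurt accuracy.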
In recent years, the most extreme quantization efforts have focused on so-called "BitNets" that represent each weight in a single bit (representing +1 or -1).

[Image gallery, BitNet demo: "BitNet's dad jokes aren't exactly original, but they are sufficiently groan-worthy." / "This feels a bit too respectful and cogent for a 2000s-era CPU debate." / "A concise answer that doesn't offer a whole lot of context."]

The new BitNet b1.58 model doesn't go quite that far. Its ternary system is referred to as "1.58-bit," since that is the amount of information carried by a value that can take one of three states (log(3)/log(2), or about 1.58 bits). But it sets itself apart from previous research by being "the first open-source, native 1-bit LLM trained at scale," the researchers write. The result is a 2-billion-parameter model trained on a dataset of 4 trillion tokens.

The "native" bit is key there. Many previous quantization efforts simply attempted after-the-fact size reductions on pre-existing models trained at "full precision" using those large floating-point values. That kind of post-training quantization can lead to "significant performance degradation" compared to the models they're based on, the researchers write. Other natively trained BitNet models, meanwhile, have been trained at smaller scales that "may not yet match the capabilities of larger, full-precision counterparts," they write.

Does size matter?

Memory requirements are the most obvious advantage of reducing the complexity of a model's internal weights.
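How large is that memory advantage? Some back-of-the-envelope arithmetic in Python gives a rough answer, and it does three things:

- It computes log2(3), the "1.58-bit" figure.
- It shows one simple way to get close to that density in practice. Five ternary values fit in one byte because 3^5 = 243 ≤ 256, which works out to 1.6 bits per weight.
- It estimates weight storage for roughly 2 billion weights, as the "2B" in the model's name indicates.

The base-3 packing is an illustrative assumption, not necessarily how Microsoft's kernels lay out weights. The estimate also ignores embeddings, activations, and any layers kept at higher precision.

```python
import math

# Information content of one value drawn from {-1, 0, +1}.
BITS_PER_TERNARY = math.log2(3)  # about 1.585


def pack_five(trits):
    """Pack five values from {-1, 0, +1} into one integer in 0..242 (one byte).

    Illustrative base-3 encoding (an assumption, not BitNet's format):
    shift each value to a digit 0..2, first value least significant.
    """
    if len(trits) != 5:
        raise ValueError("expected exactly five ternary values")
    code = 0
    for t in reversed(trits):
        code = code * 3 + (t + 1)
    return code


def unpack_five(code):
    """Inverse of pack_five: recover the five ternary values."""
    trits = []
    for _ in range(5):
        trits.append(code % 3 - 1)
        code //= 3
    return trits


def weight_storage_gb(n_params, bits_per_weight):
    """Decimal gigabytes needed to store n_params weights at a given width."""
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    n = 2_000_000_000  # roughly 2 billion weights (assumed round number)
    print(f"log2(3) = {BITS_PER_TERNARY:.3f} bits")
    print(f"ternary, ideal:  {weight_storage_gb(n, BITS_PER_TERNARY):.2f} GB")
    print(f"ternary, 5/byte: {weight_storage_gb(n, 8 / 5):.2f} GB")
    print(f"16-bit floats:   {weight_storage_gb(n, 16):.2f} GB")
```

Both ternary estimates come out near 0.4 GB, versus about 4 GB at 16 bits per weight. That is in the same range as the memory figures the researchers report for the model and its full-precision peers.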
The BitNet b1.58 model can run using just 0.4GB of memory, compared to anywhere from 2 to 5GB for other open-weight models of roughly the same parameter size.

But the simplified weighting system also leads to more efficient operation at inference time. Its internal operations rely much more on simple addition instructions and less on computationally costly multiplication instructions. Those efficiency improvements mean BitNet b1.58 uses anywhere from 85 to 96 percent less energy than similar full-precision models, the researchers estimate.

[Video: A demo of BitNet b1.58 running at speed on an Apple M2 CPU.]

By using a highly optimized kernel designed specifically for the BitNet architecture, the BitNet b1.58 model can also run multiple times faster than similar models running on a standard full-precision transformer. The system is efficient enough to reach "speeds comparable to human reading (5-7 tokens per second)" using a single CPU, the researchers write. You can download and run those optimized kernels yourself on a number of ARM and x86 CPUs, or try the model in a web demo.

Crucially, the researchers say these improvements don't come at the cost of performance on various benchmarks testing reasoning, math, and "knowledge" capabilities, although that claim has yet to be verified independently. Averaging the results on several common benchmarks, the researchers found that BitNet "achieves capabilities nearly on par with leading models in its size class while offering dramatically improved efficiency."

[Chart: Despite its smaller memory footprint, BitNet still performs similarly to "full precision" weighted models on many benchmarks.]

Despite the apparent success of this "proof of concept" BitNet model, the researchers write that they don't quite understand why the model works as well as it does with such simplified weighting. "Delving deeper into the theoretical underpinnings of why 1-bit training at scale is effective remains an open area," they write. And more research is still needed to get these BitNet models to compete with the overall size and context window "memory" of today's largest models.

Still, this new research shows a potential alternative approach for AI models that are facing spiraling hardware and energy costs from running on expensive and powerful GPUs. It's possible that today's "full precision" models are like muscle cars that waste a lot of energy and effort when the equivalent of a nice subcompact could deliver similar results.

Kyle Orland, Senior Gaming Editor
Kyle Orland has been the Senior Gaming Editor at Ars Technica since 2012, writing primarily about the business, tech, and culture behind video games. He has journalism and computer science degrees from the University of Maryland. He once wrote a whole book about Minesweeper.
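A minimal matrix-vector product shows why ternary weights let inference lean on addition rather than multiplication:

- A weight of +1 adds an input.
- A weight of -1 subtracts it.
- A weight of 0 skips it.

That leaves one multiplication by the shared scale per output. The helper names in the Python sketch below are hypothetical, and activations stay ordinary floats for simplicity. An optimized kernel such as Microsoft's would use packed weights and vectorized instructions instead of this plain loop.

```python
def ternary_matvec(rows, gamma, x):
    """Compute gamma * (W @ x) where every entry of W is -1, 0, or +1.

    Hypothetical helper for illustration: the inner loop uses only
    additions and subtractions; the single multiplication per output
    row applies the shared scale gamma.
    """
    out = []
    for row in rows:
        acc = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi
            elif w == -1:
                acc -= xi
            # w == 0 contributes nothing, so it is skipped entirely
        out.append(gamma * acc)
    return out


def dense_matvec(rows, x):
    """Reference full-precision product using ordinary multiply-adds."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in rows]


if __name__ == "__main__":
    W = [[1, 0, -1],
         [-1, 1, 1]]
    gamma = 0.5
    x = [2.0, 3.0, 4.0]
    print(ternary_matvec(W, gamma, x))  # [-1.0, 2.5]
    scaled = [[gamma * w for w in row] for row in W]
    print(dense_matvec(scaled, x))      # [-1.0, 2.5]
```

Both paths give the same result, but the ternary version never multiplies a weight by an input. The zero value plausibly adds a further saving, since it lets the model drop an input entirely, which a pure +1/-1 BitNet cannot do.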

WWW.NEWSCIENTIST.COM
New colour seen for the first time by tricking the eyes
Our retinas could be made to see a vivid shade of blue-green
[Image credit: MikeCS images/Alamy]

Five people have witnessed an intense green-blue colour that has never been seen by humans before, thanks to a device that might one day enable those with a type of colour blindness to experience typical vision.

We perceive colour via the retina at the back of the eye. It typically contains three types of light-detecting cone cells, called S, M and L, which absorb a range of blue, green or red light, respectively, and then send signals to the brain. When we see anything at the blue-green end of the visible spectrum, at least two types of cone cells are activated at the same time because there is some overlap in the wavelengths they detect.

Ren Ng at the University of California, Berkeley, wondered what colour people would perceive if only one type of cone was activated in this part of the spectrum. He was inspired by a device called Oz, developed by other researchers studying how the eye works, which uses a laser capable of stimulating single cone cells.

Ng and his colleagues, including the scientists who built Oz, upgraded the device so that it could deliver light to a small square patch of about 1000 cone cells in the retina. Stimulating a single cone cell doesn't generate enough of a signal to induce colour perception, says Ng.

The researchers tested the upgraded version on five people. They stimulated only the M cones in this small area of one eye while the other eye was closed. The participants said they saw a blue-green colour, which the researchers have called olo, that was more intense than any they had seen before. "It's hard to describe; it's very brilliant," says Ng, who has also seen olo.

To verify these results, the participants took a colour-matching test.
Each viewed olo and a second colour that they could tune via a dial to any shade on the standard visible spectrum, until it matched olo as closely as possible. They all dialled until it was an intense teal colour, which supports the idea that they saw olo as they described it. In another part of the experiment, the participants used a dial to add white light to either olo or a vivid teal until the two matched even more closely. All the participants diluted olo, which indicates that it was the more intense of the two shades.

Andrew Stockman at University College London describes the research as "kind of fun", but with potential medical implications. For instance, the device could one day enable people with red-green colour blindness, who find it hard to distinguish between these colours, to experience typical vision, he says. That is because the condition is sometimes caused by M and L cones responding to very similar wavelengths of light. Stimulating one over the other could enable people to see a wider range of shades, though this needs to be tested in trials, says Stockman.

Journal reference: Science Advances, DOI: 10.1126/sciadv.adu1052

WWW.BUSINESSINSIDER.COM
The IRS had 3 different bosses during the week taxes were due
[Image: Michael Faulkender, the deputy Treasury secretary, was appointed the acting commissioner of the IRS on Friday. Tom Williams/CQ-Roll Call, Inc via Getty Images]
2025-04-19T00:03:01Z

Deputy Treasury Secretary Michael Faulkender became the acting commissioner of the IRS on Friday. Faulkender is the third person to lead the IRS since tax season began and the fifth since Trump took office. Trump has nominated Billy Long for the role, but his confirmation is awaiting Senate approval.

The Internal Revenue Service had another leadership shake-up on Friday. Faulkender is the agency's third leader since tax week began and its fifth since Donald Trump took office in January.

Treasury Secretary Scott Bessent announced in a Friday statement that he had appointed his deputy, Michael Faulkender, to become acting commissioner of the IRS. Faulkender takes over from Gary Shapley, a former IRS staffer who held the position for just days following Melanie Krause's departure on Tuesday.

"Trust must be brought back to the IRS, and I am fully confident that Deputy Secretary Michael Faulkender is the right man for the moment," Bessent said in a statement on Friday. "Gary Shapley's passion and thoughtfulness for approaching ways by which to create durable and lasting reforms at the IRS is essential to our work, and he remains among my most important senior advisors at the US Treasury as we work together to rethink and reform the IRS."

Last month, Shapley was tapped as a senior advisor to Bessent.
He became a hero among conservatives following his testimony before Congress in July 2023. In that testimony, he and fellow IRS whistleblower Joseph Ziegler alleged that the Justice Department had delayed a criminal probe and tax investigation into Hunter Biden while President Joe Biden was in office.

In his statement, Bessent said Shapley and Ziegler would conduct a yearlong investigation into IRS reforms. Afterward, Bessent said, he "will ensure they are both in senior government roles that will enable the results of their investigation to translate into meaningful policy changes."

Shapley took over the role of acting IRS commissioner after Krause resigned on Tuesday. Her resignation followed an agreement between the IRS and the Department of Homeland Security to share sensitive tax information related to undocumented immigrants, to help the Trump administration locate and deport them, court documents show.

The agreement was revealed in early April in a partially redacted document. The document was filed in a case challenging the legality of the IRS sharing individuals' tax information with external agencies.

Krause took over the agency in an acting capacity after Doug O'Donnell resigned in February.

Trump has nominated former Republican Rep. Billy Long for the role, but his confirmation is awaiting Senate approval.

The uncertainty about the agency's leadership comes as the IRS faces significant staff cuts. Business Insider previously reported that the staffing cuts are intended "to increase the efficiency and effectiveness of the IRS," and include a 75% reduction in the IRS's Office of Civil Rights and Compliance.

GIZMODO.COM
A New Superman Featurette Puts Faith in Its Hero and James Gunn
There's a lot riding on Superman, but there's reason to believe Gunn's take on the DC hero will be a cinematic giant.

WWW.ARCHDAILY.COM
Whispering Arc House / IDIEQ
© Harsh Nigam

Architects: IDIEQ
Area: 5600 ft²
Year: 2025
Photographs: Harsh Nigam
Manufacturers: ACC Limited, Apollo, Astral Pipes, Bosch, Cico, Hindalco, Kohler, Mianzi, Surie Polex, TATA
Lead Architects: Saubhagya
Civil Contractor: Sanajay Upadhyay, Asif Khan

Text description provided by the architects. Nestled in the tranquil Bhabar region of Uttarakhand, adjacent to Jim Corbett National Park, Whispering Arc is a contemplative retreat that merges human ingenuity with natural grace. Conceived as a four-bedroom farmstay, the project explores a delicate equilibrium between raw materiality, sustainability, and the poetry of place.

The journey begins with a bold gesture: a red Corten steel gate that stands as both threshold and artwork. Set against the verdant landscape, its warm rusted tones mark the transition into a sanctuary where architecture feels rooted in the earth and open to the skies. This entry sets the tone for a project deeply attuned to its surroundings.

Designed for Gaurav Tinjni, Amit Madan & Mohit Khurana, the house pays homage to timeless forms and vernacular sensibilities. Exposed brick and sweeping arches define the spatial rhythm, creating a tactile, grounded experience. Reflected in the still waters of the pool, the arches form near-perfect circles, symbols of continuity and timelessness. These elements evoke both strength and serenity, offering a space that is as meditative as it is architectural.

The material palette is both minimal and meaningful. Textured brickwork, satin-polished concrete floors, and bamboo accents speak of restraint and elegance.
Funicular shells hang gracefully, capturing the play of light and shadow like frozen waves, while exposed concrete filler slabs offer a subtle nod to the local craft heritage.

Inside, the home flows with a quiet, cohesive energy. The interiors celebrate minimalism without austerity: natural materials, clean lines, and warm tones foster a sense of intimacy and reflection. The bathrooms, finished in seamless stained concrete, mirror the textures of the surrounding landscape, grounding the experience in earthy luxury.

Every detail, crafted by local artisans, contributes to a larger story of community and sustainability. Lighting fixtures are woven from rattan cane by tribal artisans, and a Mediterranean-style bamboo gazebo weaves light and air into patterns that change with the sun. These handmade elements preserve traditional skills while supporting local livelihoods.

Environmental stewardship is integral to the design. A comprehensive rainwater management system, including chain drips and a deep recharge pit, returns excess water to the ground, ensuring the house actively nurtures the ecosystem it inhabits. The building's siting and material choices reduce embodied energy, while the openness of the structure encourages natural ventilation and daylighting.

A distinctive parametric brick boundary wraps around the home like a woven skin. Its rhythm of curves and arches invites exploration, acting as both filter and frame for the landscape beyond. From a distance, a singular curve hints at the spatial drama within.

More than just a home, Whispering Arc is an architectural meditation on light and shadow, tradition and innovation, permanence and fluidity. It offers a vision of luxury rooted not in excess, but in intention and harmony.
Every brick, every shadow, every breeze that filters through the arches adds to its whispered narrative of a place that listens to the land and, in turn, becomes part of its silence.

Published on April 18, 2025
Cite: "Whispering Arc House / IDIEQ" 18 Apr 2025. ArchDaily. <https://www.archdaily.com/1029183/whispering-arc-house-idieq> ISSN 0719-8884

WWW.ARCHDAILY.COMWhispering Arc House / IDIEQWhispering Arc House / IDIEQSave this picture!© Harsh Nigam Architects: IDIEQ Area Area of this architecture project Area: 5600 ft² Year Completion year of this architecture project Year: 2025 Photographs Photographs:Harsh Nigam Manufacturers Brands with products used in this architecture project Manufacturers: ACC Limited, Apollo, Astral Pipes, Bosch, Cico, Hindalco, Kohler, Mianzi, Surie Polex, TATA Lead Architects: Saubhagya Civil Contractor: Sanajay Upadhyay, Asif Khan More SpecsLess Specs Save this picture! Text description provided by the architects. Nestled in the tranquil Bhabar region of Uttarakhand, adjacent to the Jim Corbett National Park, Whispering Arc is a contemplative retreat that merges human ingenuity with natural grace. Conceived as a four-bedroom farmstay, the project explores a delicate equilibrium between raw materiality, sustainability, and the poetry of place. The journey begins with a bold gesture: a red Corten steel gate that stands as both threshold and artwork. Set against the verdant landscape, its warm rusted tones mark the transition into a sanctuary where architecture feels rooted in the earth and open to the skies. This entry sets the tone for a project deeply attuned to its surroundings.Save this picture!Designed for Gaurav Tinjni, Amit Madan & Mohit Khurana the design pays homage to timeless forms and vernacular sensibilities. Exposed brick and sweeping arches define the spatial rhythm, creating a tactile, grounded experience. Reflected in the still waters of the pool, the arches form near-perfect circles—symbols of continuity and timelessness. These elements evoke both strength and serenity, offering a space that is as meditative as it is architectural. The material palette is both minimal and meaningful. Textured brickwork, satin-polished concrete floors, and bamboo accents speak of restraint and elegance. 
Funicular shells hang gracefully, capturing play of light and shadow like frozen waves, while exposed concrete filler slabs offer a subtle nod to the local craft heritage.Save this picture!Save this picture!Save this picture!Inside, the home flows with a quiet, cohesive energy. The interiors celebrate minimalism without austerity—natural materials, clean lines, and warm tones foster a sense of intimacy and reflection. The bathrooms, finished in seamless stained concrete, mirror the textures of the surrounding landscape, grounding the experience in earthy luxury.Save this picture!Save this picture!Crafted by local artisans, every detail contributes to a larger story of community and sustainability. Lighting fixtures are woven from rattan cane by tribal artisans, and a Mediterranean-style bamboo gazebo weaves light and air into patterns that change with the sun. These handmade elements preserve traditional skills while supporting local livelihoods.Save this picture!Save this picture!Save this picture!Save this picture!Save this picture!Environmental stewardship is integral to the design. A comprehensive rainwater management system—including chain drips and a deep recharge pit—returns excess water to the ground, ensuring the house actively nurtures the ecosystem it inhabits. The building's siting and material choices reduce embodied energy, while the openness of the structure encourages natural ventilation and daylighting. A distinctive parametric brick boundary wraps around the home like a woven skin—its rhythm of curves and arches invites exploration, acting as both filter and frame for the landscape beyond. From a distance, a singular curve hints at the spatial drama within.Save this picture!More than just a home, Whispering Arc is an architectural meditation—on light and shadow, tradition and innovation, permanence and fluidity. It offers a vision of luxury rooted not in excess, but in intention and harmony. 
Every brick, every shadow, every breeze that filters through the arches adds to its whispered narrative of a place that listens to the land and, in turn, becomes part of its silence.

Published on April 18, 2025. Cite: "Whispering Arc House / IDIEQ" 18 Apr 2025. ArchDaily. Accessed . <https://www.archdaily.com/1029183/whispering-arc-house-idieq> ISSN 0719-8884
0 Comments 0 Shares 57 Views

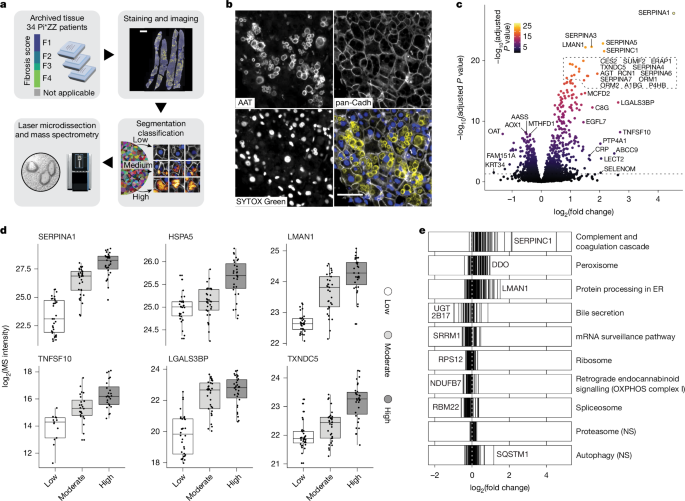

WWW.NATURE.COM
Deep Visual Proteomics maps proteotoxicity in a genetic liver disease
Nature, Published online: 16 April 2025; doi:10.1038/s41586-025-08885-4
High-resolution spatial proteomics were used to map molecular events during hepatocyte stress in pseudotime across all fibrosis stages, recapitulating known disease progression markers and revealing early peroxisomal activation and late-stage proteotoxic phenotypes.
0 Comments 0 Shares 46 Views

WWW.LIVESCIENCE.COM
Universe may revolve once every 500 billion years, and that could solve a problem that threatened to break cosmology
A slowly spinning universe could resolve a puzzle in physics known as the Hubble tension, a new model suggests.
0 Comments 0 Shares 45 Views

V.REDD.IT
My tribute to Mononoke
Just watched the movie in the theaters and felt super inspired 😩 submitted by /u/VossaDova
0 Comments 0 Shares 56 Views

MEDIUM.COM
Building Real-World AI: Build Real World AI Applications with Gemini and Imagen
Shaik Nomman · 3 min read

In the realm of Artificial Intelligence, the ability not only to generate realistic visuals but also to understand and describe them opens up exciting possibilities. In this post, we'll walk through building a practical application using Google Cloud's Vertex AI, where we'll leverage Imagen to generate a bouquet image and then Gemini to analyze it and create fitting birthday wishes.

Step 1: Setting the Stage with Vertex AI

Before we dive into the code, ensure you have the google-cloud-aiplatform library installed. You can install it using pip:

```bash
pip install google-cloud-aiplatform
```

Also, make sure you have your Google Cloud Project ID and the desired location (region) handy, as we'll need them to configure Vertex AI. The project also needs the Vertex AI API enabled and credentials the SDK can pick up, for example Application Default Credentials created with gcloud auth application-default login.

Step 2: Crafting the Bouquet with Imagen

Our first task is to generate an image of a bouquet based on a text prompt. We'll use the imagen-3.0-generate-002 model available on Vertex AI for this.
Here's the Python code:

```python
import vertexai
from vertexai.preview.vision_models import ImageGenerationModel

PROJECT_ID = 'your-gcp-project-id'  # Replace with your actual Project ID
LOCATION = 'your-gcp-region'  # Replace with your actual region


def generate_bouquet_image(project_id: str, location: str, output_file: str, prompt: str):
    """Generates an image of a bouquet based on the given prompt."""
    # Point the SDK at your project and region
    vertexai.init(project=project_id, location=location)
    model = ImageGenerationModel.from_pretrained("imagen-3.0-generate-002")
    images = model.generate_images(
        prompt=prompt,
        number_of_images=1,
        seed=1,  # fixed seed for repeatable output; only allowed with add_watermark=False
        add_watermark=False,
    )
    # Write the first (and only) generated image to disk
    images[0].save(location=output_file)
    print(f"Bouquet image generated and saved to: {output_file}")
    return images


if __name__ == "__main__":
    output_file_name = 'bouquet_image.jpeg'
    bouquet_prompt = 'Create an image containing a bouquet of 2 sunflowers and 3 roses'
    generate_bouquet_image(PROJECT_ID, LOCATION, output_file_name, bouquet_prompt)
```

Remember to replace 'your-gcp-project-id' and 'your-gcp-region' with your actual Google Cloud Project ID and region.

This script initializes Vertex AI, loads the Imagen model, and then generates an image based on the provided prompt. The generated image is saved as bouquet_image.jpeg in the current directory.

Step 3: Describing the Beauty with Gemini

Now that we have our bouquet image, let's use the Gemini model (gemini-2.0-flash-001) to analyze it and generate relevant birthday wishes.
Here's the code:

```python
import vertexai
from vertexai.generative_models import GenerativeModel, Part, Image

PROJECT_ID = 'your-gcp-project-id'  # Replace with your actual Project ID
LOCATION = 'your-gcp-region'  # Replace with your actual region


def analyze_bouquet_image(image_path: str):
    """Analyzes the image and generates birthday wishes based on it."""
    vertexai.init(project=PROJECT_ID, location=LOCATION)
    multimodal_model = GenerativeModel('gemini-2.0-flash-001')
    # Send a text instruction and the image together as one multimodal prompt
    responses = multimodal_model.generate_content(
        [
            Part.from_text('Generate birthday wishes based on this image'),
            Part.from_image(Image.load_from_file(image_path)),
        ],
        stream=True,  # yield the reply in chunks as it is generated
    )
    print("Birthday wishes (streaming):")
    for response in responses:
        print(response.text, end="", flush=True)
    print()


if __name__ == "__main__":
    image_file_path = "./bouquet_image.jpeg"
    analyze_bouquet_image(image_file_path)
```

Again, replace 'your-gcp-project-id' and 'your-gcp-region' with your actual Google Cloud Project ID and region.

This script initializes Vertex AI and loads the Gemini model. The analyze_bouquet_image function takes the path to the generated image (bouquet_image.jpeg). It then creates a multimodal prompt with a text instruction and the image loaded using Part.from_image(Image.load_from_file(image_path)).
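The script's loop prints each streamed chunk and then discards it. If you also want the complete wishes as one string (say, to write them into a card or a file), the chunks can be accumulated as they arrive. The following is a minimal sketch, not part of the Vertex AI SDK: `collect_stream` and `TextChunk` are hypothetical names, and the helper assumes only that each chunk exposes a `.text` string, which is what the script's loop already relies on. The demo feeds it stand-in objects so it runs without a Google Cloud project.

```python
from types import SimpleNamespace
from typing import Iterable, Protocol


class TextChunk(Protocol):
    """Assumed interface: any object with a .text string, like a streamed response chunk."""

    text: str


def collect_stream(chunks: Iterable[TextChunk], echo: bool = True) -> str:
    """Optionally echo each chunk as it arrives, and return the concatenated text.

    Hypothetical helper for illustration; not a Vertex AI SDK function.
    """
    parts: list[str] = []
    for chunk in chunks:
        text = chunk.text  # read once per chunk
        if echo:
            # Same streaming print as the original loop
            print(text, end="", flush=True)
        parts.append(text)
    if echo:
        print()  # end the streamed line with a newline
    return "".join(parts)


if __name__ == "__main__":
    # Offline demo: stand-in chunks instead of a real Gemini response stream
    fake_stream = [SimpleNamespace(text="Happy "), SimpleNamespace(text="birthday!")]
    wishes = collect_stream(fake_stream)
    print(repr(wishes))
```

Inside analyze_bouquet_image, the for loop and the final print() could then be replaced with wishes = collect_stream(responses), which prints the same streamed output and also returns the full text. Depending on the SDK version, reading .text on a chunk that carries no text (for example, a blocked response) may raise an error, so production code might guard that access.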
The stream=True parameter allows us to see the birthday wishes as they are generated.

Running the Application

To see this in action:

1. Save the first code block as a Python file (e.g., generate_bouquet.py).
2. Save the second code block as another Python file (e.g., analyze_bouquet.py).
3. Ensure you replace the placeholder Project ID and Region in both files.

First, run the image generation script:

```bash
python3 generate_bouquet.py
```

This will create the bouquet_image.jpeg file. Then, run the image analysis script:

```bash
python3 analyze_bouquet.py
```

This will load the generated image and print the streaming birthday wishes to your console.

The Power of Combining Imagen and Gemini

This example demonstrates a simple yet powerful application of combining Imagen's image generation capabilities with Gemini's multimodal understanding. Imagine extending this to build interactive applications where users describe their dream visuals and receive both a realistic representation and contextually relevant descriptions or follow-up actions.

Vertex AI makes these cutting-edge models readily accessible, paving the way for innovative solutions in domains ranging from creative content generation to intelligent image analysis.

#VertexAI #Gemini #Imagen #GenerativeAI #MultiModalAI #GoogleCloud #AIApplications #RealWorldAI #Python
0 Comments 0 Shares 67 Views