METRO.CO.UK
Games Inbox: What was the best game in the Xbox Developer Direct?

Doom: The Dark Ages - now with added story (Bethesda)

The Friday letters page is surprised and worried that EA has had two sales disappointments, as one reader is glad Ninja Gaiden is making a comeback.

To join in with the discussions yourself, email gamecentral@metro.co.uk

Not only on Xbox
Very interesting Developer Direct for Xbox. Lots of great games that had nothing to do with Activision Blizzard, and yet they've got more games coming out in the first four months of this year than Sony has had in over two years. It's crazy that that's true and I'm not even exaggerating.

If Microsoft can keep this up then I will be very impressed, but why couldn't they have done this years ago? I've got a PlayStation 5 and none of this gives me any urge to buy an Xbox console or Game Pass. Why would I? I'll just buy the games I want on the console I've got, probably some of them after they've been discounted.

Xbox is just another publisher now, like EA or Ubisoft. In terms of how I connect with their games there's no difference. Was 25 years of Xbox gaming really leading up to this? If it makes money for them, it makes money, but it means they now have very little influence on the future of gaming.

Without Xbox we wouldn't have got online gaming until years later, if it was just left to Sony. And in general it's good to have a competitor for PlayStation, since Nintendo play by their own rules. It's a strange way to end the Xbox dream: successful, but not in any of the things they originally set out to do.
Tacle

Sight and sound
I'm in two minds over the Doom: The Dark Ages reveal. On the one hand I like what I see, but a lot of what the devs were saying was really worrying. They obviously know everyone's going to call it dumbed down, whether it is or not, and the stuff about the story is just... what? What does Doom need a story for?
Especially cut scenes that are going to be keeping you from the action.

To me, it sounds like someone high up at Bethesda wasn't happy with the sales of the last couple and said they had to make it more accessible to casual gamers. But, as usual when a publisher says that, it's not going to make a blind bit of difference and will likely only put off the fans they already had by changing things.

Hopefully it's not that bad, because I really like the idea of the chain shield, but these are just totally unnecessary changes that are a huge red flag. I'd love to know whose idea they were, so I can call them an idiot.
Jonesy

Obscure Claire
I knew the secret game at the Developer Direct would be Ninja Gaiden 4. It was so obvious as soon as the possibility arose, because Microsoft loves to obsess over old Xbox games and you can tell they think this is one of theirs. I bet they publish the next Dead Or Alive too, if and when that happens.

But like you said, if Microsoft is, for whatever reason, funding non-blockbuster games then don't knock it; it's good for everyone. This one not only helps PlatinumGames out but also brings back a franchise that probably would've been dead without them. I'm all for it and I hope it turns out well, even if the graphics look kind of bad.

I like the look of that French role-playing game too. For me this solidifies that Japanese role-playing games are absolutely a genre and not just where they're made. It even has a terrible name that nobody will remember or be able to spell, so authentic!
Focus

Email your comments to: gamecentral@metro.co.uk

The double
Very interesting to see EA suffering two flops (or at least disappointments) in a row; I can't remember that ever happening before. I'm not surprised at Dragon Age: The Veilguard, as the marketing for that was very bad.
I'd bet that a lot of the damage was done with that terrible reveal trailer, which made it seem like some sort of bad comedy game for kids.

I have no idea what they were thinking with that, but it should mean the game goes cheap sooner than it would've done and I can pick it up then.

The one that does surprise me, though, is EA Sports FC 25. If the problem is bugs that would explain it, but even so, I thought FIFA fans basically put up with anything. It's tempting to say that the answer is always 'make good games', but it's also 'do good marketing', ideally with someone who has an understanding of the game and its audience.

Whatever you think of them, we don't need EA doing a Ubisoft. Especially as they'll just end up cheap enough for some other company to buy up and absorb.
Zeiss

The obvious choices
I feel like a 3D Mario and a Zelda remaster are so easy to guess for the Nintendo Switch 2 launch that you can't rely on any leak that mentions them, but I still hope it's true. The original Switch had such an amazing first year and so far everything suggests Nintendo is going to try and repeat that success in as many specific ways as possible.

That means Mario Kart probably coming out in the autumn, which I'm fine with as I agree it's not the sort of thing that seems right for a launch game. Personally, I hope the Zelda remaster is actually another remake of one of the 2D Zelda games. A Link To The Past or the Capcom games would be perfect, if you ask me, but I don't know how likely that is.
Calbren

Grab bag
I can't say that I was all that impressed by the Switch 2 reveal. Maybe the leaks sort of took the wind out of its sails. It's an interesting strategy for a company such as Nintendo to double down on proven success (the Wii U was their lesson not to) when they are proven and successful risk takers in an increasingly volatile and risk-averse market, but I'm positive that they now have a winning formula going forwards.

Maybe, some might say sadly, they've steered into the incremental zone.
It's the games though, and if the new hardware means that's positive progress then count me in (my inner cynicism tells me to wait for the inevitable OLED model).

With that statement, I'm going to try to invoke some Inbox magic as of now, in no particular order: Metroid Prime 2 & 3 remasters for Switch 1/2 crossover, Shenmue remasters (à la PlayStation 4 standard), new Nintendogs, any Chibi-Robo!, Tenchu: Stealth Assassins, Dino Crisis, a new Hotel Dusk episode, a new F-Zero iteration, and a Super Mario Land 2 remake (along with original/reworked soundtrack options).

That's the spaghetti thrown at the wall. I could go on, but I think the cauldron is steaming dry already. I'll grab my coat.
D Dubya

GC: That is a very random selection of games, but we wish you luck.

Breeding disappointment
I'm actually okay with Team17 changing their name because there is nothing about them now that has any similarity to what they used to be. Saying that, I still wish they'd make a new Alien Breed game; weren't they going to at one point? I'm sure I remember them announcing one.

Considering they've already given up on Worms, though, I'm not surprised. I'm not even going to bother mentioning Superfrog.
Bowie

GC: There was a reboot trilogy of episodic games in the Xbox 360 era, but we don't recall them mentioning it since. You might be thinking of indie game Xenosis: Alien Infection, which we saw in preview but which was never released.

Tough luck
One aspect of the Switch 2 that doesn't seem to be getting a lot of attention is its backwards compatibility. I was wondering what people are hoping for from this?
I'm not expecting huge gains on old software and don't expect old third-party titles to be patched for the new machine beyond a few classics, but stabilising existing titles at their target settings just via the extra grunt would be very welcome.

Some of Nintendo's own titles would obviously benefit from a native glow-up too; Zelda: Tears Of The Kingdom does drop from its 30fps cap into the mid-to-low 20s quite often (clearing out monster camps with the squad is a nightmare). However, I don't expect any free lunches from Nintendo; tough love is their policy when it comes to fans.

Sony has been (unfairly, in my opinion) criticised for putting out PlayStation 4 remasters, but paying some kind of fee for upgrades seems fair; remastering does require resources. Also, Nintendo has a history of maximising income from its back catalogue, and their fans have proven to be happy to fork out again for games in a way they aren't on other machines.

I definitely expect full-price Switch 2 versions of the two main Switch Zelda titles, Super Mario Odyssey, some Pokémon, and select others at upscaled 4K with other technical improvements. I just hope that Nintendo put out modestly priced upgrade patches for existing owners; I would pay a tenner (Sony style) to take my copy of Zelda: Breath Of The Wild to 4K/60fps.

However, I wouldn't be surprised if they expect us to fork out full price again for the new versions and existing owners are stuck at 720p/30fps on their new consoles. How would you review that? It's still a 10/10 game, but such an obvious fleecing of fans would leave such a bad taste that I think it'd be worth a points docking.
Marc

GC: But that's irrelevant to anyone who hasn't played/bought it before. We'd probably take the same approach we did with Super Mario 3D All-Stars, where we scored it highly but made it clear we considered the whole thing a cynical cash grab.

Inbox also-rans
Here's my GTA 6 trailer theory: there isn't going to be one until after the summer because it's been delayed to next year.
Prove me wrong!
Bob

Was Dark Souls ever in Super Smash Bros. Ultimate? I swear there was a sticker or something, but I can't see it in anything I've unlocked.
Raton

GC: No. You may be thinking of the Solaire of Astora amiibo (which, ironically, is not compatible with Super Smash Bros. Ultimate).

The small print
New Inbox updates appear every weekday morning, with special Hot Topic Inboxes at the weekend. Readers' letters are used on merit and may be edited for length and content.

You can also submit your own 500 to 600-word Reader's Feature at any time via email or our Submit Stuff page, which, if used, will be shown in the next available weekend slot.

You can also leave your comments below, and don't forget to follow us on Twitter.

GIZMODO.COM
How to watch Djokovic vs Zverev live stream on a free TV channel

After a spectacular win the other day, Djokovic continues his Australian Open domination as he faces Zverev. If you want to follow the semi-final, you might be wondering how to watch Djokovic vs Zverev online for free.

Today, we'll discuss a way to watch the Djokovic vs Zverev live stream on a free channel without spending a dollar. We're talking about a legal HD stream with English commentary. Read on for more information.

US: ESPN+
UK: Eurosport
Free channel: 9Now / Channel 9 (Australia)

How to Watch Djokovic vs Zverev Live Stream for Free Online
In a recent article, we explained how to access the Australian Open live stream on a free channel. The free channel we mentioned is 9Now.

9Now is an Australia-based video-on-demand service and simultaneously a popular channel, also known as Channel 9.

This is the official broadcaster of the Australian Open, which also streams Djokovic vs Zverev live for free. Since 9Now is free, you only need an account, but the problem is that it works only in Australia. Luckily, people online have found a solution.

They reported that using a VPN, such as NordVPN, made 9Now available.

Those abroad mentioned that connecting to an Australian server assigned them an IP address from that country. So, even if they were in the UK, Canada, or Europe, they could still access the channel. Now, NordVPN isn't free.

However, these people reported using its 30-day refund policy.

Essentially, for years, they've been streaming the AO on 9Now for three weeks or so and obtaining a refund just after the tournament ended.
With no money lost in the end, it's clear how this solution can help you watch Djokovic vs Zverev live on a free channel.

Djokovic vs Zverev Rivalry
Watching this Djokovic vs Zverev live stream for free is exciting for many.

The two tennis giants have met 12 times so far, and Djokovic has come out on top 8 times. However, Zverev is only 27 and is ranked higher in the ATP rankings than Djokovic.

Their 13th duel will be played in the Australian Open semi-finals, where we'll see who will make it to the final and potentially lift the trophy of the year's first Grand Slam.

atptour.com

Now that you know how to access the Zverev vs Djokovic live stream on a free channel, it should be easy to get right into it. An HD stream of the duel awaits you on 9Now.

If you apply the fix tennis fans worldwide have used, you can enjoy it as well. By the way, feel free to keep the VPN for a few more days so you can also enjoy the Australian Open final.

If needed, you can have a look at how to unblock other Australian TV channels abroad in a dedicated guide on our website. It'll help you access other sports events for free.

WWW.ARCHDAILY.COM
Museum of Meenakari Heritage & Flagship Store / Studio Lotus

Gallery, Retail, Showroom
Jaipur, India
Architects: Studio Lotus
Area: 20360 ft²
Year: 2024
Photographs: The Fishy Project

Studio Lotus proposes a unique archetype for retail design with the new brand experience center for luxury jewelry label Sunita Shekhawat. The art of Meenakari, or enamel work, is an age-old technique renowned for its vibrant and intricate designs on metal surfaces. While enamel work originated in Persia, it has flourished in India, particularly in Rajasthan, where it has been passed down through the generations and is deeply embedded in the region's artistic legacy. This 16th-century art serves as the foundation for the work of well-known Indian jewelry designer Sunita Shekhawat. Her eponymous brand puts a fresh spin on the age-old tradition with timeless yet contemporary jewelry. Studio Lotus' design for the brand's flagship store and Museum of Meenakari in Jaipur pays homage to this approach: a nod to the region's vibrant cultural heritage amidst a fast-evolving cosmopolitan landscape.

An existing concrete shell had already been erected on site when Studio Lotus was brought on board. The studio was given carte blanche to pull the structure down and treat the site as a blank canvas. However, since the shell had only recently been cast in concrete, the architects decided to work with it, in line with their design philosophy of minimizing the embodied carbon footprint.

Programmatically, the ground floor houses a museum gallery, with the store located one floor below and the offices a floor above.
The second floor is intended for bringing in like-minded luxury brands, and the top floor, with its panoramic views of the nearby Rajmahal Palace, is designed for use as a restaurant.

The identity of the building is shaped by multiple historical influences of the region (Rajputana, Mughal, and Art Deco) to create a unique composite distinct from any particular style. This new vocabulary mirrors Sunita Shekhawat's own design approach to her jewelry, which, while rooted in tradition, crosses over seamlessly into European and other contemporary iterations.

The oddly shaped footprint was articulated externally by bevelling the balconies and then devising a form that intricately layers patterns and details drawn from different periods, woven together into one cohesive image. Externally, the ground and first floors are articulated to read as one through a double-height entrance arch in elevation. Clad in hand-carved Jodhpur red sandstone, the facade harks back to Sunita Shekhawat's roots in Jodhpur. While quite distinct in expression, it fits seamlessly into the pink palette synonymous with Jaipur's urban fabric.

On the inside, the client's three floors are unified by a striking sculptural staircase that winds through the center of the space. The original brief had the retail space occupying the ground floor, the basement housing offices and karigars' workshops, and the remaining floors let out. The studio suggested to the client that the ground level be used as an opportunity for the Sunita Shekhawat brand to own a storytelling space on the art of enameling (a fitting way of giving back to the arts and cultural heritage of the city) through the introduction of a museum-gallery that traces the provenance and history of Meenakari craftsmanship.
This distinct addition not only serves as the fulcrum of the experience but also elevates the brand from a jewelry label to a custodian of the craft. The museum experience has been designed by Sidhartha Das, with the content curated by Usha Balakrishnan, a foremost expert on Indian jewelry traditions.

The first floor, a bright, daylit space crafted out of lime plaster, stone, and terrazzo, houses the client's office and design studio.

With its wide floorplate and tall ceilings, the lower ground floor lends itself well to the exclusive, by-appointment-only, bespoke nature of the business and of the product; the lack of natural light is conducive to the controlled lighting necessary for jewelry display. The first experience is that of a library-cum-lounge space that leads guests into one of four private pods designed for one-on-one client interactions. The floor also accommodates a jewelry finishing unit, strongrooms, workshops for karigars, and ancillary spaces for accounts and sales. The circulation of the retail space allows for discreet, private servicing of all these pods through a service corridor that wraps around their external periphery, ensuring that guests have a highly personalized experience with a sense of mystery to it.

The pods are clad in an off-white 'araish' lime stucco, their semi-vaulted ceilings embellished with frescoes by artists specializing in miniature painting. Developed in situ over several months by 12 artisans, the frescoes depict vignettes of the region's architecture, flora, and fauna. The outcome, a jewel-like expression of hand-crafted luxury that celebrates the brand's ethos, is a testament to the skills of the artisans, who interpreted these motifs at an unfamiliar scale and in an unfamiliar medium. It is the result of a synergistic collaboration between the architects, the client, and Nisha Vikram of CraftCanvas.
The remaining surfaces are monochromatic, so that nothing at eye level distracts from the intricately crafted jewelry on display.

The Sunita Shekhawat Flagship Store is designed to foster an environment where purchasing jewelry is not the primary goal but the natural conclusion of a transformative experience. The Meenakari museum, the first of its kind, is the client's way of paying homage to the city that has given her so much, while also establishing a novel paradigm in luxury retail design.

Project location: Jaipur, India
Office: Studio Lotus
Published on January 24, 2025
Cite: "Museum of Meenakari Heritage & Flagship Store / Studio Lotus" 23 Jan 2025. ArchDaily. Accessed . <https://www.archdaily.com/1025982/museum-of-meenakari-heritage-and-flagship-store-studio-lotus> ISSN 0719-8884


WWW.DISCOVERMAGAZINE.COM
Romanian Animal Fossils Reveal Hominin Spread Into Europe 2 Million Years Ago

In the relentless search to understand how human ancestors spread across the world, the latest evidence shows that hominins were in Europe at least 1.95 million years ago. Researchers recently found clues to their presence at an archaeological site in Romania that could help explain hominins' early dispersal out of Africa.

A study published in Nature Communications details the find, which predates evidence of hominins previously found at other sites across Europe. The researchers uncovered new answers at Grăunceanu, a fossil-rich site in a river valley south of the Carpathian Mountains.

Looking for Hominin Evidence

The researchers didn't identify hominin remains at Grăunceanu; instead, they found animal bones with cut marks that revealed a hominin presence. The site was once teeming with now-extinct fauna, including several carnivores, equine species, rhinocerotoids, and rodents.

The presence of certain warm-adapted (or less cold-tolerant) species, such as ostriches, pangolins, and Paradolichopithecus (an ancient monkey), also indicates that the river valley had mild winters. Isotope analysis of the fossilized upper jaw of a horse further allowed the researchers to conclude that the region was temperate, with seasonal variability.

These conditions would have made Grăunceanu and the surrounding region a fitting home for hominins. The researchers tested this by examining 4,524 faunal specimens, dated to around 1.95 million years ago, for signs of bone surface modification. They detected probable cut marks on 20 of the specimens, eight of which were classified as high-confidence marks. These high-confidence marks were located on four tibiae, one mandible, one humerus, and two long bone fragments.
Based on their anatomical location, these marks appear to reflect defleshing by hominins.

Who Were the Grăunceanu Hominins?

The bone surface modifications show that hominins had likely reached Grăunceanu around 2 million years ago, making it the earliest known hominin site in Europe so far. The researchers, however, cannot say for certain which species the hominins were.

Hominin fossils in Europe are commonly identified as Homo sapiens, with some identified as the older Homo erectus. Because the earliest H. erectus sensu lato (referring to African and West Eurasian populations of H. erectus and potentially related subspecies) appeared in South Africa and Ethiopia around 2 million years ago, it is possible either that the Grăunceanu hominins were not H. erectus or that H. erectus is older than current data suggest.

Hominin Journeys Across Eurasia

Evidence of hominin dispersal has been unearthed at several sites across the Middle East, Russia, and China, dating from 2 million to 1.5 million years ago. But before the find at Grăunceanu, the oldest known hominin site in Europe was Dmanisi, a town in the nation of Georgia. This site, which contains hominin remains and butchery marks on animal bones, is dated to around 1.85 million years ago. The identity of the Dmanisi hominins (specifically, their relation to H. erectus and H. habilis) is also disputed, and the population is sometimes referred to as Homo georgicus.

With the evidence at Grăunceanu, the date of archaic humans' trek into Europe can be pushed back. The study suggests that our ancestors may have been spreading into unexplored areas of Eurasia earlier than previously thought, taking advantage of mild climates in temperate environments.

Article Sources

Our writers at Discovermagazine.com use peer-reviewed studies and high-quality sources for our articles, and our editors review for scientific accuracy and editorial standards.
Review the sources used below for this article:
Nature Communications. Hominin presence in Eurasia by at least 1.95 million years ago
UNESCO World Heritage Convention. Dmanisi Hominid Archaeological Site

Jack Knudson is an assistant editor at Discover with a strong interest in environmental science and history. Before joining Discover in 2023, he studied journalism at the Scripps College of Communication at Ohio University and previously interned at Recycling Today magazine.


WWW.NATURE.COM
Daily briefing: Scientific serendipity is everywhere
Nature, Published online: 22 January 2025; doi:10.1038/d41586-025-00228-7
The chance of a lucky break in biomedical research increases with bigger funding grants. Plus, the changing landscape of menopause treatments.


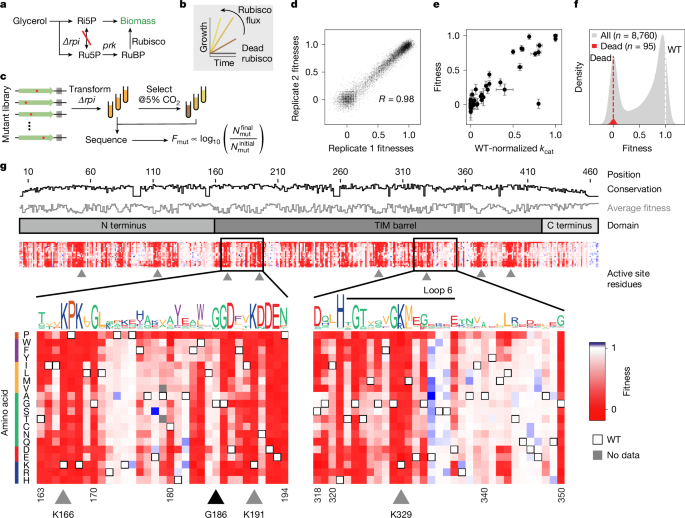

WWW.NATURE.COM
A map of the rubisco biochemical landscape
Nature, Published online: 22 January 2025; doi:10.1038/s41586-024-08455-0
A massively parallel assay developed to map the essential photosynthetic enzyme rubisco showed that non-trivial biochemical changes and improvements in CO2 affinity are possible, signposting further enzyme engineering efforts to increase crop yields.


CGSHARES.COM
Tutorial: Creating Transition & Reveal Effect In Cinema 4D

In this video, you'll learn how to use Cinema 4D's PolyFX to break your object into small pieces that gradually come together for a reveal. This feature has been around for a while and is handy for motion graphics and similar projects, making it well suited to sci-fi and technology animations.

The tutorial also demonstrates how to apply the animation to a proxy object first, which lets you fine-tune everything quickly before applying the effects to your high-resolution object. Grab the free car model used in the tutorial here and follow along.

The Pixel Lab is a fantastic resource for 3D and VFX artists, offering a huge collection of free models, hundreds of tutorials for Cinema 4D, Octane, and After Effects, and other products designed to speed up your motion design workflow. Visit their site for more of their recent quick tips.

The post Tutorial: Creating Transition & Reveal Effect In Cinema 4D appeared first on CG SHARES.


CGSHARES.COM
Artist Compared Range of Motion of Live2D & 3D Models

An artist known as tetra decided to test the range of motion of Live2D and 3D models, showing a cute anime girl making faces. Spoiler: there isn't much difference, although the 3D model moves a little more freely.

If you're not familiar with it, Live2D is an animation technique that animates an image by separating it into parts and working on each one. Live2D is also the name of the software that generates real-time 2D animations based on this technique.

One of the best illustrations of this is rukusu20XX's experiment combining a 3D body with a Live2D head. We have also recently seen a 3D-like halo made in Live2D, a hand model, a cute cat, and a smooth anime character.

If you like tetra's work, visit their X/Twitter for more.

The post Artist Compared Range of Motion of Live2D & 3D Models appeared first on CG SHARES.


WWW.GAMESPOT.COM
Revisit David Lynch's Dune With These 4K Blu-Ray And Collectible Book Deals

David Lynch was one of the most revered filmmakers in Hollywood, with classics such as The Straight Story, Twin Peaks, Eraserhead, and Mulholland Drive credited to his name over a nearly 50-year career writing and directing for film and TV. Lynch also directed the first adaptation of Frank Herbert's sci-fi novel Dune. Unlike Twin Peaks and the aforementioned films, Dune was not well received in 1984 (Lynch himself wasn't even a fan), but audiences have warmed to it over the years. If you're interested in revisiting Lynch's work or experiencing it for the first time in the wake of his death last week, Dune remains fascinating and visually impressive 40 years after its release.

David Lynch's Dune: Special Edition (4K Blu-ray) is on sale for only $25, down from $50, at Walmart. Amazon had this deal earlier today but was sold out at the time of writing. And if you'd like to learn more about the tumultuous production of one of Lynch's most divisive films, the 560-page oral history of Dune is available for only $20 in hardcover at Amazon and Walmart.
