Engadget Podcast: The AI and XR of Google I/O 2025

Would you believe Google really wants to sell you on its AI? This week, we dive into the news from Google I/O 2025 with Engadget's Karissa Bell. We discuss how Gemini is headed to even more places, as well as Karissa's brief hands-on with Google's prototype XR glasses. It seems like Google is trying a bit harder now than it did with Google Glass and its defunct Daydream VR platform. But will the company end up giving up again, or does it really have a shot against Meta and Apple?

Subscribe!

iTunes

Spotify

Pocket Casts

Stitcher

Google Podcasts

Topics

Lots of AI and a little XR: Highlights from Google I/O 2025 – 1:15

OpenAI buys Jony Ive’s design company for $6.5B, in an all equity deal – 29:27

Fujifilm’s X Half could be the perfect retro camera for the social media age – 39:42

Sesame Street is moving from HBO to Netflix – 44:09

Cuts to IMLS will lead to headaches accessing content on apps like Libby and Hoopla – 45:49

Listener Mail: Should I replace my Chromebook with a Mac or PC Laptop? – 48:33

Pop culture picks – 52:22

Credits

Hosts: Devindra Hardawar and Karissa Bell

Producer: Ben Ellman

Music: Dale North and Terrence O'Brien

Transcript

Devindra: What's up, internet, and welcome back to the Engadget Podcast. I'm Senior Editor Devindra Hardawar. I'm joined this morning by Senior Writer Karissa Bell. Hello, Karissa.

Karissa: Hello. Good morning.

Devindra: Good morning. And also podcast producer Ben Ellman. Hey Ben, I'm muted my dang self. Hello. Hello, Ben. Good morning. It's been a busy week, like it's one of those weeks where three major conferences happened all at once, with varying relevance to us. Google I/O is the big one. We'll be talking about that with Karissa, who was there and got to demo Google's XR glasses, but also Computex was happening. That's over in Taipei and we got a lot of news from that too. We'll mention some of those things.

Also, Microsoft Build happened, and I feel like this was the least relevant Build to us ever. I got one bit of news I can mention there. That's pretty much it. It's been a crazy hectic week for us over at Engadget. As always, if you're enjoying the show, please feel free to subscribe to us on iTunes or your podcast catcher of choice.

Leave us a review on iTunes, drop us an email at podcast@engadget.com. Those emails, by the way, if you ask a good question, it could end up being part of our Ask Engadget section, so that's something we're starting out. I have another good one I'll be throwing to Ask Engadget soon. So send us your emails: podcast@engadget.com.

Google I/O. It's all about AI, isn't it? I feel like, Karissa, we were watching the keynote for this thing and it felt like it went on and on. The thing about the things, like we all pretty much expect: more about Gemini AI, more about their newer models, a bit about XR. Can you give me, what's your overall impression of I/O at this point?

Karissa: Yeah, it's interesting because I've been covering I/O long enough that I remember back when it used to be Android. And then there'd be like that little section at the end about AI and some of the other stuff. And now it's completely reversed, where it's entirely AI and basically no Android, to the point where they had a whole separate event with their typical Android stuff the week before, so they didn't have to go through and talk about any of, yeah, the mobile things.

Devindra: That was just like a live stream that was just like a chill live stream. No real effort put into it. Whereas this is the whole show. They had a, who was it? But they had Toro y Moi. Toro y Moi, yeah. They had actual music, which is something a lot of these folks do at keynotes.

It's actually really disconcerting to see cool musicians taking the corporate gig and performing at one of these things. I think it was like 2013, 2014, maybe the Intel one, IDF or something. But The Weeknd was there, just trying to jam to all these nerds, and it was sad, but yeah. How was the experience, Karissa, like actually going there?

Karissa: Yeah, it was good. That keynote is always kind of a slog. Just live blogging for almost two hours straight, just constantly, it's a lot. I did like the music. Toro y Moi was very chill. It was a nice way to start. I much preferred it over the crazy Loop Daddy set we got last year.

If anyone remembers that.

Devindra: Yeah.

Ben: Yeah. Oh, I remember that. Marc Rebillet was at I/O. That was so weird.

Devindra: Yeah. Yeah, it was a little intense. Cool. So what are some of the highlights? Like there, there's a bunch of stuff. If you go look on the site on Engadget, we have rounded up like all the major news, and that includes a couple of things like, hey, AI Mode, the chatbot coming to Search.

That's cool. We got more, I think the thing a lot of people were looking at was like Project Astra and where that's gonna be going. And that is the sort of universal AI assistant where you could hold your phone up and just ask it questions about the world. We got another demo video about that.

Which again, the actual utility of it, I'm weirded out by. There was also one video where they were just like, I'm gonna be dumb. I'm gonna pretend I'm very stupid and ask Astra, what is this tall building in front of me. And it was like a fire hydrant or something. It was like some piece of street thing.

It was not a really well done demo. Do you have any thoughts about that, Karissa? Does that seem more compelling to you now or is it the same as what we saw last year?

Karissa: I think what was interesting to me about it was that we saw Astra last year and, I think there was a lot of excitement around that, but it wasn't really entirely clear where that project is going. They've said it's like an experimental research thing. And then, I feel like this year they really laid out that they want to bring all that stuff to Gemini. Astra is sort of their place to like tinker with this and get all this stuff working.

But like their end game is putting this into Gemini. You can already see it a little bit in Gemini Live, which is like their multimodal feature where you can do some version of what Astra can do. And so that was interesting. They're saying, we want Gemini to be this universal AI assistant.

They didn't use the word AGI or anything like that. But I think it's pretty clear where they're going and like what their ambition is. They want this to be an all seeing, all knowing AI assistant that can help you with anything, is what they're trying to sell it as.

Devindra: It is weird, like we're watching the demo video and it's a guy trying to fix his bike and he is pointing his phone at like the bike and asking questions like which, which particular, I don't know. It's which particular nut do I need for this tightening thing and it's giving him good advice.

It's pointing to things on YouTube. I don't know how useful this will actually be. This kind of goes to part of the stuff we're seeing with AI too, of just like offloading some of the grunt work of human intelligence, because you can do this right now. People have been YouTubing to fix things forever.

YouTube has become this like information repository of just fix it stuff or home plumbing or whatever. And now it's just like you'll be able to talk to your phone. It'll direct you right to those videos or extract the actual instructions from those. That's cool. I feel like that's among the more useful things, more useful than like putting Gemini right into Chrome, which is another thing they're talking about, and I don't know how useful that is other than they wanna push AI in front of us, just like Microsoft wants to push Copilot in front of us at all times.

Ben: What is a situation where you would have a question about your Chrome tabs? Like I'm not one of those people that has 15 Chrome tabs open at any given time, and I know that I am. Yeah, I know.

Wait, you're saying that like it's a high. Like it's high. Yeah, no I know. So I have an abnormally low number of Chrome tabs open, but can you still come up with an idea of why you would ask Gemini anything about your own tabs open? Hopefully you have them organized. At least

Karissa: They showed a few examples of like online shopping, like maybe you have two tabs of two different products open. And you can say

Devindra: exactly,

Karissa: ask Gemini to like, compare the reviews. Or they use like the example of a recipe video, a recipe blog. And maybe, you wanna make some kind of modification, make the recipe gluten free. And you could ask Gemini Hey, make this how would I make this gluten free?

But I think you're right, like it's not exactly clear. You can already just open a new tab and go to Gemini and ask it something. So they're just trying to reduce

Devindra: friction. I think that's the main thing. Like just the less you have to think about it, the more it's in your face. You can just always always just jump right to it.

It's, hey, you can Google search from your URL bar, your location bar, in any browser. We've just grown to use that, but that didn't used to be the case. I remember there used to be a separate Google field in some browsers, and it wasn't always there in every browser, too. They did announce some new models.

We saw there's Gemini 2.5 Pro. There's a Deep Think reasoning model. There's also a Flash model that they announced for smaller devices. Did they show any good demos of the reasoning stuff? Because that's essentially slower AI processing to hopefully get you better answers with fewer flaws.

Did they actually show how that worked? Karissa.

Karissa: I only saw what we all saw during the keynote and I think it's, we've seen a few other AI companies do something similar where you can see it think like its reasoning process. Yeah. And see it do that in real time.

But I think it's a bit unclear exactly what that's gonna look like.

Devindra: Watching a video: oh, Gemini can simulate nature, simulate light, simulate puzzles, turn images into code.

Ben: I feel like the big thing, yeah. A lot of this stuff is from DeepMind, right? This is DeepMind, an Alphabet company.

Devindra: DeepMind, an Alphabet company. There is DeepMind. This is Deep Think, and don't confuse this with DeepSeek, which is the Chinese AI company, and they clearly knew what they were doing when they called it that thing, DeepSeek. But no, yeah, this is partially stuff coming out of DeepMind.

DeepMind, a company which Google has been like doing stuff with for a while. And we just have not really seen much out of it. So I guess Gemini and all their AI processes are a way to do that. We also saw something that got a lot of people...

Ben: We saw a Nobel Prize from them. Come on.

Devindra: Hey, we did see that.

What does that mean? What is that even worth anymore? That's an open question. They also showed off a new video tool called Flow, which I think got a lot of people intrigued because it's using a new Veo 3 model. So an updated version of what they've had for video effects for a while.

And the results look good. Like the video looks higher quality. Humans look more realistic. The interesting thing about Veo 3 is it can also do synchronized audio to actually produce audio and dialogue for people too. So people have been uploading videos around this stuff online at this point, and you have to subscribe to the crazy high end version of Google's subscription to even test out this thing at this point. That is the AI Ultra plan that costs a month. But I saw something of, yeah, here's a pretend tour of a make believe car show. And it was just people spouting random facts. So: yeah, I like EVs. I would like an EV.

And then it looks realistic. They sound synchronized, like, you could think this is a normal person. Then they just kinda start laughing at the end for no reason. Like weird little things. It's like if you see a sociopath try to pretend to be a human for a little bit. There's real Patrick Bateman vibes from a lot of those things, so I don't know.

It's fun. It's cool. I think there's...

Ben: So didn't they announce that they also had a tool to help you figure out whether or not a video was generated by Flow?

Devindra: They did announce that too.

Ben: I've, yeah, go ahead. Go ahead.

Karissa: Yeah. The SynthID, they've been working on that for a while. They talked about it last year at I/O.

That's like their digital watermarking technology. And the funny thing about this is the whole concept of AI watermarking is you put these like invisible watermarks into AI generated content. You couldn't just see it, just watching this content.

But you can go to this website now and basically like double check if it has one of these watermarks. On one hand, I think it's important that they do this work, but I also just wonder how many people are gonna see a video and think, I wonder what kind of AI is in this.

Let me go to this other website and like double check it. Just,

Ben: Yeah. The people who are most likely to immediately believe it are also the least likely to go to the website and be like, I would like to double check this.

Devindra: It doesn't matter because most people will not do it and the damage will be done.

Just having super hyper realistic AI video, you can essentially make anything happen. It's funny that the big bad AI bad guy in the new Mission: Impossible movies, the Entity, one of the main things it does is, oh, we don't know what's true anymore because the Entity can just fabricate reality at whim.

We're just doing that. We're just doing that for, I don't know, for fun. I feel like this is a thing we should see in all AI video tools. This doesn't really answer the question that everyone's having, though: what is the point of these tools? Because it does devalue filmmaking, it devalues people using actual actors or going out and actually shooting something.

Did Google make a better pitch for why you would use Flow Karissa or how it would fit into like actual filmmaking?

Karissa: I'm not sure they did. They showed that goofy Darren Aronofsky trailer for some woman who was trying to like make a movie about her own birth, and it seemed like they were trying to be in the style of some sort of psychological thriller, but it just, I don't know, it just felt really weird to me.

I was I was just like, what are we watching? This doesn't, what are we watching? Yeah.

Ben: Was there any like good backstory about why she was doing that either or was it just Hey, we're doing something really weird?

Karissa: No, she was just oh I wonder, you know what? I wanna tell the story of my own birth and Okay.

Ben: Okay, but why is your relate birth more? Listen, it's like every, I need more details. Why is your birth more important? It's, everybody wants lots of babies. Write I memoir like one of three ways or something.

Devindra: Yeah, it's about everybody who wants to write a memoir. It's kinda the same thing. Kinda that same navel-gazing thing.

The project's just called Ancestra. I'm gonna play a bit of a trailer here. I remember seeing this, it reminds me of that footage, I dunno if you guys remember seeing Look Who's Talking for the very first time or something, or those movies where they showed a lot of things about how babies are made.

And as a kid I was like, how'd they make that, how'd that get done? They're doing that now with AI video and Ancestra, this whole project. It is kinda sad because Aronofsky is one of my favorite directors. When he is on, he has made some of my favorite films, but also he's a guy who has admittedly stolen ideas and concepts from people like Satoshi Kon, as in specific framing of scenes and things like that.

Scenes in Requiem for a Dream are in some of Kon's movies as well. So I guess it's to be expected, but it is sad, because Hollywood as a whole, the unions certainly, do not like AI video. There was a story about James Earl Jones' voice being used as Darth Vader in Fortnite. In Fortnite, yeah.

Which is something we knew was gonna happen because Disney licensed the rights to his voice before he died from his estate. He went in and recorded lines to at least create a better simulation of his voice. But people are going out there making that Darth Vader swear and say bad things in Fortnite, and the WGA, or is it SAG?

It's probably SAG. But, like, the unions are pissed off about this because they did not know this was happening ahead of time and they're worried about what this could mean for the future of AI talent. Flow looks interesting. I keep seeing people play with it. I made a couple videos, asked it to make, hey, show me three cats living in Brooklyn with a view of the Manhattan skyline or something.

And it did that, but the apartment it rendered didn't look fully real. It had like weird heating things all around. And also apparently, if you just subscribe to the basic plan to get access to Flow, you can use Flow, but that's using the Veo 2 model. So older AI model. To get Veo 3 again, you have to pay a month.

So maybe that'll come down in price eventually. But we shall see. The thing I really want to talk with you about, Karissa, is like, what the heck is happening with Android XR? And that is a weird project for them because I was writing up the news and they announced like a few things.

They were like, hey, we have a new developer release to help you build Android XR apps. But it wasn't until the actual I/O show that they showed off more of what they were actually thinking about. And you got to test out a pair of prototype Google XR glasses powered by Android XR. Can you tell me about that experience, and just how does it differ from the other XR things you've seen? You've seen Meta's, you saw one from Snap, right?

Karissa: Meta, I've seen Snap. Yeah. Yeah. I've seen the Xreal. Yeah, some of the other smaller companies I got to see at CES. Yeah, that was like a bit of a surprise. I know that they've been talking about Android XR for a while. I feel like it's been a little more in the background. So they brought out these glasses and the first thing that I noticed about them was like, they were actually pretty small and like normal looking compared to Meta Orion or like the Snap Spectacles.

Like these were very thin which was cool. But the display was only on one side. It was only on one lens. They called it like a monocular display. So there's one lens on one side. So it's basically just like a little window, very small field of view.

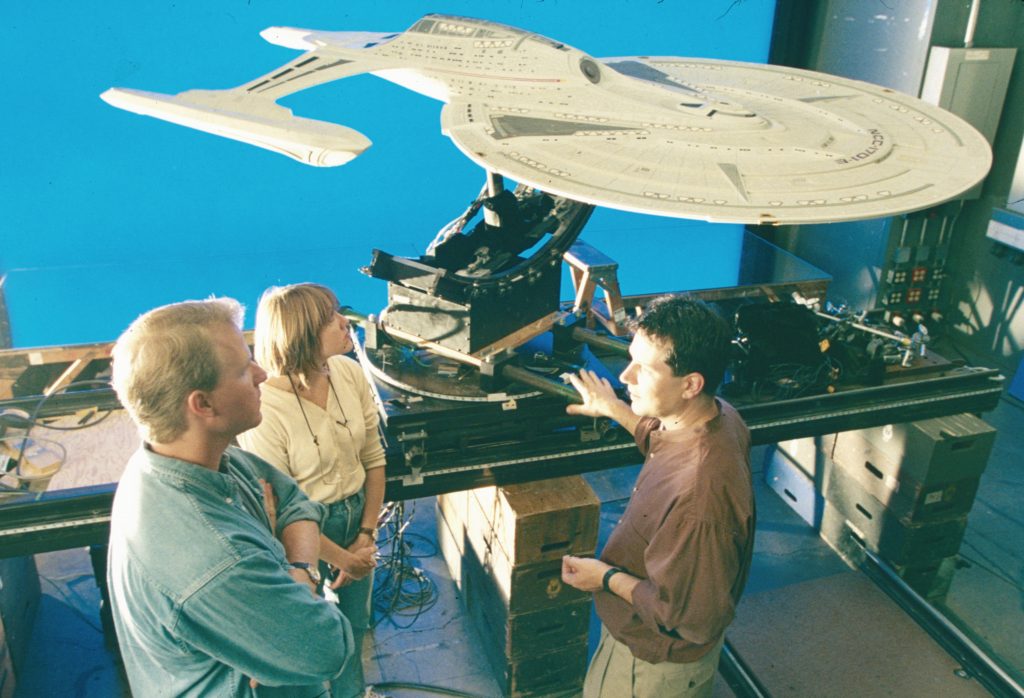

Devindra: We could see it, if you go to the picture on top of Karissa's hands-on piece, you can see the frame of what that lens would be. Yeah.

Karissa: Yeah. And I noticed even when we were watching that demo video that they did on stage that the field of view looked very small. It was even smaller than Snap's, which is 35 degrees. Like this, if I had to guess, I'd say it's maybe like around 20.

They wouldn't say what it was. They said, this is a prototype, we don't wanna say. The way I thought about it, the way I compared it in my piece, was like the front screen on a foldable phone. So you can get notifications and you can like glance at things, but it's not fully immersive AR. It's not surrounding your space and like really changing your reality in the way that Snap and Meta are trying to do. Later, when I was driving home, I realized a better comparison might be the heads up display in your car.

Speaker: Yeah. Yeah.

Karissa: If you have a car that has that little HUD where you can see how fast you're going and directions and stuff like that.

Devindra: That's what Google Glass was doing too, right? Because that was a little thing off to the side of your vision. That was never a full takeover-your-vision type of thing.

Karissa: Yeah. It's funny, that's what our editor Aaron said when he was editing my piece, he was like, oh, this sounds like Google Glass.

And I'm like, no, actually, it is better than that. These are like normal looking glasses. I tried Google Glass many years ago. Like the fidelity was better. Actually I was thinking it feels like a happy medium almost between Meta Ray-Bans and like full AR. Yeah, like I've had Meta Ray-Ban glasses for a long time and people always ask me, like when I show it to someone, they're like, oh, that's so cool.

And then they go, but you can see stuff, right? There's a display? And I'm like, no. These are just glasses with the speaker. And I feel like this might be a good kind of in-between thing because you have a little bit of display, but they still look like glasses. They're not bulky 'cause they're not trying to do too much. One thing I really liked is that when you take a photo, you actually get a little preview of that image that floats onto the screen, which was really cool because it's hard to figure out how to frame pictures when you are using the camera on your smart glasses.

So I think there's some interesting ideas, but it's very early. Obviously they want like Gemini to be a big part of it. The Gemini stuff. Was busted in my demo.

Devindra: You also said they don't plan on selling these are like purely, hey, this is what could be a thing. But they're not selling these specific glasses, right?

Karissa: Yeah, these specific ones are like, this is a research prototype. But they did also announce a partnership with Warby Parker and another glasses company. So I think you can see them trying to take a Meta approach here, which actually would be pretty smart, to say let's partner with a known company that makes glasses, they're already popular. We can give them our tech expertise. They can make the glasses look good and maybe we'll get something down the line. I actually heard a rumor that the prototype was manufactured by Samsung.

They wouldn't say.

Devindra: Of course it's Samsung. Samsung wants to be all over this. Samsung is the one building the full-on Android XR headset, which is a sort of Vision Pro copycat. Like it is Moohan. Yeah, Moohan. It is displays with the passthrough camera. That should be coming later this year.

Go ahead Ben.

Ben: Yeah. Question for Karissa. When Sergey Brin was talking about Google Glass, did that happen before or after the big demo for the Google XR glasses?

Karissa: That was after. That was at the end of the day. He was a surprise guest in this fireside chat with the DeepMind CEO. And yeah, we were all wondering about that.

'Cause we all, Dev probably remembers this very well, when Google Glass came out and Sergey and the skydivers wearing them into I/O. Yeah.

Speaker: Yep.

Karissa: And then, now for him to come back and say we made a lot of mistakes with that product and.

Ben: But was it mistakes or was it just the fact that like technology was not there yet? Because he was talking about like the consumer electronics supply chain, blah, blah, blah, blah, blah.

Devindra: He's right that the tech has caught up with the vision of what they wanted to do, but also I think he fundamentally misread, like, people will see you looking like the goddamn Borg and want to destroy you. They will turn into Captain Picard and be like, I must destroy whoever is wearing Google Glass because this looks like an alien trying to take over my civilization.

And the thing that Meta did right, that you've seen, Karissa, is that they make 'em look like normal glasses and, yeah, nobody will know.

Ben: Karissa does not look entirely human in this picture either.

Karissa: Yes. But listen from, if you see 'em straight on, they don't, they look transparent. That was I used that photo because I was trying to.

Devindra: You get the angle, show the display.

Karissa: Yeah.

Devindra: Yeah. There's another one, like, this looks normal. This looks totally normal. The glasses themselves look like typical hipster glasses. Like they're not like a super big frame around them. The arms seem big. The arms seem wider than a typical pair of glasses, but you wouldn't know that 'cause it's covered in your hair.

A lot of people won't notice glasses, arms as much.

Ben: Yeah,

Devindra: That is cool.

Ben: The issue still is that all of these frames are so chunky. And it's because you need to hide all of the internals and everything, but you're not gonna get like the beautiful, thin Japanese titanium anytime soon. No, because this stuff needs to shrink way more.

Devindra: Those are not the kind of frames they are. I will say I had a meeting with, I believe, the CEO of Xreal. I did talk to them at CES, so they had like a lot of ideas about that. I talked to the head of Spacetop, which is the company that was doing the sort of AR laptop thing.

And then they gave up on that idea because AI PCs have the NPUs that they need to do that stuff. And they're all in on the idea that more people will want to use these sorts of glasses. Maybe not all the time, but for specific use cases. Something that covers your field of vision more.

Could be a great thing when you sit down at your desk. I could see people doing this. I could see people getting these glasses. I don't know if it's gonna be good for society, right? It feels like when Bluetooth headsets were first popping up and everybody hated those people, and you're like, oh, we must shun this person from society.

This one, you can't quite see the screen. So you can pretend to be a normal human and then have this like augmented ability next to you. If they can hide that, if they can actually hide the fact that you have a display on your glasses that would help people like me who are face blind and I walk around I don't, I know this person.

I've seen them before. What is their name? What is their name? I could see that being useful.

Ben: On the other side of it though, if you have one standard look for glasses like this, then you know, oh, this person is also interacting with like information and stuff that's popping up in front of their eyes.

It's a universal signifier, just like having a big pair of headphones is.

Devindra: I think you will see people looking off to the distance. Karissa, did you notice that your eye line was moving away from people you were talking to while you were wearing these?

Karissa: Yeah, and that was also one of the issues that I had, was that the actual display didn't quite render right. I'm not a farsighted person, but I actually had to look farther off in the distance to actually get my eyes to focus on it. And I asked 'em about that and they're like, oh, it's a prototype.

It's not quite dialed in. They weren't calibrating these things to your eyeballs. Like the way when I did the Meta Orion demo, they have to take these specific measurements because there's eye tracking and all these things and this, didn't have any of that. There. Yeah, there definitely was.

You're, somebody's talking to you, but you're looking over here.

Devindra: That's not great. That's not great for society. You're having a conversation with people. I like how they're framing this: oh yes, you can be more connected with reality, 'cause you don't have a phone in front of your face. Except you always have another display in front of your face, which nobody else can see, and you're gonna look like an alien walking around.

They showed some videos of people using it for like street navigation. Which I kinda like. You're in a new city, you'll see the arrows and where to turn and stuff. That's useful. But there is this, there was one that was really overwrought. It was a couple dancing at Sunset, and the guy is take a picture of this beautiful moment of the sun peeking through behind, my lady friend.

And it just felt like that's what you wanna do in that moment. You wanna talk to your virtual assistant while you should be enjoying the fact that you are having this beautiful dancing evening, which nobody will ever actually have. So that's the whole thing. I will say my overall thoughts on this stuff, like just looking at this, the stuff they showed before they actually showed us the glasses, it doesn't feel like Google is actually that far in terms of making this a reality.

Karissa, like I'm comparing it to where Meta is right now, and even where Apple is right now. Like when Apple showed us the Vision Pro, we were able to sit down and I had a 30 minute demo of that thing working, and I saw the vision of what they were doing and they thought a lot about how this was.

How long was your demo with this thing?

Karissa: I was in the room with them for about five minutes and I had them on for about three minutes myself. That's not a demo. That's not a demo.

Ben: Oh, goodness. So all of these pictures were taken in the same 90 seconds? Yes. Yeah. God. That's amazing.

Devindra: It's amazing you were able to capture these impressions, Karissa.

Yeah,

Karissa: I will say that they did apparently have a demo in December, a press event in December where people got to see these things for a lot longer, but it was, they could not shoot them at all. We, a lot of us were wondering if that was why it was so constrained. They only had one room, there's hundreds of people basically lining up to try these out.

And they're like very strict. You got five minutes, somebody's in there like after a couple minutes, rushing you out, and we're like, okay. Like

Devindra: They clearly only have a handful of these. That's like the main reason this is happening. This is the company that did Google Glass, and that was too early and also maybe too ambitious.

But also don't forget Google Cardboard, which was a fun little project of getting phone-based VR happening. Daydream VR, which was their self-contained headset, which was cool. That was when Samsung was doing the thing with Meta as well, or with Oculus at the time. And they gave up on those things.

Completely. And Google's not a company I trust with consumer hardware in general. So I don't think there is a huge future in Android XR, but they wanna be there. They wanna be where Meta is and where Apple is, and we shall see. Anything else you wanna add about I/O, Karissa?

Karissa: No, just that AI. AI, AI, AI.

Devindra: AI, AI, I/O. Starline, the thing that was like a weird 3D rendering teleconferencing video, that is becoming a real thing. That's turning into Google Beam video. But it's gonna be an enterprise thing. They're teaming up with HP to bring a scaled-down version of that to businesses.

I don't think we'll ever see it. That's one of those things where it's, oh, this exists in some corporate offices who will pay for this thing, but normal people will never interact with this thing, so it practically just does not exist. So we shall see. Anyway, stay tuned, we're gonna have more demos of the Gemini stuff.

We'll be looking at the new models, and certainly Karissa and I will be looking hard at Android XR and wherever the heck that's going.

Let's quickly move on to other news. And I just wanna say there were other events. Computex: we wrote up a whole bunch of laptops. AMD announced a cheaper Radeon graphics card. Go check out our stories on that stuff. Build: I wrote one. I got a 70 page book of news from Microsoft about Build, and 99% of that news just does not apply to us because Build is so fully a developer coding conference. Hey, there's more Copilot stuff. There's a Copilot app coming to 365 subscribers, and that's cool, but not super interesting. I would say the big thing that happened this week and that surprised a lot of us is the news that OpenAI has bought Jony Ive's design startup for six and a half billion dollars. This is a wild story, which is also paired with a weird picture. It looks like they're getting married. It looks like they're announcing their engagement over here because Jony Ive is just leaning into him. Their heads are touching a little bit.

It's so adorable. You're not showing

Ben: the full website though. The full website has like a script font. It literally looks, yeah, like something from The Knot.

Devindra: Is it? Yeah. Let's look at it here. "Sam and Jony introduce io. This is an extraordinary moment. Computers are now seeing, thinking, understanding." Please come to our ceremony at this coffee shop.

For some reason, they also yeah, so they produced this coffee shop video to really show this thing off and, it is wild to me. Let me pull this up over here.

Ben: While we're doing that, Karissa, what do you have to say about this?

Karissa: I'm trying to remember. So I know this is Jony Ive's, like, AI, because he also has, like, LoveFrom, which is still

Devindra: This is LoveFrom. So he is, let me get the specifics of the deal out here.

Yeah. As part of the deal, Ive and his design studio LoveFrom. Is it LoveForm or LoveFrom? LoveFrom. Yeah. LoveFrom are gonna work independently of OpenAI. But Scott Cannon, Evans Hankey and Tang Tan, who co-founded io. This is another io. I hate these. Yeah, so io is his AI

Karissa: focused design thing.

And then LoveFrom is like his design

Devindra: studio thing.

Karissa: Sure. Yeah. I'm just, he

Devindra: has two design things.

Karissa: I'm trying to remember what they've done. I remember there was like a story about they made like a really expensive jacket with some weird buttons or something like

Devindra: Yep. I do remember that.

Karissa: I was just trying to rack my brain of what Jony Ive has really done in his post-Apple life. I feel like we haven't, he's made

Devindra: billions of dollars, of course. That's what's happened. Yes. Because he is now still an independent man. Clearly he's an independent contractor, but, like, the other side, io,

which includes those folks, they will become OpenAI employees alongside 50 other engineers, designers, and researchers. They're gonna be working on AI hardware. It seems like Jony Ive will come in with, like, ideas, but this is not quite a marriage. He's not quite committing. He's just taking the money and being like, you can have part of my AI startup for six and a half billion dollars.

Ben: Let us know your taxes. It's all equity though, so this is all paper money. Six and a half billion dollars of, like, OpenAI's crazy valuation. Who knows how much it's actually going to be worth. But all these people are going to sell a huge chunk of stock as soon as OpenAI goes public anyway.

So it's still gonna be an enormous amount of money.

Devindra: Let me see here, the latest thing. OpenAI has raised 57.9 billion of funding over 11 rounds. Good Lord. Yeah. So anyway, a big chunk of that is going to this thing, because I think what happened is that Sam Altman, he clearly just wants to be Steve Jobs.

I think that's what's happening here. All of you, go look at the announcement video for this thing, because it is one of the weirdest things I've seen. It is Jony Ive walking through San Francisco, Sam Altman walking through San Francisco with his hands in his pockets. There's a whole lot of setup to these guys meeting in a coffee shop, and then they sit there at the coffee shop like normal human beings, and then have an announcement video talking to nobody.

They're just talking to the middle of the coffee bar. I don't know who they're addressing. Sometimes they refer to each other and sometimes they refer to camera, but they're never looking at the camera. This is just a really wild thing. Also, yet another thing that makes me believe, I don't think Sam Altman is a real human boy.

I think there is actually something robotic about this man, because I can't see him actually perform in real life. What they're gonna do? They reference vagaries, that's all it is. We don't know what exactly is happening. There is a quote from Jony Ive, and he says, quote, the responsibility that Sam shares is honestly beyond my comprehension, end quote.

Responsibility of what? Just building this, like, giant AI thing. Sam Altman, for humanity. Yeah, for humanity. Like, just unlocking expertise everywhere. Sam Altman says he has some sort of AI device and it's changed his life. We don't know what it is. We dunno what they're actually working on. They announced nothing here.

But Jony Ive is very happy because he has just made billions of dollars. He's not getting all of that money, but I think he's very pleased with this arrangement. And Sam Altman seems pleased that, oh, the guy who designed the iPhone and the MacBook can now work for me. And Jony Ive also says the work here at OpenAI is the best work he's ever done.

Sure. You'd say that. Sure. By the way.

Karissa: Sure. What do you think Apple thinks about all this?

Devindra: Yeah,

Karissa: their AI program is flailing, and, like, their star designer who, granted, separated from Apple a while ago, is now teaming up with Sam Altman for some future computing AI hardware, where, like, they can't even get AI Siri to work.

That must be like a gut punch for folks maybe on the other side of it though. Yeah, I

Ben: don't think it's sour grapes to say. Are they going into the, like, Friend, like, Friend isn't even out yet, but like the Humane pin? Yes. Or any of the other AI sidekick sort of things like that, which has already crashed and burned spectacularly twice.

Devindra: I think Apple may have dodged a bullet here, because the only reason Jony Ive is working on this thing is because OpenAI had put some money into LoveFrom or io years ago too. So they already had some sort of collaboration and he's just, okay, people are interested in the AI.

What sort of, like, beautiful AI device can I build? The thing is, Jony Ive unchecked as a designer leads to maddening things like the Magic Mouse, that charges from the bottom. Butterfly

Karissa: keyboard,

Devindra: any butterfly keyboard. Yeah, that's beautiful, but not exactly functional. Jony Ive always worked best when

he had the opposing force of somebody like a Steve Jobs who could be like, no, this idea is crazy. Or rein it in, or be more functional. Steve Jobs, not a great dude in many respects, but at the very least, he was able to hone in on product ideas and think about how humans use products a lot. I don't think Jony Ive on his own can do that.

I don't think Sam Altman can do that, because this man can barely sit and have a cup of coffee together like a human being. So, whatever this is. I honestly, Karissa, I feel like Apple has dodged a bullet because this is jumping into the AI gadget trend. Apple just needs to get the software right, because they have the devices, right?

We're wearing Apple Watches. People have iPhones, people have MacBooks. What they need to do is solidify the infrastructure, the AI smarts between all those devices. They don't need to go out and sell a whole new device. This just feels like OpenAI is a new company and they can try to make an AI device a thing.

I don't think it's super compelling, but let us know listeners, if any of this, listen to this chat of them talking about nothing. Unlocking human greatness, unlocking expertise just through ai, through some AI gadget. I don't quite buy it. I think it's kind of garbage, but yeah.

Ben: Anything else you guys wanna say about this?

This is coming from the same guy who, when he was asked in an interview what college students should study, he said Resilience.

Karissa: Yeah. I just think all these companies want to make the thing that's the next iPhone. Yes. They can all just stop relying on Apple. It's the thing that Mark Zuckerberg has with all of their hardware projects. Which, by the way, one of the stories said that Jony Ive's thing has been maybe working on some kind of

earbuds with cameras on them, which sounded very similar to a thing that has been rumored about Meta for a long time. And also Apple,

Devindra: like there, there were rumors about AirPods with head with

Karissa: cameras. Yeah. And everyone's just I think trying to like, make the thing that's like not an iPhone that will replace our iPhones, but good luck to them, good, good

Devindra: luck to that because I think that is coming from a fundamentally broken, like it's a broken purpose. The whole reason doing that is just try to outdo the iPhone. I was thinking about this, how many companies like Apple that was printing money with iPods would just be like, Hey we actually have a new thing and this will entirely kill our iPod business.

This new thing will destroy the existing business that is working so well for us. Not many companies do that. That's the innovator's dilemma that comes back and bites companies in the butt. That's why Sony held off so long on jumping into flat screen TVs because they were the world's leader in CRTs, in Trinitron, and they're like, we're good.

We're good into the nineties. And then they completely lost the TV business. That's why Toyota was so slow to EVs, because they're like, hybrids are good to us. Hybrids are great. We don't need an EV for a very long time. And then they released an EV where the wheels fell off. So it comes for everybody.

I dunno. I don't believe in these devices. Let's talk about something that could be cool. Something that is a little unrealistic, I think, but for a certain aesthetic it is cool. Fujifilm announced the X half today. It is a digital camera with an analog film aesthetic. It shoots in a three-by-four portrait aspect ratio.

That's the Instax Mini ratio. It looks like an old-school Fuji camera. This thing is pretty wild because of the screen, it's only making those portrait videos. One of the key selling points is that it can replicate some things you get from film. There's a light leak simulation for when you, like, overexpose film a little bit, a halation effect, and that's something

Ben: that Fujifilm is known for.

Devindra: Yes. They love that. They love these simulation modes. This is such a social media kid camera, especially for the people who cannot afford Fujifilm's compact cameras. Wow. Even the

Ben: screen is do you wanna take some vertical photographs for your social media? Because vertical video has completely won.

Devindra: You can't, and it can take video, but it is just, it is a simplistic living little device. It has that, what do you call that? It's that latch that you hit to wind film. It has that, so you can put it into a film photograph mode where you don't see anything on the screen. You have to use the viewfinder.

To take pictures, and it starts a countdown. You could tell it to do, like, a film roll number of pictures, and you have to click through to take your next picture. It's the winder, you can wind to the next picture. You can combine two portrait photos together. It's really cool. It's really cute.

It's really unrealistic, I think, for a lot of folks, but hey, social media kids, like influencers, the people who love to shoot stuff for social media and vertical video. This could be a really cool little device. What do you guys think about this?

Karissa: You know what this reminds me of? Do you remember, like, in the early Instagram days when there were all these apps, like Hipstamatic, where they tried to emulate, like, film aesthetics?

And some of them would do these same things where like you would take the picture but you couldn't see it right away. 'cause it had to develop. And they even had a light leak thing. And I'm like, now we've come full circle where the camera companies are basically like yeah. Taking or like just doing their own.

Spin on that, but

Devindra: it only took them 15 years to really jump on this trend. But yes, everybody was trying to emulate classic cameras and Fuji was like, oh, you want things that cost more but do less. Got it. That's the Fujifilm X half. And I think this thing will be a huge success. What you're talking about, Karissa, there is a mode where it's just, yeah.

You won't see the picture immediately. It has to develop in our app and then you will see it eventually. That's cool honestly, like I love this. I would not, I love it. I would not want it to be my main camera, but I would love to have something like this to play around when you could just be a little creative and pretend to be a street photographer for a little bit.

Oh man. This would be huge in Brooklyn. I can just,

Ben: Tom Rogers says cute, but stupid tech. I think that's the perfect summary.

Devindra: But this is, and I would say this compared to the AI thing, which is just like, what is this device? What are you gonna do with it? It feels like a lot of nothing and vagary.

Whereas this is a thing you hold, it takes cool pictures and you share it with your friends. It is such a precise thing, even though it's very expensive for what it is. I would say if you're intrigued by this, you can get cheap compact cameras, get used cameras. I only ever buy refurbished cameras.

You don't necessarily need this, but, oh man, very, but having a

Karissa: Fujifilm camera is a status symbol anyway. So I don't know. At $850 it still seems like a little steep for a little toy camera, basically. But also, I see that and I'm like, ooh, that looks nice.

Devindra: Yeah. It's funny, the PowerShots that kids are into now from, like, the two thousands, those used to cost like 200 to 300 bucks and I thought, oh, that is a big investment in a camera. Then I stepped up to the Sony mirrorless cameras, which were like 500 to 600 or so. I'm like, okay, this is a bigger step up than even that.

Most people would be better off with a mirrorless, but also those things are bigger than this tiny little pocket camera. I dunno. I'm enamored with this whole thing. Also briefly, in other news, we saw that apparently Netflix is the one that is jumping out to save Sesame Street. Sesame Street will air on Netflix and PBS simultaneously.

That's a good, that's a good thing because there was previously a delay when HBO was in charge. Oh really? Yeah. They would get the new episodes and there was like, I forget how long the delay actually was, but it would be a while before new stuff hit PBS. This is just Hey, I don't love that so much of our entertainment and pop culture it, we are now relying on streamers for everything and the big media companies are just disappointing us, but.

This is a good move. I think Sesame Street should stick around, especially with federal funding being killed left and right for public media like this. This is a good thing. Sesame Street is still good. My kids love it. When my son starts leaning into, like, his Blippi era, I just kinda slowly tune that out.

Here's some Sesame Street. I got him into Pee-wee's Playhouse, which is the original Blippi. I'm like, yes, let's go back to the source. Because Pee-wee was a good dude. He's really, and that show still holds up. That show is so much fun. Like, a great introduction to camp for kids. Great introduction to, like, also

diverse neighborhoods, just like Sesame Street as well. Pee-wee was, or Mr. Rogers was doing

Ben: it before. I think everyone,

Devindra: Mr. Rogers was doing it really well too. But Pee-wee was always something special because Pee-wee's wild. Laurence Fishburne was on Pee-wee. There's just a lot of cool stuff happening there.

Looking back at it now as an adult, it is a strange thing. To watch, but anyway, great to hear that Sesame Street is back. Another thing, not so quick.

Ben: Yeah, let me do this one. Go ahead, if I may. Go ahead. So if you have any trouble getting audio books on Libby or Hoopla or any of the other interlibrary loan systems that you can like access on your phone or iPad any tablet.

That's because of the US government, because a while ago the Trump administration passed yet another executive order saying that they wanted to cut a bunch of funding to the Institute of Museum and Library Services, the IMLS, and they're the ones who help circulate, big quotation marks there just because it's digital files, all of these things from interlibrary loans.

So you can, get your audio books that you want. The crazy thing about this is that the IMLS was created in 1996 by a Republican controlled Congress. What's the deal here, guys? There's no waste, fraud and abuse, but if you have problems getting audio books, you can tell a friend or if anybody's complaining about why their, library selection went down.

By a lot on Libby recently, now you have the answer.

Devindra: It is truly sad. A lot of what's happening is just to reduce access to information because, hey, a well-informed population is dangerous to anybody in charge, right? Terrible news. Let's move on to stuff that's happening around Engadget.

I wanna quickly shout out that Sam Rutherford has reviewed the ASUS ROG Flow Z13. This is the sort of Surface-like device. That's cool. This has the Ryzen AI Max chip. Sam seems to like it, so it's a cool thing. Not exactly stealthy. He gave it a 79, which is right below the threshold we have for recommending new products, because this thing is expensive.

You're paying a lot of money to essentially get a gaming tablet. But I tested it out at CES. It is cool that it actually worked. For a certain type of person with too much money and who just needs the lightest gaming thing possible, I could see it being compelling. Let's see, what is the starting price?

for a gaming tablet. Sam says it costs the same or more as a comparable ROG Zephyrus G14 with a real RTX 5070. That is a great laptop. The ROG Zephyrus G14, we have praised that laptop so much. So this is not really meant for anybody. ASUS loves to do these experiments. They're getting there, they're getting there in terms of creating a gaming tablet, but not quite something I'd recommend for everybody at this point.

All right. We have a quick email from a listener too. Thank you for sending this in, Jake Thompson. If you wanna send us an email, it's podcast@engadget.com, and again, your emails may head into our Ask Engadget section. Jake asks: he's a real estate agent in need of a new laptop. He uses a Chromebook right now and it meets every need he has.

Everything they do is web-based, but should they consider alternatives to a premium Chromebook for their next computer? He says he doesn't mind spending or more if he can get something lightweight, trustworthy, with a solid battery life. What would we consider in the search? I would immediately point Jake to our laptop guides, because literally everything we mention, the MacBook Air,

the ASUS Zenbook S 14, even the Dell XPS 13, would be not much more than that price. I think more useful than a premium Chromebook, because I think the idea of a premium Chromebook is insanity. I don't know why you're spending so much money for a thing that can only do web apps. Cheap Chromebooks, mid-range Chromebooks, fine, or less.

Great. But if you're spending that much money and you want something that's more reliable, that you could do more with, even if everything you're doing is web-based, there may be other things you wanna do. MacBook Windows laptop. There is so much more you can unlock there. Little bit, a little bit of gaming, a little bit of media creation.

I don't know, Karissa. Ben, do you have any thoughts on this? What would you recommend or do, would you guys be fine with the Chromebook?

Karissa: I like Chromebooks. I thought my first thought, and maybe this is like too out there, but would an iPad Pro fit that fit those requirements? 'cause you can do a lot with an iPad Pro.

You

Devindra: can do a lot that's actually great battery,

Karissa: lightweight, lots of apps. If most everything he's doing is web based, there's. You can probably use iPad apps.

Devindra: That's actually a good point, Karissa. You can do a lot with an iPad, and the iPad Pro does start at around this price too. So it would be much lighter and thinner than a laptop.

Especially if you could do a lot of web stuff. I feel like there are some web things that don't always run well in an iPad form. Safari on iPad doesn't support, like, everything you'd expect from a web-based site. There are things we use, like we use VDO.Ninja to record podcasts, and that's using WebRTC.

Sometimes there are things like Zencastr, where you have to use apps to go use those things because iOS, iPadOS is so locked down. Multitasking isn't great on iPadOS. But yeah, if you're not actually doing that much and you just want a nice media device, an iPad is a good option too. Alright, thank you so much, Jake Thompson.

That's a good one too, because I wanna hear about people moving on from Chromebooks. Send us more emails at podcast@engadget.com for sure. Let's just skip right past what we're working on 'cause we're all busy. We're all busy with stuff, unless you wanna mention anything. Karissa, anything you're working on at the moment?

Karissa: The only thing I wanna flag is that we are rapidly approaching another TikTok sale or ban deadline. Yes. Next month.

Speaker: Sure.

Karissa: Been a while since we heard anything about that, but, I'm sure they're hard at work on trying to hammer out this deal.

Ben: Okay. But that's actually more relevant because they just figured out maybe the tariff situation and the tariff was the thing that spoiled the first deal.

So we'll see what happens like at the beginning of July, yeah. I think

Karissa: The deadline's the 19th of June

Ben: oh, at the beginning of June. Sorry.

Karissa: Yeah, so it's. It's pretty close. And yeah, there has been not much that I've heard on that front. So

Devindra: this is where we are. We're just like walking to one broken negotiation after another for the next couple years.

Anything you wanna mention, pop culture related, Karissa, that is taking your mind off of our broken world?

Karissa: So this is a weird one, but I have been, my husband loves Stargate, and we have been for years through, wait, the movie, the TV shows, Stargate SG-1. Oh

Devindra: God. And I'm yeah. Just on the

Karissa: last few episodes now in the end game portion of that show.

So that has been I spent years like making fun of this and like making fun of him for watching it, but that show's

Devindra: ridiculously bad, but yeah. Yeah.

Karissa: Everything is so bad now that it's, actually just a nice. Yeah. Distraction to just watch something like so silly.

Devindra: That's heartwarming actually, because it is a throwback to when things were simpler. You could just make dumb TV shows and they would last for 24 episodes per season. My for how

Ben: many seasons too,

Devindra: Karissa?

Karissa: 10 seasons.

Devindra: You just go on forever. Yeah. My local lamb and rice place, my local place that does essentially New York street cart-style food, they play Stargate SG-1.

Every time I'm in there and I'm sitting there watching, I was like, how did we survive with this? How did we watch this show? It's because we just didn't have that much. We were desperate for genre fiction. But okay, that's heartwarming, Karissa. Have you guys done Farscape? No. Have you seen Farscape?

'Cause Farscape is a very similar type of show, but it has Jim Henson puppets and it has better writing. I love Jim Henson. It's very cool. Okay. It's also, unlike Stargate, it also dares to be, like, I don't know, sexy and violent too. Stargate always felt too campy to me. But Farscape was great.

I bought that for On iTunes, so that was a deal. I dunno if that deal is still there, but the entire series plus the post-series stuff is all out there. Shout out to Farscape. Shout out to Stargate SG-1. Simpler times. I'll just really briefly run down a few things. Andor season two finished over the last week.

Incredible stuff. As I said in my initial review, it is really cool to see people watching this thing and just being blown away by it. And I will say the show brought me to tears at the end, and I did not expect that. I did not expect that because we know this guy's gonna die. We know his fate, and yet it still means so much and it's so well written, and the show is a phenomenon.

Karissa, I'd recommend it to you when you guys are recovering from the Stargate SG-1 loss. Andor is fantastic. I also checked out a bit of Murderbot, the Apple TV+ adaptation of the Martha Wells books. It's fine. I would say it is funny and entertaining because Alexander Skarsgård is a fun person to watch in genre fiction.

But it also feels like this could be funnier, this could be better produced. Like, you could be doing more with this material, and it feels just lazy at times too. But it's a fine distraction if you are into, like, half-baked sci-fi. So I don't know. Another recommendation for Stargate SG-1 lovers, Karissa: Final Destination Bloodlines.

I reviewed it over at The Filmcast and I love this franchise. It is so cool to see it coming back after 15 years. This movie is incredible. Like, this movie is great. If you understand the Final Destination formula, it's even better because it plays with your expectations of the franchise. I love a horror franchise where there's no definable villain.

You're just trying to escape death. There's some great setups here. This is a great time at the movies. Get your popcorn. Just go enjoy the wonderfully creative kills. And shout out to Zach Lipovsky and Adam B. Stein, who apparently were listening to my other podcast and now are making good movies.

So that's always a fun thing to see. Final Destination Bloodlines, a much better film than Mission: Impossible - The Final Reckoning. My review of that is on the website now too. You can read that on Engadget.

Ben: Thanks everybody for listening. Our theme music is by game composer Dale North. Our outro music is by our former managing editor, Terrence O'Brien. The podcast is produced by me, Ben Ellman. You can find Karissa online at

Karissa: Karissa b on threads Blue Sky, and sometimes still X.

Ben: Unfortunately, you can find Devindra online

Devindra: At Devindra on Bluesky, and also podcasting about movies and TV at The Filmcast, at thefilmcast.com.

Ben: If you really want to, you can find me at hey bellman on Bluesky. Email us at podcast@engadget.com. Leave us a review on iTunes and subscribe on anything that gets podcasts. That includes Spotify.

This article originally appeared on Engadget at


Leave us a review on iTunes, drop us email at podcast@enggadget.com.Those emails, by the way, if you ask a good question, it could end up being part of our Ask Engadget section, so that's something we're starting out. I have another good one. I'll be throwing to asking Eng gadgets soon. So send us your emails podcast@enggadget.com, Google io.

It's all about ai, isn't it? I feel like Karissa, we were watching the keynote for this thing and it felt like it went on and on of the thing about the things, like we all pretty much expect more about Gemini ai, more about their newer models a bit about xr. Can you give me, what's your overall impression of IO at this point?

Karissa: Yeah, it's interesting because I've been covering IO long enough that I remember back when it used to be Android. And then there'd be like that little section at the end about, AI and some of the other stuff. And now it's completely reversed where it's entirely AI and basically no Android to the point where they had a whole separate event with their typical Android stuff the week before.

So it didn't have to go through and talk about any of yeah, the mobile things.

Devindra: That was just like a live stream that was just like a chill, live stream. No realeffort put into it. Whereas this is the whole show. They had a, who was it? But they had TOIs. TOIs, yeah. They had actual music which is something a lot of these folks do at keynotes.

It's actually really disconcerting to see cool musicians taking the corporate gig and performing at one of these things. I think, it was like 20 13, 20 14, maybe the Intel one, IDF or something. But the weekend was there. Just trying to jam to all these nerds and it was sad, but yeah. How was the experience Karissa like actually going there?

Karissa: Yeah, it was good. That keynote is always kind of a slog. Just, live blogging for our almost two hours straight, just constant is it's a lot. I did like the music. Towa was very chill. It was a nice way to start much. I preferred it over the crazy loop daddy set we got last year.

If anyone remembers that.

Devindra: Yeah.

Ben: Yeah. Oh, I remember that. Mark Rub was at audio. That was so weird.

Devindra: Yeah. Yeah, it was a little intense. Cool. So what are some of the highlights? Like there, there's a bunch of stuff. If you go look on, on the site on Engadget, wehave rounded up like all the major news and that includes a couple of things like hey, AI mode, chat bot coming to search.

That's cool. We got more, I think the thing a lot of people were looking at was like Project Astra and where that's gonna be going. And that is the sort of universal AI assistant where you could hold your phone up and just ask it questions about the world. We got another demo video about that.

Which again, the actual utility of it, I'm weirded out by. There was also one video where they were just like, I'm gonna be dumb. I'm gonna pretend I'm very stupid and ask Astra, what is this tall building in front of me? And it was like a fire hydrant or something. It was like some piece of street thing.

It was not a really well done demo. Do you have any thoughts about that, Karissa? Does that seem more compelling to you now or is it the same as what we saw last year?

Karissa: I think what was interesting to me about it was that we saw Astra last year and, like, I think there was a lot of excitement around that, but it wasn't really entirely clear where that project is going. They've said it's like an experimental research thing. And then, I feel like this year they really laid out that they want to bring all that stuff to Gemini. Astra is sort of their place to like tinker with this and get all this stuff working.

But like their end game is putting this into Gemini. You can already see it a little bit in Gemini Live, which is like their multimodal feature where you can do some version of what Astra can do. And so that was interesting. They're saying, we want Gemini to be this universal AI assistant.

They didn't use the word AGI or anything like that. But I think it's pretty clear where they're going and like what their ambition is. They want this to be an all-seeing, all-knowing AI assistant that can help you with anything, is what they're trying to sell it as.

Devindra: It is weird, like we're watching the demo video and it's a guy trying to fix his bike and he is pointing his phone at like the bike and asking questions like which, which particular, I don't know. It's which particular nut do I need for this tightening thing and it's giving him good advice.

It's pointing to things on YouTube. I, I don't know how useful this will actually be. This kind of goes to part of the stuff we're seeing with AI too, of just like offloading some of the grunt work of human intelligence. Because you can do this right now; people have been YouTubing to fix things forever.

YouTube has become this like information repository of just fix-it stuff or home plumbing or whatever. And now it's just like you'll be able to talk to your phone. It'll direct you right to those videos or extract the actual instructions from those. That's cool. I feel like that's among the more useful things, more useful than like putting Gemini right into Chrome, which is another thing they're talking about, and I don't know how useful that is other than they wanna push AI in front of us, just like Microsoft wants to push Copilot in front of us at all times.

Ben: What is a situation where you would have a question about your Chrome tabs? Like I'm not one of those people that has 15 chrome tabs open at any given time, and I know that I am. Yeah, I know.

Wait, you're saying that like it's a high. Like it's high. Yeah, no, I know. So I have an abnormally low number of Chrome tabs open, but can you still come up with an idea of why you would ask Gemini anything about your own tabs open? Hopefully you have them organized. At least.

Karissa: They showed a few examples of like online shopping, like maybe you have two tabs of two different products open. And you can say

Devindra: Exactly.

Karissa: Ask Gemini to, like, compare the reviews. Or they used the example of a recipe video, a recipe blog. And maybe you wanna make some kind of modification, make the recipe gluten free. And you could ask Gemini, hey, how would I make this gluten free?

But I think you're right, like it's not exactly clear. You can already just open a new tab and go to Gemini and ask it something. So they're just trying to reduce

Devindra: Friction. I think that's the main thing. Like just the less you have to think about it, the more it's in your face. You can just always jump right to it.

It's, hey, you can Google search from your URL bar, your location bar, in any browser. We've just grown to use that, but that didn't used to be the case. I remember there used to be a separate Google field in some browsers, and it wasn't always there in every browser too. They did announce some new models.

We saw there's Gemini 2.5 Pro. There's a Deep Think reasoning model. There's also a Flash model that they announced for smaller devices. Did they show any good demos of the reasoning stuff? Because that's essentially slower AI processing to hopefully get you better answers with fewer flaws.

Did they actually show how that worked, Karissa?

Karissa: I only saw what we all saw during the keynote and I think it's, we've seen a few other AI companies do something similar where you can see it think like its reasoning process. Yeah. And see it do that in real time.

But I think it's a bit unclear exactly what that's gonna look like.

Devindra: Watching a video: oh, Gemini can simulate nature, simulate light, simulate puzzles, turn images into code.

Ben: I feel like the big thing, yeah. A lot of this stuff is from DeepMind, right? This is DeepMind, an Alphabet company.

Devindra: DeepMind, an Alphabet company. There is DeepMind. This is Deep Think, and don't confuse this with DeepSeek, which is the Chinese AI company, and they clearly knew what they were doing when they called it that. DeepSeek. But no, yeah, this is partially stuff coming out of DeepMind.

DeepMind, a company which Google has been like doing stuff with for a while. And we just have not really seen much out of it. So I guess Gemini and all their AI processes are a way to do that. We also saw something that got a lot of people,

Ben: We saw a Nobel Prize from them. Come on.

Devindra: Hey, we did see that.

What does that mean? What is that even worth anymore? That's an open question. They also showed off a new video tool called Flow, which I think got a lot of people intrigued because it's using a new Veo 3 model. So an updated version of what they've had for video effects for a while.

And the results look good. Like the video looks higher quality. Humans look more realistic. The interesting thing about Veo 3 is it can also do synchronized audio, to actually produce audio and dialogue for people too. So people have been uploading videos around this stuff online at this point, and you have to subscribe to the crazy high-end version of Google's subscription to even test out this thing at this point. That is the AI Ultra plan that costs a month. But I saw something of, yeah, here's a pretend tour of a make-believe car show. And it was just people spouting random facts. So, yeah, I like EVs. I would like an EV.

And then it looks realistic. They sound synchronized, like you could think this is a normal person. Then they just kinda start laughing at the end for no reason. Like weird little things. It's like if you see a sociopath trying to pretend to be a human for a little bit. There's real Patrick Bateman vibes from a lot of those things, so I don't know.

It's fun. It's cool. I think there's,

Ben: So didn't they announce that they also had a tool to help you figure out whether or not a video was generated by Flow?

Devindra: They did announce that too.

Ben: I've, yeah, go ahead. Go ahead.

Karissa: Yeah. SynthID, they've been working on that for a while. They talked about it last year at I/O.

That's like their digital watermarking technology. And the funny thing about this is the whole concept of AI watermarking is you put like these invisible watermarks into AI-generated content. You couldn't just see it, just watching this content.

But you can go to this website now and basically like double check if it has one of these watermarks. On one hand, I think it's important that they do this work, but I also just wonder how many people are gonna see a video and think, I wonder what kind of AI is in this.

Let me go to this other website and like double check it. Like that just,

Ben: Yeah. The people who are most likely to immediately believe it are also the least likely to go to the website and be like, I would like to double check this.

Devindra: It doesn't matter, because most people will not do it and the damage will be done.

Just having super hyper-realistic AI video, you can essentially make anything happen. It's funny that the big bad AI bad guy in the new Mission: Impossible movies, the Entity, one of the main things it does is, oh, we don't know what's true anymore because the Entity can just fabricate reality at whim.

We're just doing that. We're just doing that for, I don't know, for fun. I feel like this is a thing we should see in all AI video tools. This doesn't really answer the question that everyone's having though. It's, what is the point of these tools? Because it does devalue filmmaking, it devalues people using actual actors or going out and actually shooting something.

Did Google make a better pitch for why you would use Flow, Karissa, or how it would fit into like actual filmmaking?

Karissa: I'm not sure they did. They showed that goofy Darren Aronofsky trailer for some woman who was trying to like make a movie about her own birth, and it seemed like it was trying to be in the style of some sort of psychological thriller, but it just, I don't know, it just felt really weird to me.

I was just like, what are we watching? This doesn't, what are we watching? Yeah.

Ben: Was there any like good backstory about why she was doing that either or was it just Hey, we're doing something really weird?

Karissa: No, she was just, oh, you know what? I wanna tell the story of my own birth, and okay.

Ben: Okay, but why is your birth more? Listen, it's like, I need more details. Why is your birth more important? It's, everybody wants lots of babies. Write a memoir like one of three ways or something.

Devindra: Yeah, it's about everybody who wants to write a memoir. It's kinda the same thing. Kinda that same navel-gazing thing.

The project's just called Ancestra. I'm gonna play a bit of a trailer here. I remember seeing this. It reminds me of that footage, I dunno if you guys remember seeing Look Who's Talking for the very first time or something, or those movies where they showed a lot of things about how babies are made.

And as a kid I was like, how'd they make that, how'd that get done? They're doing that now with AI video and Ancestra, this whole project. It is kinda sad because Aronofsky is one of my favorite directors. When he is on, he has made some of my favorite films, but also he's a guy who has admittedly stolen ideas and concepts from people like Satoshi Kon, as in specific framing of scenes and things like that.

Things in Requiem for a Dream are in some of Kon's movies as well. So I guess it's to be expected, but it is sad, because Hollywood as a whole, the unions certainly, do not like AI video. There was a story about James Earl Jones' voice being used as Darth Vader in Fortnite. In Fortnite, yeah.

Which is something we knew was gonna happen because Disney licensed the rights to his voice before he died, from his estate. He went in and recorded lines to at least create a better simulation of his voice. But people are going out there making that Darth Vader swear and say bad things in Fortnite, and the WGA, or is it SAG?

It's probably SAG. But the unions are pissed off about this because they did not know this was happening ahead of time and they're worried about what this could mean for the future of AI talent. Flow looks interesting. I keep seeing people play with it. I made a couple videos. I asked it to make, hey, show me three cats living in Brooklyn with a view of the Manhattan skyline or something.

And it did that, but the apartment it rendered didn't look fully real. It had like weird heating things all around. And also, apparently, if you just subscribe to the basic plan to get access to Flow, you can use Flow, but that's using the Veo 2 model. So an older AI model. To get Veo 3, again, you have to pay a month.

So maybe that'll come down in price eventually. But we shall see. The thing I really want to talk with you about, Karissa, is like, what the heck is happening with Android XR? And that is a weird project for them, because I was writing up the news and they announced like a few things.

They were like, hey, we have a new developer release to help you build Android XR apps. But it wasn't until the actual I/O show that they showed off more of what they were actually thinking about. And you got to test out a pair of prototype Google XR glasses powered by Android XR. Can you tell me about that experience, and just how does it differ from the other XR things you've seen from, who is it from? Several. Look, you've seen Meta's, you saw one from Snap, right?

Karissa: Meta, I've seen Snap. Yeah. Yeah. I've seen the Xreal. Yeah, some of the other smaller companies I got to see at CES. Yeah, that was like a bit of a surprise. I know that they've been talking about Android XR for a while. I feel like it's been a little more in the background. So they brought out these glasses, and the first thing that I noticed about them was like, they were actually pretty small and like normal looking compared to Meta's Orion or like the Snap Spectacles.

Like these were very thin which was cool. But the display was only on one side. It was only on one lens. They called it like a monocular display. So there's one lens on one side. So it's basically just like a little window, very small field of view.

Devindra: We could see it in, if you go to the picture on top of Karissa's hands-on piece, you can see the frame of what that lens would be. Yeah.