WWW.CNET.COM
Best Internet Providers in Chapel Hill, North Carolina
If you're looking for fast speeds in Chapel Hill, you're in luck. The area is well-known for its great offerings. But which is the very best for you?

WWW.VIDEOGAMER.COM
Nintendo Switch 2 is so popular that even MicroSD cards are rapidly selling out

The reveal of the Nintendo Switch 2 may have been criticised for its launch price, but the hype is real. In the week after the console’s full reveal, gamers are buying up MicroSD Express cards to expand the new device’s storage.

Unlike the original Nintendo Switch, the upcoming successor requires a much faster MicroSD Express card for games that run exclusively on the new console. Ahead of the console’s launch, players are snapping up the faster memory cards.

Nintendo Switch 2 causes MicroSD Express cards to sell out

Via Tom’s Hardware, MicroSD Express cards are selling like hotcakes following the new console’s reveal. Although retailers are restocking quickly, many stores keep running out of the newer memory cards.

In Japan, the new memory cards quickly sold out in both physical and digital stores across the region. Despite their high price, Japanese store Hermitage Akihabara sold 337 memory cards every hour, and they’re still selling.

Impressively, stores are finding that the surge in MicroSD Express sales is organic. Rather than being driven by scalpers grabbing dozens of cards to resell at a higher price, 80% of sales include only a single memory card.

Nintendo’s surprisingly cheaper version of the new MicroSD standard is also selling well, with the My Nintendo Store frequently selling out of MicroSD Express cards worldwide.

Of course, traditional MicroSD cards will also work on the new Nintendo Switch console. However, these memory cards will only be able to play games for the original console, as Switch 2 games require faster storage speeds than their predecessors.

WWW.BLENDERNATION.COM
Best of Blender Artists: 2025-16
Every week, hundreds of artists share their work on the Blender Artists forum. I'm putting some of the best work in the spotlight in a weekly post here on BlenderNation.

WWW.VG247.COM
Diablo 4's next season, Belial's Return, will be fully revealed next week
Following a small extension to Diablo 4’s currently ongoing Season 7, Blizzard will officially kick off the game’s eighth season. We’ve known much about what to expect from this coming season - thanks to the PTR - but the developer never properly revealed it.

WWW.NINTENDOLIFE.COM
Uh Oh, It Looks Like Select Switch 2 Games Won't Support Cloud Saves

Just when you thought the messaging around Nintendo's 'Switch 2 Edition' games couldn't get any more confusing, another discovery comes along to stir things up even further. Looking at the games' store pages, it seems some upcoming titles won't support the Nintendo Switch Online Cloud Save feature. Or maybe they will; it's all a bit confusing (thanks for the heads up, Eurogamer).

As was pointed out in a recent Resetera thread by user RandomlyRandom67, the Tears of the Kingdom Nintendo Switch 2 Edition page on Nintendo's website houses the following disclaimer: "Please note: this software does not support the Nintendo Switch Online paid membership's Save Data Cloud backup feature".

You'll find the same message at the bottom of Nintendo's Donkey Kong Bananza listing, and the original poster claimed it could also be found on the Breath of the Wild and Super Mario Party Jamboree Nintendo Switch 2 Edition pages, but it seems to have been removed now. Hmm.

Where things get really confusing, however, is that these disclaimers appear to be present only on the European and North American game listings. Eurogamer noted that no such message appears on the Japanese site for any of the games, muddying the waters even further. Again, hmmmmm.

We've reached out to Nintendo for a comment on these cloud save shenanigans and will update this article when we hear back.

Removing the NSO cloud save option from new Switch 2 games is a baffling move, but taking it from the likes of TOTK (which, let's not forget, supported it in its Switch 1 form) makes even less sense. We know that you'll be able to move your original BOTW and TOTK save files over to the Switch 2 editions, but, if the disclaimer is correct, you won't be able to make a new cloud save in the latter once it's there.

Surely there's been a mistake here. We'll be keeping an eye out for more clarification in the run-up to Switch 2's launch, but until then, cherish your cloud saves, folks. Who knows how long they'll last?

What do you think is going on here? Let us know in the comments.

[source resetera.com, via eurogamer.net]

Jim came to Nintendo Life in 2022 and, despite his insistence that The Minish Cap is the best Zelda game and his unwavering love for the Star Wars prequels (yes, really), he has continued to write news and features on the site ever since.

REALTIMEVFX.COM
Create Flipbook Textures! (Tutorial)
Hello everyone! I made a tutorial on how you can make your own explosion fireball flipbook texture using Blender. I show you how to simulate, render, make motion vectors and use the result in a game engine. Check it out: Make A Fireball Flipbook Texture in Blender! (Game VFX Asset). If you have any questions, let me know in the YouTube comments!

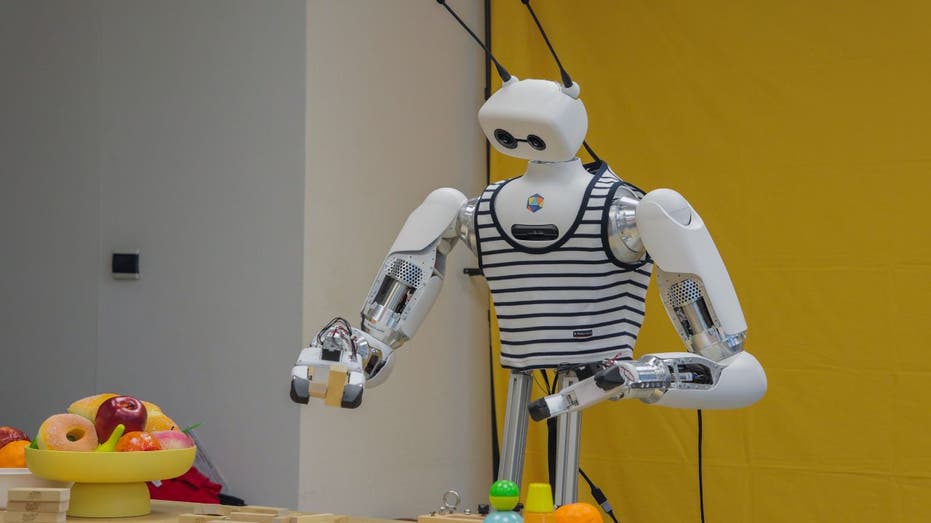

WWW.FOXNEWS.COM
Can this $70,000 robot transform AI research?
Hugging Face to sell open-source robots thanks to Pollen Robotics acquisition
Published April 18, 2025 6:00am EDT

The folks at Hugging Face, the open-source artificial intelligence gurus, just jumped into the world of robotics by acquiring Pollen Robotics. And right out of the gate, they are offering the Reachy 2, a super-interesting humanoid robot designed as a "lab partner for the AI era." Ready to dive in and see what all the buzz is about?

What makes this humanoid robot so special?

So, what makes Reachy 2 stand out? Well, first off, it's a state-of-the-art humanoid robot already making waves in labs like Cornell and Carnegie Mellon. It's designed to be friendly and approachable, inviting natural interaction. This robot is open-source and VR-compatible, perfect for research, education and experimenting with embodied AI.

The innovative Orbita joint system gives Reachy 2's neck and wrists smooth, multi-directional movement, making it remarkably expressive. Reachy 2 also features human-inspired arms.

Its mobile base, equipped with omni wheels and lidar, allows for seamless navigation, and the VR teleoperation feature lets you literally see through the robot's eyes! Finally, its open-source nature fosters collaboration and customization, with Pollen Robotics providing a ton of resources on their Hugging Face organization.

Technical specifications

This humanoid robot combines advanced vision, audio and actuator systems for cutting-edge AI interaction and teleoperation. Here's a quick look at what Reachy 2 brings to the table.

Standing between 4.46 and 5.45 feet tall and weighing in at 110 pounds, it features bio-inspired arms with 7 degrees of freedom, capable of handling payloads up to 6.6 pounds. It's also equipped with a parallel torque-controlled gripper and multiple cameras for depth perception, plus a high-quality audio system. Navigating its environment is a breeze thanks to its omnidirectional mobile base.

When it comes to perception, Reachy 2 has a vision module in its head with dual RGB cameras and a Time-of-Flight module for depth measurement. There's also an RGB-D camera in its torso for accurate depth sensing. Immersive stereo perception is achieved through microphones in Reachy's antennas.

For interaction, Reachy 2 has custom-built speakers with a high-quality amplifier and a Rode AI-Micro audio interface. Its expressive head is powered by an Orbita system, and it has motorized antennas for enhanced human-robot interaction.

Reachy 2's manipulation capabilities stem from its Orbita 3D and 2D parallel mechanisms, along with a Dynamixel-based parallel gripper that features torque control.

Controlling Reachy 2 is a Solidrun Bedrock v3000 unit, with AI processing handled on external hardware. Finally, the mobile base includes omnidirectional wheels, Hall sensors and an IMU, an RP Lidar S2 and a LiFePO₄ battery.

Cost of the humanoid robot

Getting your hands on Reachy 2 will cost you $70,000, a price that reflects its cutting-edge robotics and AI components and open-source capabilities, making it a serious investment for researchers and educators looking to push the boundaries of human-robot interaction.

Hugging Face and Pollen Robotics team up

So, what does Hugging Face scooping up Pollen Robotics really mean? Well, it could signal a big push toward making robotics more accessible. Think of it this way: Thomas Wolf, co-founder and chief scientist at Hugging Face, says, "We believe robotics could be the next frontier unlocked by AI, and it should be open, affordable, and private."

Matthieu Lapeyre, Pollen Robotics co-founder, echoes this sentiment: "Hugging Face is a natural home for us to grow, as we share a common goal: putting AI and robotics in the hands of everyone."

Hugging Face's acquisition of Pollen Robotics represents its fifth acquisition, after Gradio and Xethub. This move solidifies Hugging Face's commitment to open-source AI and its vision for a future where AI and robotics are accessible to all.

Kurt's key takeaways

Bottom line? Hugging Face is making moves. Who knows, maybe one day we'll all have our own Reachy to help with the chores (or just keep us company). Either way, the collaboration between Hugging Face and Pollen Robotics is definitely worth keeping an eye on.

If you could use a robot like Reachy 2 for any purpose, what would it be and why? Let us know by writing us at Cyberguy.com/Contact.

For more of my tech tips and security alerts, subscribe to my free CyberGuy Report Newsletter by heading to Cyberguy.com/Newsletter.

Copyright 2025 CyberGuy.com. All rights reserved.

Kurt "CyberGuy" Knutsson is an award-winning tech journalist who has a deep love of technology, gear and gadgets that make life better with his contributions for Fox News & FOX Business beginning mornings on "FOX & Friends."

WWW.ZDNET.COMAI has grown beyond human knowledge, says Google's DeepMind unitworawit chutrakunwanit/Getty ImagesThe world of artificial intelligence (AI) has recently been preoccupied with advancing generative AI beyond simple tests that AI models easily pass. The famed Turing Test has been "beaten" in some sense, and controversy rages over whether the newest models are being built to game the benchmark tests that measure performance.The problem, say scholars at Google's DeepMind unit, is not the tests themselves but the limited way AI models are developed. The data used to train AI is too restricted and static, and will never propel AI to new and better abilities. In a paper posted by DeepMind last week, part of a forthcoming book by MIT Press, researchers propose that AI must be allowed to have "experiences" of a sort, interacting with the world to formulate goals based on signals from the environment."Incredible new capabilities will arise once the full potential of experiential learning is harnessed," write DeepMind scholars David Silver and Richard Sutton in the paper, Welcome to the Era of Experience.The two scholars are legends in the field. Silver most famously led the research that resulted in AlphaZero, DeepMind's AI model that beat humans in games of Chess and Go. Sutton is one of two Turing Award-winning developers of an AI approach called reinforcement learning that Silver and his team used to create AlphaZero. The approach the two scholars advocate builds upon reinforcement learning and the lessons of AlphaZero. It's called "streams" and is meant to remedy the shortcomings of today's large language models (LLMs), which are developed solely to answer individual human questions. Google DeepMindSilver and Sutton suggest that shortly after AlphaZero and its predecessor, AlphaGo, burst on the scene, generative AI tools, such as ChatGPT, took the stage and "discarded" reinforcement learning. That move had benefits and drawbacks. 
Gen AI was an important advance because AlphaZero's use of reinforcement learning was restricted to limited applications. The technology couldn't go beyond "full information" games, such as Chess, where all the rules are known. Gen AI models, on the other hand, can handle spontaneous input from humans never before encountered, without explicit rules about how things are supposed to turn out. However, discarding reinforcement learning meant, "something was lost in this transition: an agent's ability to self-discover its own knowledge," they write.Instead, they observe that LLMs "[rely] on human prejudgment", or what the human wants at the prompt stage. That approach is too limited. They suggest that human judgment "imposes "an impenetrable ceiling on the agent's performance: the agent cannot discover better strategies underappreciated by the human rater.Not only is human judgment an impediment, but the short, clipped nature of prompt interactions never allows the AI model to advance beyond question and answer. "In the era of human data, language-based AI has largely focused on short interaction episodes: e.g., a user asks a question and (perhaps after a few thinking steps or tool-use actions) the agent responds," the researchers write."The agent aims exclusively for outcomes within the current episode, such as directly answering a user's question." There's no memory, there's no continuity between snippets of interaction in prompting. "Typically, little or no information carries over from one episode to the next, precluding any adaptation over time," write Silver and Sutton. 
However, in their proposed Age of Experience, "Agents will inhabit streams of experience, rather than short snippets of interaction."

Silver and Sutton draw an analogy between streams and humans learning over a lifetime of accumulated experience, and how they act based on long-range goals, not just the immediate task.

"Powerful agents should have their own stream of experience that progresses, like humans, over a long time-scale," they write.

Silver and Sutton argue that "today's technology" is enough to start building streams. In fact, the initial steps along the way can be seen in developments such as web-browsing AI agents, including OpenAI's Deep Research. "Recently, a new wave of prototype agents have started to interact with computers in an even more general manner, by using the same interface that humans use to operate a computer," they write.

The browser agent marks "a transition from exclusively human-privileged communication, to much more autonomous interactions where the agent is able to act independently in the world."

As AI agents move beyond just web browsing, they need a way to interact and learn from the world, Silver and Sutton suggest. They propose that the AI agents in streams will learn via the same reinforcement learning principle as AlphaZero. The machine is given a model of the world in which it interacts, akin to a chessboard, and a set of rules. As the AI agent explores and takes actions, it receives feedback as "rewards". These rewards train the AI model on what is more or less valuable among possible actions in a given circumstance.

The world is full of various "signals" providing those rewards, if the agent is allowed to look for them, Silver and Sutton suggest.

"Where do rewards come from, if not from human data? Once agents become connected to the world through rich action and observation spaces, there will be no shortage of grounded signals to provide a basis for reward. In fact, the world abounds with quantities such as cost, error rates, hunger, productivity, health metrics, climate metrics, profit, sales, exam results, success, visits, yields, stocks, likes, income, pleasure/pain, economic indicators, accuracy, power, distance, speed, efficiency, or energy consumption. In addition, there are innumerable additional signals arising from the occurrence of specific events, or from features derived from raw sequences of observations and actions."

To start the AI agent from a foundation, AI developers might use a "world model" simulation. The world model lets an AI model make predictions, test those predictions in the real world, and then use the reward signals to make the model more realistic. "As the agent continues to interact with the world throughout its stream of experience, its dynamics model is continually updated to correct any errors in its predictions," they write.

Silver and Sutton still expect humans to have a role in defining goals, for which the signals and rewards serve to steer the agent. For example, a user might specify a broad goal such as 'improve my fitness', and the reward function might return a function of the user's heart rate, sleep duration, and steps taken. Or the user might specify a goal of 'help me learn Spanish', and the reward function could return the user's Spanish exam results.

The human feedback becomes "the top-level goal" that all else serves.

The researchers write that AI agents with those long-range capabilities would be better as AI assistants. They could track a person's sleep and diet over months or years, providing health advice not limited to recent trends. Such agents could also be educational assistants tracking students over a long timeframe.

"A science agent could pursue ambitious goals, such as discovering a new material or reducing carbon dioxide," they offer. "Such an agent could analyse real-world observations over an extended period, developing and running simulations, and suggesting real-world experiments or interventions."

The researchers suggest that the arrival of "thinking" or "reasoning" AI models, such as Gemini, DeepSeek's R1, and OpenAI's o1, may be surpassed by experience agents. The problem with reasoning agents is that they "imitate" human language when they produce verbose output about steps to an answer, and human thought can be limited by its embedded assumptions. "For example, if an agent had been trained to reason using human thoughts and expert answers from 5,000 years ago, it may have reasoned about a physical problem in terms of animism," they offer. "1,000 years ago, it may have reasoned in theistic terms; 300 years ago, it may have reasoned in terms of Newtonian mechanics; and 50 years ago, in terms of quantum mechanics."

The researchers write that such agents "will unlock unprecedented capabilities," leading to "a future profoundly different from anything we have seen before." However, they suggest there are also many, many risks. These risks are not just focused on AI agents making human labor obsolete, although they note that job loss is a risk. Agents that "can autonomously interact with the world over extended periods of time to achieve long-term goals," they write, raise the prospect of humans having fewer opportunities to "intervene and mediate the agent's actions." On the positive side, they suggest, an agent that can adapt, as opposed to today's fixed AI models, "could recognise when its behaviour is triggering human concern, dissatisfaction, or distress, and adaptively modify its behaviour to avoid these negative consequences."

Leaving aside the details, Silver and Sutton are confident the streams experience will generate so much more information about the world that it will dwarf all the Wikipedia and Reddit data used to train today's AI. Stream-based agents may even move past human intelligence, alluding to the arrival of artificial general intelligence, or super-intelligence.

"Experiential data will eclipse the scale and quality of human-generated data," the researchers write. "This paradigm shift, accompanied by algorithmic advancements in RL [reinforcement learning], will unlock in many domains new capabilities that surpass those possessed by any human."

Silver also explored the subject in a DeepMind podcast this month.
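To make the 'improve my fitness' example from the article concrete, here is a minimal Python sketch of what such a reward function might look like. It is only an illustration: the `DailyMetrics` type, the `fitness_reward` name, the weights, and the scaling factors are all assumptions made for this sketch and do not come from Silver and Sutton's paper.

```python
from dataclasses import dataclass


@dataclass
class DailyMetrics:
    """One day of hypothetical health signals for a user."""
    resting_heart_rate: float  # beats per minute
    sleep_hours: float
    steps: int


def fitness_reward(today: DailyMetrics, yesterday: DailyMetrics) -> float:
    """Return a scalar reward that is positive when the signals improve.

    The weights and scales are illustrative assumptions, not values
    taken from the paper.
    """
    # A lower resting heart rate counts as an improvement.
    heart_term = (yesterday.resting_heart_rate - today.resting_heart_rate) / 10.0
    # More sleep counts as an improvement, capped at 8 hours
    # so that oversleeping earns no extra reward.
    sleep_term = min(today.sleep_hours, 8.0) - min(yesterday.sleep_hours, 8.0)
    # More steps count as an improvement, scaled per 1,000 steps.
    steps_term = (today.steps - yesterday.steps) / 1000.0
    return 0.4 * heart_term + 0.3 * sleep_term + 0.3 * steps_term
```

In a stream of experience, an agent would presumably receive a signal like this day after day and adjust its advice accordingly, rather than being scored once by a human rater at the end of a single exchange.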


WWW.FORBES.COM
The Age Of Agents: Modernizing Your Applications
As new technologies emerge and business needs change, legacy applications face multiple challenges in performance, scalability and security.
