How to record audio and get transcripts in Notes on iOS 18
www.popsci.com

Notes is even more useful with iOS 18. Image: Graphicscoco/Getty Images

Apple has packed a lot into iOS 18: not just the mobile operating system itself, but also the apps that run on top of it. One of the most useful upgrades is in the Notes app. You can now record voice memos right inside Notes, and even have your speech transcribed into text while you talk.

You might find this useful in all kinds of situations, from lectures and interviews to your own personal thoughts and musings. You can listen to the voice notes and review the text whenever you like, and you can also export the text elsewhere (if you need to share notes from a meeting, for example).

Note that the Voice Memos app also includes transcription features with iOS 18, so you can use that as an alternative to Notes.

Recording voice notes

Tap the paperclip icon to find the option to record (and transcribe) audio. Screenshot: Apple

With a note open on your iPhone, tap the paperclip icon on the toolbar underneath the note, then choose Record Audio. A new recording interface appears on screen: use the large red button to start recording, then tap it again to pause and resume. You can record several segments in the same audio file, if needed.

The playback controls on the recording screen are straightforward: you can listen to what you've already recorded via the play button, and use the jump buttons on either side to move forwards or backwards through the audio in 15-second increments. Note that you can't resume recording unless you're at the end of the clip.

Tap the speech bubble in the lower-left corner to toggle between the sound wave view and the transcription view. If you start recording while the transcription view is showing, you'll see the text update in real time as you talk. If you play back the audio in transcription mode, the text is highlighted as the clip plays.

If you've enabled Apple Intelligence on your iPhone, you have access to a few additional features. You can tap the Summary button at the top to see an AI-generated summary of everything you've said so far, which can come in handy if you're recording lengthy audio clips (for meetings and presentations, for example).

At the top of the recording you can see a date and timestamp to help you identify it, and you can give it a name by tapping the three dots (top right) and then Rename. That's probably a good idea, to avoid ending up with a whole series of clips just called "New Recording." When you're happy with your clip, tap Done to save it in the note.

Accessing voice notes

Your voice memo and its transcription are embedded in the note. Screenshot: Apple

Recordings appear as small embeds inside notes, and you can have multiple clips inside the same note if you need to. Each embedded recording comes with an AI-generated preview summary, and you can see the clip's length and title too. To play the audio back, just tap the play button.

Tap the title of the voice recording to go back to the main recording screen. From here you can listen to the clip, view the full transcription, and append more audio to the end of the file, just as you could when you first created it. Tap Done or swipe down from the top to go back to your note.

When you're on the recording and transcription screen, tap the three dots (top right) to find more options. The menu lets you add the full text of the transcript to your note, copy the transcript to the iPhone clipboard (for pasting into another app), or search through the transcript.

You can also save the audio clip to the Files app on your iPhone, or share the clip to a different app. Finally, there's a Delete option, which erases the recorded audio and its attached transcript. Anything you delete is fully wiped and can't be brought back, so be careful with this (you do get a confirmation screen before anything is deleted for good).

These transcription features can be applied to existing audio recordings too, so you don't have to record something new. Tap the paperclip icon inside a note, choose Attach File, and select an audio file; it'll be embedded in the note in the same way as a live recording. Tap the audio file to see a transcription.

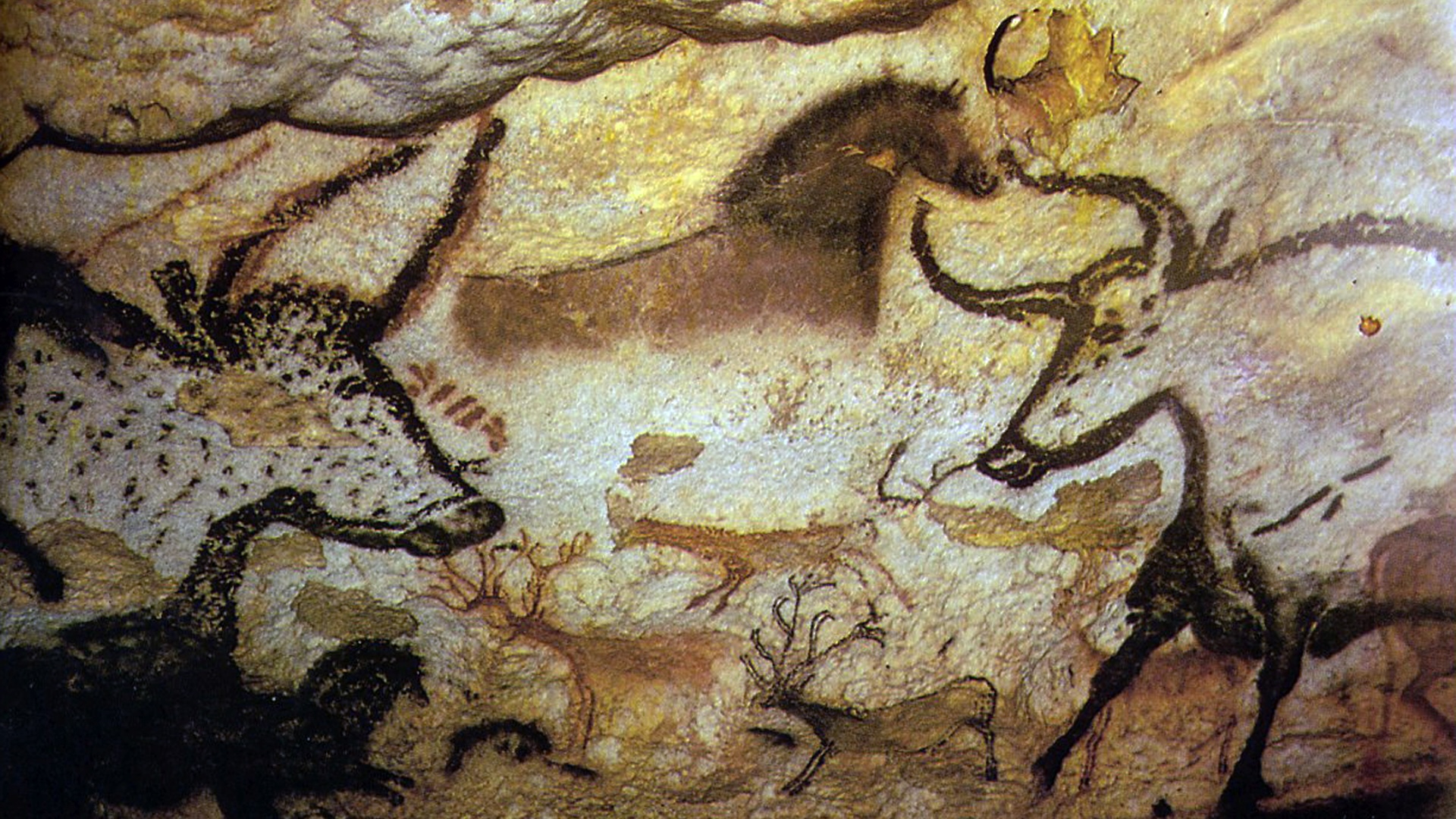

7 haunting caves ancient humans used for art, burials and butchering
www.livescience.com

From stunning artwork to evidence of elaborate prehistoric butchering, Live Science takes a look at seven amazing caves that contain archaeological remains.

It's tough to resist scratching an itch, and evolution may be to blame
www.livescience.com

A new study conducted in mice suggests that, although it's not all good, our urge to scratch at itchy skin may have an evolutionary benefit.

www.reddit.com

Hey everyone! In Hinokai, ancient warriors, advanced robots, and creatures from Japanese folklore coexist. Crime syndicates big and small rule its neon streets, and the slave markets of old are thriving. Through its dark, corrupted streets, we follow the tales and legends of each unique character. I'm really excited to share with the community the encyclopedia that my friends and I have been working on for many years. Our ambition is to tell Japanese tales set in a futuristic world. My friends and I love Japanese folklore and the cyberpunk genre, so we made this series together. I would really appreciate it if you'd follow our Kickstarter so you can check out the trailer we've been working on when we launch. We've put together over 200 pages filled with stunning illustrations, rich lore, and world-building that make this setting feel alive. It's an encyclopedia, a visual experience, and a gateway into the heart of Hinokai. This has been a passion project for a long, long time, and I can't wait to share more with all of you. Thank you, and let me know what you think!

submitted by /u/Cyber_Sheep_Film

Hogwarts Legacy 2 Multiplayer Would Have a Great Stand-In For One Mechanic
gamerant.com

Multiplayer has long been a topic of discussion in relation to Hogwarts Legacy, 2023's best-selling game by a significant margin. Neither Warner Bros. nor developer Avalanche Software has officially announced a Hogwarts Legacy follow-up, but it seems like only a matter of time, considering the success of the first game. Assuming such a sequel is on the way, it will be interesting to see how Avalanche responds to the multiplayer discussion.

Lost Castle 2: Multiplayer & Co-Op Guide
gamerant.com

Finding a multiplayer game like Lost Castle 2 that's both fun and highly replayable isn't easy. If you've discovered this hidden gem, consider yourself lucky! The best part is that you can team up with up to three other players, whether you're all on the same couch or scattered across different continents.

The best movies new to streaming this February
www.polygon.com

It's only been one month since the year started (can you believe it?), and there are a ton of exciting new releases in February to look forward to. Captain America: Brave New World and Osgood Perkins' The Monkey are the movies to see in theaters this month, while Scott Derrickson's sci-fi horror film The Gorge premieres on Apple TV Plus. If you're looking for even more great movies to stream from the comfort of your home this February, you've come to the right place.

This month, we've got a beautiful period drama starring Daniel Day-Lewis, an idiosyncratic comedy about an eccentric concierge, an underrated crime comedy set in 1970s Los Angeles, and more.

Here are the best movies new to streaming services you should watch this month!

Editor's pick: Phantom Thread
Where to watch: Criterion Channel
Genre: Period drama
Director: Paul Thomas Anderson
Cast: Daniel Day-Lewis, Lesley Manville, Vicky Krieps

Paul Thomas Anderson's 2017 historical drama Phantom Thread follows the story of Reynolds Woodcock (Daniel Day-Lewis), an irascible haute couture dressmaker in 1950s London whose carefully cultivated lifestyle is upset by his love affair with his muse Alma (Vicky Krieps), a strong-willed woman with ambitions and desires of her own. In his most recent film role to date, Day-Lewis is unsurprisingly masterful in his portrayal of Woodcock as an artist whose capricious infatuations and fastidious inflexibility prove unbearable to all except Alma, who discovers a, let's say, unconventional way of leveling the power dynamic in their relationship. Top that with an exquisite score by Jonny Greenwood and beautiful costume designs by Mark Bridges, and you've got what is undoubtedly one of Anderson's finest films to date.
Toussaint Egan

New to Netflix
The Nice Guys
Genre: Neo-noir action
Director: Shane Black
Cast: Russell Crowe, Ryan Gosling, Angourie Rice

In the nearly 10 years since it was first released, Hollywood still hasn't made a movie as funny as The Nice Guys. The movie stars Ryan Gosling and Russell Crowe as private eyes reluctantly thrown together on a mysterious case that takes them all through 1970s Los Angeles, from adult film industry parties to the top of the United States government.

But for all the fun silliness of The Nice Guys' actual plot, the movie's most hilarious charm comes from the interplay of its two lead actors. Crowe was born to play a no-nonsense straight man who's twice as large and intimidating as anyone else in the movie, and watching him deal with Gosling's slacker nitwit character never gets old. Even better, the movie is absolutely packed with the kind of physical comedy that's been sorely lacking in Hollywood over the last few decades. The whole thing adds up to an absolutely fantastic movie that's constantly begging for a rewatch.
Austen Goslin

New to Hulu
The Grand Budapest Hotel
Genre: Comedy drama
Director: Wes Anderson
Cast: Ralph Fiennes, F. Murray Abraham, Mathieu Amalric

There are a lot of right answers when it comes to the question of what your favorite Wes Anderson movie is, but it's likely that, all told, The Grand Budapest Hotel is the director's masterpiece. The movie follows the life story of a man who started his time at the hotel as a lowly bellboy, taken under the wing of the hotel's legendary concierge, M. Gustave (Ralph Fiennes). The story follows the adventures the two go on together, including seeing the hotel through the end of a peaceful era and the rise of fascism in Europe.

While the subject matter may sound heavy, part of the key to the movie's excellence is the deftness with which Anderson handles its tone. He uses his signature whimsy and talent for twee aesthetic sensibilities to counterbalance the heaviness of the movie's themes, turning the whole movie into the kind of safe haven from doom that the hotel itself comes to represent in the story. It's the most effective weaponization of self-awareness that Anderson has ever employed, and it makes The Grand Budapest Hotel a particularly lofty achievement, even in a career full of classics.
AG

New to Max
Malcolm X
Genre: Biographical drama
Director: Spike Lee
Cast: Denzel Washington, Angela Bassett, Albert Hall

What better way to ring in Black History Month than by watching Spike Lee's critically acclaimed biopic of one of the most divisive and iconic civil rights activists of his time? Based on The Autobiography of Malcolm X, the film roughly follows the same arc as its inspiration, charting the late human rights activist and firebrand from his formative years in Boston to his tenure as a member of the Nation of Islam and his untimely death at the age of 39. Denzel Washington delivers one of the defining performances of his career, capturing the emotional complexity and intellectual depth of Malcolm's life. If you're looking for a compelling drama about a complicated, flawed, and thoroughly inspiring historical figure, this is the one to watch this month.
TE

New to Prime Video
Edge of Tomorrow
Genre: Sci-fi action
Director: Doug Liman
Cast: Tom Cruise, Emily Blunt, Bill Paxton

For all the greatness of the later Mission: Impossible movies, it was Edge of Tomorrow that truly kicked off Tom Cruise's mid-career renaissance. And that's not too surprising, considering that Tom Cruise has never made a better movie about being Tom Cruise than this one.

This sci-fi spin on Groundhog Day follows Cruise as a military officer in a future where the world is in a war of annihilation with a race of sentient alien machines. But far from a battle-hardened commander or a decorated hero of the conflict, Cruise's character is just a PR person, a figurehead with no combat experience and a disdainful view of the soldiers around him, at least until he gets stuck in a time loop and realizes he's going to have to do his part to save humanity. Meanwhile, the one taking on the more traditional movie-star role is Emily Blunt, who plays a true war hero who takes it upon herself to train Cruise again and again.

This excellent premise gives us the chance to see the one thing we almost never get to see Cruise do on screen: fail. He fails over and over and over again, dying meaninglessly each time until he finally starts to succeed, because he knows that all it takes is one right run-through of the loop to save the world. It's Tom Cruise's moviemaking philosophy in miniature, and it's beautiful, fun, and, of course, incredibly entertaining to watch.
AG

Dimension 20's guide to the real Unsleeping City, actual New York
www.polygon.com

Dimension 20's sold-out appearance at Madison Square Garden saw the return of their Unsleeping City campaign, set in a magical sixth borough of New York City. Ahead of the live show, we sat down with Ally Beardsley and Brennan Lee Mulligan to get their take on the real New York City.

Read on for advice on the best time to visit Times Square, and the unsung neighborhoods that were foundational to Mulligan's bohemian youth in the city.

Yankee Stadium

Brennan Lee Mulligan: Yankee Stadium is a stadium that I didn't go to too much. My dad was a Mets fan, and I used to go to Shea Stadium with my old man, and we would yell at the Mets, which is what Mets fans do. If you love the Yankees, you want to see the Yankees play, then go to Yankee Stadium.

I bear them no ill will, but I would not pressure someone to go to a Yankees game if they did not feel in their heart that that was right. We're starting off with something from a place of pure, kind neutrality.

Ally Beardsley: Last time I was at a baseball game, the woman in front of me was Googling "how long is a baseball game." So that should tell you how much fun is in store for you.

Greenpoint

Beardsley: You got married there.

Mulligan: I got married there. Look at that. And I lived in Greenpoint for a little while because a cruise ship broke! And I had nowhere else to go. I had to sublet my room and I had to move into Ryan Haney's place. I slept in a closet on an air mattress.

Greenpoint was very central to me because at my most bohemian (where, again, I was sleeping on an air mattress in a closet), that's where I was. So there's a little bit of autobiography. That was also a time when I had just been dumped, and so I was single and dating and I was fun and fancy free in Brooklyn. So it's like, "We're dating! We're doing shows at night! It's in a new neighborhood! Life's an adventure! Oh, we have feelings for each other! Let's go to your place. I sleep on a balloon." That was the vibe. I was like, "I need to date so I can sleep somewhere where I can actually rest."

It is a vibrant immigrant community in New York, and I think there's a certain degree in Greenpoint of places that are weirdly central while being off the beaten path. So it felt like a cool little artsy place to be hidden away.

[In the Unsleeping City campaign] Pete the Plug was looking for the New York transplant experience, and at the time we were doing this, Greenpoint was a neighborhood that still had affordable apartments. It's still largely a Polish immigrant neighborhood, and there's places for artsy, like, people in Pete's bracket. It made sense to live in Greenpoint.

Beardsley: And not anymore.

Mulligan: Less so these days. Less so these days for sure.

The City Hall subway station

Mulligan: This is not in service and has not been for a long, long time. You get here by, I think, taking the 6 [subway line]. You take the 6 past its last stop and it goes through a beautiful non-in-use subway station that you can see through the windows of the train. God, it's magical. I can't believe... no. Did we use this [in Unsleeping City]? I think this is where the entrance to the Dragon of Bleecker Street's place was, in the Unsleeping City. It's an incredibly magical location. Stay on the 6 past the last stop heading southbound.

It's a funny thing, because obviously every New Yorker complains about the subway, because it should be better, but it is still the best 24-hour public transit. It transports millions of New Yorkers every day. It's an incredible feat, and it creates a type of civic life that cannot exist in the American cities that were poisoned by Robert Moses' attempts to kill it, and that affected the American city such that places that should not be car-centric are car-centric.

Beardsley: Yes, shoutout to the MTA. Metro-North. My whole family works on Metro-North.

Astoria

Mulligan: Astoria is a really wonderful neighborhood. I believe for a period of time, Astoria had the distinction in the world (not just the country, in the world) of being the postal code with the most first languages spoken of anywhere in the world. And if that doesn't make you proud to be a fucking New Yorker, I don't know, baby. The melting pot shit is not a joke, man. I know it's corny, but it's fucking beautiful. It's amazing. People all over the world come to Astoria and live there. And as St. [Anthony] Bourdain said, Queens is the center of New York food. There's amazing food in every single borough, but Queens has a relationship to food, especially because of all the vibrant different communities. How many first languages are spoken here? How many cuisines are represented authentically from people who know how to cook it?

A bodega

Beardsley: A bodega is a place where cats live.

Mulligan: A bodega is a spiritual center that gives the lie to the disproven thesis that the only way to ensure reliability of service is through the forced sterility of a corporate chain. You can get the constancy of your Panera Bread from multiple, homegrown, local businesses. God bless the bodega. Long may she reign.

Beardsley: I love bodegas. God, I love bodegas. "How's your wife?" I love to say that when I walk into a bodega. Immediate familiarity.

Times Square

Mulligan: The most magical place in Times Square is not a place. It's time. Times Square is the armpit of hell for 20 hours out of the day. But for a four-hour stretch in between, I'd say, 1:30 a.m. and 5:30 a.m., Times Square is beautiful.

Beardsley: Like during COVID, where everyone was like, "Times Square is empty!" It's really crazy how beautiful it is.

Mulligan: I used to be a driver for indie features. I was a production van driver, and when you're a driver, you gotta be up early enough to pick up the earliest people who are getting up. So I was up at like 3:30 in the morning to get to work, and I'd walk through at like 4:00 a.m. with a cup of coffee and be freezing cold, and look at the lights. And you see all of the fucking beating heart of this modern world and advertising. And just for a moment, all of this power and energy is directed at selling you, and you alone, lingerie and Broadway tickets and M&M's. And you're like, this is crazy.

What's the real sixth borough?

Beardsley: The one in your heart.

Mulligan: Los Feliz.

Beardsley: That's real! That's fucking real!

Mulligan: Lemme tell you, Los Feliz is Brooklyn circa 2006. I look around and I'm like, "I saw you in Brooklyn. I saw you in Brooklyn. What are you all doing out here?"

Beardsley: If you're moving to LA from New York, just go to Los Feliz. You're going to end up there anyway.

Mulligan: You legally can't get an apartment anywhere else.

Dimension 20's guide to the real Unsleeping City, actual New York
www.polygon.com

Dimension 20's sold-out appearance at Madison Square Garden saw the return of their Unsleeping City campaign, set in a magical sixth borough of New York City. Ahead of the live show, we sat down with Ally Beardsley and Brennan Lee Mulligan to get their take on the real New York City.

Read on for advice on the best time to visit Times Square, and the unsung neighborhoods that were foundational to Mulligan's bohemian youth in the city.

Yankee Stadium

Brennan Lee Mulligan: Yankee Stadium is a stadium that I didn't go to too much. My dad was a Mets fan, and I used to go to Shea Stadium with my old man, and we would yell at the Mets, which is what Mets fans do. If you love the Yankees, you want to see the Yankees play, then go to the Yankee Stadium.

I bear them no ill will, but I would not pressure someone to go to a Yankees game if they did not feel in their heart that that was right. We're starting off with something from a place of pure, kind neutrality.

Ally Beardsley: Last time I was at a baseball game, the woman in front of me was Googling "how long is a baseball game." So it should tell you how much fun is in store for you.

Greenpoint

Beardsley: You got married there.

Mulligan: I got married there. Look at that. And I lived in Greenpoint for a little while because a cruise ship broke! And I had nowhere else to go. I had to sublet my room and I had to move into Ryan Haney's place. I slept in a closet on an air mattress.

Greenpoint was very central to me because at my most bohemian, where again, I was sleeping on an air mattress in a closet, that's where I was. So there's a little bit of autobiography. That was also a time when I had just been dumped, and so I was single and dating and I was fun and fancy free in Brooklyn. So it's like, "We're dating! We're doing shows at night! It's in a new neighborhood! Life's an adventure! Oh, we have feelings for each other! Let's go to your place. I sleep on a balloon."
That was the vibe. I was like, "I need to date so I can sleep somewhere where I can actually rest."

It is a vibrant immigrant community in New York, and I think there's a certain degree in Greenpoint of places that are weirdly central while being off the beaten path. So it felt like a cool little artsy place to be hidden away.

[In the Unsleeping City campaign] Pete the Plug was looking for the New York transplant experience, and at the time we were doing this, Greenpoint was a neighborhood that still had affordable apartments. It's still largely a Polish immigrant neighborhood, and there's places for artsy... like, [for] people in Pete's bracket, [it] made sense to live in Greenpoint.

Beardsley: And not anymore.

Mulligan: Less so these days. Less so these days for sure.

The City Hall subway station

Mulligan: This is not in service and has not been for a long, long time. You get here by, I think, taking the 6 [subway line]. You take the 6 past its last stop and it goes through a beautiful non-in-use subway station that you can see through the windows of the train. God, it's magical. I can't believe... no. Did we use this [in Unsleeping City]? I think this is where the entrance to the Dragon of Bleecker Street place was, in the Unsleeping City. It's an incredibly magical location. Stay on the 6 past the last stop heading southbound.

It's a funny thing because obviously every New Yorker complains about the subway, because it should be better, but it is still the best 24-hour public transit. It transports millions of New Yorkers every day. It's an incredible feat, and it creates a type of civic life that cannot exist in the American cities that were poisoned by Robert Moses' attempts to kill it, and that affected the American city such that places that should not be car-centric are car-centric.

Beardsley: Yes, shoutout to the MTA. Metro North. My whole family works on Metro North.

Astoria

Mulligan: Astoria is a really wonderful neighborhood.
I believe for a period of time, Astoria had the distinction in the world (not just the country, in the world) of being the postal code with the most first languages spoken of anywhere in the world. And if that doesn't make you proud to be a fucking New Yorker, I don't know, baby. The melting pot shit is not a joke, man. I know it's corny, but it's fucking beautiful. It's amazing. People all over the world come to Astoria and live there. And as St. [Anthony] Bourdain said, Queens is the center of New York food. There's amazing food in every single borough, but Queens has a relationship to food, especially because of all the vibrant different communities. How many first languages are spoken here? How many cuisines are represented authentically from people who know how to cook it?

A bodega

Beardsley: A bodega is a place where cats live.

Mulligan: A bodega is a spiritual center that gives the lie to the disproven thesis that the only way to ensure reliability of service is through the forced sterility of a corporate chain. You can get the constancy of your Panera Bread from multiple, homegrown, local businesses. God bless the bodega. Long may she reign.

Beardsley: I love bodegas. God, I love bodegas. "How's your wife?" I love to say that when I walk into a bodega. Immediate familiarity.

Times Square

Mulligan: The most magical place in Times Square is not a place. It's time. Times Square is the armpit of hell for 20 hours out of the day. But for a four-hour stretch in between, I'd say, 1:30 a.m. and 5:30 a.m., Times Square is beautiful.

Beardsley: Like during COVID, where everyone was like, "Times Square is empty!" It's really crazy how beautiful it is.

Mulligan: I used to be a driver for indie features. I was a production van driver, and when you're a driver, you gotta be up early enough to pick up the earliest people who are getting up. So I was up at like 3:30 in the morning to get to work, and I'd walk through at like 4:00 a.m. with a cup of coffee and be freezing cold, and look at the lights. And you see all of the fucking beating heart of this modern world and advertising. And just for a moment, all of this power and energy is directed at selling you, and you alone, lingerie and Broadway tickets and M&Ms. And you're like, "This is crazy."

What's the real sixth borough?

Beardsley: The one in your heart.

Mulligan: Los Feliz.

Beardsley: That's real! That's fucking real!

Mulligan: Lemme tell you, Los Feliz is Brooklyn circa 2006. I look around and I'm like, "I saw you in Brooklyn. I saw you in Brooklyn. What are you all doing out here?"

Beardsley: If you're moving to LA from New York, just go to Los Feliz. You're going to end up there anyway.

Mulligan: You legally can't get an apartment anywhere else.

Collected consciousness: AI product design for empowering human creativity
uxdesign.cc

Guernica, Picasso, 1937

Before we begin, I want you to consume this painting.

If you know the story, the details might already scream at you.

However, if you're unfamiliar with it, consider the content of the painting, read into the details, and build a story about it.

Try to understand why Picasso painted this.

Guernica, the town, Euskadi.eus

The painting is named after Guernica, the Basque town in Northern Spain, pictured above.

On April 26th, 1937, about 6 weeks before the painting was unveiled at the Paris International Exposition, the town's population was about 7,000.

In addition to the local civilian population, the town housed a communications center for the antifascist Spanish Republic during the Spanish Civil War, and so it was bombed by Nazi Germany and Fascist Italy: an intentional attack not just on military forces, but on the civilians who inhabited the town, to send a message.

Scroll back up and look at the painting again.

When you're done, come back here.

If you didn't know the story of this painting beforehand, now you do, and it might strike a different chord, if just slightly. The details of the painting now have the context that shows us what Picasso was thinking when he painted Guernica. The strained expression on the horse. The cold stare of the bull. The fallen soldier below the crying mother holding her dead child. The people of Guernica hold a candle that sheds a small ray of hope and growth for their fellow citizens while the light bulb, emitting no light, observes.

It's this kind of context that drives meaning in art. Guernica is not just a painting. It's communication. It's a very human way of expressing the feelings, experiences, and politics Picasso wanted to convey. It's a vessel of meaning built through words, actions, and paint. It's not just the product of a paintbrush hitting a canvas, but the product of a complex series of events and decisions made by its creator.
This is why Guernica has become such a protected piece of art, and why we still know its story today.

And one day, that story will be lost to time. If, for some reason, the painting survives past its story, the people of that future can only speculate about what it means. But the details will be there, and the choices Picasso made, expressed through those details, will guide viewers as they try to understand his feelings, experiences, and politics.

But this isn't an essay about art. It's about technology, and what it can and cannot do in art.

I started with this message because so much of our creative communication as human beings depends so deeply on the emotion, connection, and meaningful context created and consumed between creator and consumer. If I (Brandon) tell you (the reader) "I love you," that's very different from someone you actually care about, someone you have a history with, telling you they love you. Real, true communication between people, especially through art, requires emotion, connection, context, and meaning.

Unfortunately for some, but to the benefit of the rest of us, those are all things that artificial intelligence lacks within the content it generates.

The aesthetic of communication

Red and Blue Macaw, Allen & Ginter, 1889

If the above is an example of why people can generate content that communicates meaning and intention, I think it's important to distinguish why AI-generated content cannot.

Generative AI models are pattern visualization machines. LLMs process the words provided to them using relationships learned from their training data: which words appear together, how often, and in what contexts. These relationships inform the probability of the next word or token in a sequence of words or tokens.

Temperature: 1

Temperature: 2

This is why temperature is such an important factor in getting AI systems to generate anything we perceive as meaningful.
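To make temperature concrete: a model assigns each candidate next token a raw score (a logit), and temperature sampling divides those scores by the temperature before a softmax turns them into probabilities. The minimal sketch below uses four made-up tokens and scores, invented purely for illustration. It is the textbook form of temperature sampling, not any particular model's implementation.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw next-token scores (logits) into probabilities.

    Dividing by a temperature below 1 sharpens the distribution toward the
    top-scoring token; a temperature above 1 flattens it, giving unlikely
    tokens a larger share of the probability mass.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(tokens, logits, temperature, rng=random):
    """Draw one token at random, weighted by the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Made-up candidate tokens and scores, purely for illustration.
tokens = ["paint", "canvas", "war", "banana"]
logits = [3.0, 2.5, 1.0, -1.0]

for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, ", ".join(f"{tok}={p:.2f}" for tok, p in zip(tokens, probs)))

print(sample_next_token(tokens, logits, temperature=1.0))
```

With these invented scores, "paint" gets about 0.72 of the probability at temperature 0.5, about 0.57 at 1.0, and about 0.44 at 2.0. Over the same range, "banana" climbs from roughly 0.00 to about 0.06. The unlikely token is never forbidden; it just becomes easier to land on as the temperature rises.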
A lower temperature makes the model favor tokens with a higher probability of following the tokens provided, which more often looks like what we might see as an elaborate, well-thought-out response.

A higher temperature, on the other hand, flattens those probabilities so that less likely tokens are chosen more often. This more often generates content we might see as nonsensical, because those tokens sit further apart in the training data and aren't as statistically related to the input words.

Midjourney prompt: "A scene indicative of the horrors of war, in the style of Pablo Picasso"

Another way to visualize this concept is by highlighting bias in AI-generated images.

When we ask Midjourney to interpret and produce an image representative of the vague notion of "the horrors of war," it doesn't understand what I might mean by that. It associates "horror" with skeletons, "war" with soldiers in uniforms and helmets, "Picasso" with a cubist aesthetic, and Picasso + war + horror with horses, because those are elements present in Guernica. There are also noticeable influences from other artists with horror styles, like colors and compositional elements you might see from Goya or Beksinski.

When we discuss bias in AI, the focus is usually on a model's inability to accurately represent the world because the data it's trained on lacks information representative of the real world. Some of the most abhorrent examples are also the most obvious: when we rely on AI to represent people through generated images, it often defaults to stereotypes (because the training data says white men are a closer probabilistic image to wealth, and black people are closer to service work).

Generated with Canva Magic Media, February 2024

The images are racist and sexist because they are visualizations of data that is racist and sexist. There are efforts to reduce this kind of representational bias in generated images of people, for example through prompt injection.
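Prompt injection in this sense means rewriting the user's prompt before it reaches the image model. The sketch below is a hypothetical toy version of that idea. The word lists, the matching rule, and the function name are all invented for illustration, and it makes no claim about how Canva or any other product actually does it. If a prompt mentions a person but no age or gender, the sketch appends randomly chosen descriptors.

```python
import random
import re

# Hypothetical word lists, invented for illustration; not any product's real rules.
PERSON_WORDS = {"worker", "person", "doctor", "nurse", "teacher", "ceo"}
DESCRIPTORS = {
    "age": ["young", "middle-aged", "elderly"],
    "gender": ["woman", "man", "nonbinary"],
}


def augment_prompt(prompt, rng=random):
    """Append randomly chosen demographic descriptors to a prompt that mentions
    a person without specifying them. A toy model of prompt rewriting for
    variety: it only ever touches the attributes listed above."""
    words = set(re.findall(r"[a-z-]+", prompt.lower()))
    if not words & PERSON_WORDS:
        return prompt  # no person mentioned, so nothing is injected
    extras = [
        rng.choice(options)
        for options in DESCRIPTORS.values()
        if not words & set(options)  # skip attributes the user already chose
    ]
    if not extras:
        return prompt
    return f"{prompt} ({', '.join(extras)})"


rng = random.Random(42)
print(augment_prompt("A fast food worker cleaning a table", rng))
print(augment_prompt("An elderly woman worker cleaning a table", rng))
print(augment_prompt("A city road at night", rng))
```

Everything outside those two lists passes through untouched, including color, composition, and the reflective metal tables. That is why this kind of fix reaches only one layer of the bias.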
When you provide a vague prompt for "a fast food worker," the system will add details about race, age, gender, and so on to your prompt before generating the image. This adds a level of variety to the more socially risky aspects of the image.

A fast food worker cleaning a table, generated with Canva Magic Media, January 2025

But do you notice anything else in these images that points to other, more subtle visualizations of bias?

The colors, the composition of the images, and the metal reflective tables.

Demographics are only one of countless pattern associations in the training data. Prompt injection is a response to the outcry of people (rightfully) calling AI companies out for using racist and sexist data, but it's just a band-aid solution. It ignores the underlying fact that the architecture of GenAI models doesn't produce biased content merely due to a lack of data, but because AI-generated content is just data visualization of bias, period.

A city road at night, generated with Canva Magic Media. Notice the patterns in composition, color, visual elements, etc.

This is why it's easier to prompt for a particular style or subject than for fine-controlled content. GenAI doesn't think about the image it's producing: its form, its content, or anything else. It's just math, synthesizing content that mimics the general aesthetic of meaningful content.

It doesn't know a human is providing input (or anything else), and it doesn't take into account what they think about during the creative process. It simply generates text based on patterns of what a conversation looks like (or a painting, or a photograph, or a voice, or…), without a true understanding of the meaning behind the words it generates. Yes, even OpenAI's o1 doesn't think through or truly understand anything. It merely generates aesthetic text that mimics an internal dialogue, in order to generate more aesthetic text that mimics the external words that come after internal dialogue.

Bender et al. call these models "stochastic parrots" for this reason (and Hicks et al.
call them "bullshit," arguing that the term "hallucinations" is not only incorrect but misleading, as it implies the AI mistakenly generated incorrect text while intending to tell the truth).

There is no intention behind AI-generated text and no regard for the truth: just a likely (or unlikely) next string of data. In the Frankfurtian sense, it truly is bullshit content.

(Note: Hicks et al. call ChatGPT bullshit specifically, but I think it's important to note that it's not one model, or even the models in general, that are bullshit; saying so also anthropomorphizes them. It's the content produced that is bullshit, as it is content generated without regard for truth or meaning.)

"ChatGPT does not communicate meaning; we infer it." (Indi Young)

This is a fundamental flaw in relying on generative AI alone to produce anything creative. Any AI-generated content produced without human input and/or iteration is just weighted averages of collected data points. Those data points represent the output of conscious human thought processes (our text, our photographs, our paintings, our music), reduced to their aesthetics without regard for the truthful context or meaning we as humans try to convey through these creative or documentary mediums. It's like a search engine for generalities.

And because it's limited to producing only generalities, it cannot learn or understand the contextual gaps in data as we can. It cannot make creative decisions, or appreciate them. It can only highlight patterns in the noise that make statistical sense to highlight given what comes before, leaving us only to judge those patterns.

When we read human-written text, there is deeper thought, feeling, and intention present.
The words do not always represent the writer's deeper thoughts, feelings, and intentions, but this is part of what makes great artists or communicators: the ability to articulate those deeper parts of the self and communicate with people, directly or indirectly, through the artifacts they build.

But not everyone is an artist, and few people are great at communicating, despite the fact that they have those same kinds of thoughts, feelings, and intentions behind the text they write and the art they produce.

Indi Young touches on this through the lens of user research. When we interview people or read their reviews, the users are trying to convey their thoughts and opinions about an application. We record these thoughts, and when we play them back we often need to work through and interpret them to truly understand what they meant. People say things but mean other things way more often than we'd like to admit.

This is why the concept of "synthetic users," or user research without the users, just doesn't work as a means to replace humans. The text built out of user interviews is merely an end product of the nuanced lived experiences of people. It's hard work to read through text or interview transcripts and build a meaningful understanding of the context or reasoning behind what's recorded, and that context and reasoning are completely absent in AI training.

When we talk about the aesthetics of communication, we're talking about the patterns present in recorded content without the knowledge or understanding of how those patterns came to be in the first place. If generative AI is only able to synthesize and generate the aesthetics of communication, then it ultimately fails to capture the richness, nuance, and intent behind human expression.

Beyond the fundamental limitations of AI as pattern visualization, I also want to acknowledge that a large number of these models are built by training on vast collections of works without consent or compensation.
The same artists, writers, and musicians whose work these tools aim to replace have had their own creative output scraped and used to train these systems. We're replacing artists' work and perspective with soulless, perspectiveless, synthetic malappropriations of their work. Some companies are building models that exclusively use content they have the rights to (e.g., Pleias recently released a few models trained exclusively on data that is either not under copyright or published under a permissive license). Regardless, the data sourcing of these models needs reform. The people building tools on them also need to understand two things: those tools cannot replace artists' work, and trying to make them do so is poor design.

Artists share with each other, directly and indirectly. They are informed and inspired by one another. Directly, they talk with and learn from each other. Indirectly, they use each other's works as references for their own. This indirect sharing and consuming of open knowledge is extremely important for building a larger cultural sphere of communication and influence for artists, one that spans space and time.

Extending this knowledge-sharing gives us a framework for how we might design useful products for creative contexts. The patterns and insights that emerge from data synthesis provide utility not as replacements for human creativity, but as tools that can highlight new directions, validate assumptions, or spark creative exploration.

The problem isn't with the technology itself or its use in creative contexts.
The problem is that most applications built on GenAI try to replace creative people with poor simulacrums of their own work, rather than helping them harness these rich, implicit knowledge patterns to reach new creative heights.

This is a common pattern in design for emergent technology.

Designing around the Machine

Punctuation Personified, John Harris, 1824

Quite often, AI solutions get built simply because the technology is available and there's an opportunity to be had. They are not built because they solve a problem that the technology is particularly good at solving. We're designing around the machine, not the context.

This isn't a bad thing on its own. Speculative design can lead to useful insights about potential futures, and reflecting on these experiments helps us understand what problems a new technology is good at solving, and what it's not.

The problem is the disconnect between the motivations and intentions of research institutions and those of startups. Sometimes we build these experiments and call them products without carefully involving and learning from the people we've supposedly designed them for, all under the pressure of tight turnarounds for profit and risk-taking. When that happens, the solutions become just that: experiments. We're not considering the people we build for; we're building something for ourselves and marketing it as the next big thing. It's costly when it fails, and harmful before it does.

The large majority of attempts to inject AI into creative spaces, while marketed as tools for artists, writers, or musicians, are in reality just attempts to replace them, because we don't consider the actual needs or mental models of creative people. In art especially, the people building these experiments are often not artists themselves. They're building experiments that help people avoid becoming artists. They don't see or care about what artists do, or how they do it; they care about the end product of their work.
Then they use AI to build a shortcut to that end product, because that's what they see it produce: an aesthetic of an end product without an understanding of how the work it imitates comes to be in the first place.

Unsurprisingly, the end products of AI tools built this way usually end up replaceable, uninspiring, or boring, because AI alone produces content that is kitsch. It doesn't make the kinds of decisions artists make. When we rely on AI to do creativity for us, it generates something that is particularly and specifically not creative, something that doesn't tell a story or connect with the human experience. It's just data visualization.

So what do we do with content that doesn't tell a story, but looks like one?

What can we do with images or text or audio produced with no true meaning or understanding of the context surrounding intention?

What is bullshit useful for?

I'm reminded of my work facilitating design thinking and ideation workshops.

When we facilitate creative thinking, we solve a problem that stems from the limitations of working in and understanding a context. Facilitation helps folks think outside their normal modes of thinking by giving them ways to consider their limited context from a new angle.

As facilitators, we don't need to understand that context ourselves, nor do we need to have the niche expertise or knowledge our participants have. We just need to be able to get their knowledge out in the open, within a limited context. That helps them reflect on it and find the connections, insights, and observations that expand their thinking about that context.

Sometimes, that means interpreting complex topics and asking the obvious beginner questions, or making confidently wrong assumptions. Sometimes it means saying bullshit you know nothing about. The point is not to provide some grand insight. The point is that the participants, who do know a thing or two, can respond and say, "Wait, what? No, that's not it, but it reminds me of this thing."
It begins arguments, inspires discussion, or starts new trains of thought, because you're coming in and participating without understanding the full context.

As I said earlier, while generative AI can synthesize its training data into images, it does not inherently understand human desires, needs, or goals. But does providing a window into those insights for humans to summon and observe solve the same problem? Does it help them gain inspiration, make more informed and creative decisions, or build meaning themselves?

When I started working on interaction design for human-AI co-creativity, I worked with IBM Research to explore the question: "How might we help users form accurate mental models of generative AI in co-creative contexts to build effective human-AI collaboration?"

This research has mostly taken place within the realm of co-creation and design thinking. Over time, I've run workshops, experimented with AI, and gathered the opinions and insights I covered in the previous section. Along the way, I've come to see the way people interact with information and content more abstractly, as a medium for personal thought, regardless of the source of that content. I started to see the connections between these different modes of interacting with information, and the question for my personal research became: "How might we ensure human agency and intent when introducing artificial perspective & bias within creative contexts?"

When we consume AI-generated content, it can become a way to navigate weird, vague, cloud-like collections of patterns in human thought and expression. Despite (or perhaps because of) the lack of meaning behind these generations, we are presented with raw material to build new meaning from: meaning that can help us shape how we move forward in the real world.
This hollow simulacrum of communication now becomes an interface to capture, understand, shape, and represent our creative intentions, goals, thoughts, and feelings.

However, it's important to note that the concept of gaining creative inspiration and building personal meaning from what is essentially random or pseudorandom information is not a new idea; it is, in fact, a very ancient one. To consider how AI can be useful in creativity, we need to consider where else we build meaning with information, and what AI actually does when we interact with it creatively.

Let's cover this through two concepts.

Meaning Machines

The Sun, Pamela Colman Smith, 1909

Chris Noessel has been thinking about the question "How do you think new things?" for a long time.

In the talk linked above, Chris describes Meaning Machines as mechanisms for "noodling semantically" (or changing your frame of mind on a thing by intentionally skewing the semantics of that thing). He gives Tarot as an example, as well as I Ching, haruspicy, other esoteric practices, and more modern tools for spiritual fulfillment, like Mad Libs. Please go watch the talk; it's super interesting.

Meaning Machines are, at their core, signifiers, randomized into a fixed grammar, and read for new meaning.

Let's consider the Tarot example for a second, and more importantly, let's examine the interaction design of Tarot. Each card in the deck is a symbol (the signifier) with meaning assigned to it. We randomize the cards by shuffling them, place them on the mat, and interpret them. Depending on how they fall, their placement relative to one another, their direction, etc., we react to and reflect on these symbols as they relate to our life.

And so, we build personal meaning.

This is a creative act!
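The definition of a meaning machine (signifiers, randomized into a fixed grammar, and read for new meaning) can be sketched in a few lines. This is a minimal illustration under invented assumptions: a six-card deck, one-word keywords that are simplified and not authoritative, and a three-position spread. It is not a real tarot system. The code deliberately stops before interpretation, which is the human's part.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    name: str
    keyword: str  # simplified, illustrative meaning, not an authoritative reading


# A tiny illustrative deck: each card is a signifier with an assigned meaning.
DECK = [
    Card("The Fool", "beginnings"),
    Card("The Hermit", "introspection"),
    Card("The Tower", "upheaval"),
    Card("The Star", "hope"),
    Card("The Sun", "clarity"),
    Card("Death", "transformation"),
]

# The fixed grammar: each position frames how the card drawn into it is read.
SPREAD = ("what you are holding onto", "what is in your way", "where to look next")


def draw_reading(deck, spread, rng=random):
    """Randomize signifiers into a fixed grammar; interpretation is left to the person."""
    if len(spread) > len(deck):
        raise ValueError("the spread has more positions than the deck has cards")
    drawn = rng.sample(deck, k=len(spread))  # shuffle and draw without replacement
    reading = []
    for position, card in zip(spread, drawn):
        reversed_card = rng.random() < 0.5  # orientation adds another layer of variation
        orientation = "reversed" if reversed_card else "upright"
        reading.append(f"{position}: {card.name} ({card.keyword}, {orientation})")
    return reading


for line in draw_reading(DECK, SPREAD, random.Random(7)):
    print(line)
```

You can swap the fixed keywords for generated text, or the deck for a different set of prompts, without changing this shape. The system supplies the randomness and the grammar; the person reading the result supplies the meaning.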
We create meaning and intention for future decisions or outlooks on life out of what is essentially random data, presented and interpreted within the context we set and are set within.

Within the context of strictly creative work, a more practical analog meaning machine is Brian Eno and Peter Schmidt's Oblique Strategies: a deck of cards containing cryptic or ambiguous phrases, intended to be shuffled and pulled at random to provoke creative thinking and break a creative block. Intuit is another. Inspired by tarot and using gestalt principles, these cards are intended to help the player better understand their creativity and inspire the performance of creative acts.

Products of Place by SPACE10 and oio

Bringing this concept into the digital world, the prototype above was developed by the creative agency OiO in partnership with the now-closed IKEA R&D lab SPACE10. It's an interface where you choose any point in the world. An AI system then identifies and summarizes materials that are abundant in that location, often waste material, which can be used or recycled to create new things, like plates!

The core of the problem I highlighted earlier about AI is that too often we view the output of an AI system as the final product, something to be consumed or distributed as a means to avoid doing the important work. But a more useful application of these artifacts is to incorporate them as materials for use within larger scopes of work.
AI systems can become a new kind of meaning machine: a way to add interactivity and deeper, more complex variability to otherwise static signifiers, like cards.

When we employ AI like this, we begin to see how we might use it to enhance creative ideation and help people explore creative domains in ways they might not have considered before, rather than relying on the generated content as the final product we push into the world.

In this general context, then, the randomized signifiers are three things: the contextual data surrounding our creative pursuit, the data the AI is trained on, and the relationships built on that data through its training. These signifiers, the data, are then placed into a fixed grammar through agentive interaction and/or agentic actions. The user can then interpret the result to stimulate their creativity, build new meaning, or explore ideas they might not have considered before.

When we consider the utility of AI in creativity as a feature that helps us create meaning instead of consuming content, it gives us a way to frame how we build tools that act as collaborative partners in creative work and stimulate our creative action.

So, when building creative tools with this in mind, what should the actual interaction design between humans and AI look like?

Co-creative AI roles

In a previous article, I broke down the utility of generative AI within creative domains into three roles: the Puller, the Pusher, and the Producer.
I'll cover them below just briefly.

The Puller: The AI system gathers information about the context the user is working in through active question generation and passive information collection on the work.
Example: Pulpo, a GPT that takes notes about your ideas through interviews

The Pusher: The AI system uses some (or none) of this context to synthesize considerations for the user to employ throughout their creative journey.
Example: An AI chatbot that redesigns its interface at your command

The Producer: The AI system creates artifacts for use as elements of the user's larger creative output.
Example: A contextually informed sticky note content generator

(Each example shows all three roles in play, because the roles depend on one another to build a complete AI experience. Each is meant to highlight the specific role it's attached to.)

The AI system is informed by aesthetic patterns in its training data rather than by informed opinion. Even so, it can synthesize questions, observations, assumptions, and potentially useful artifacts in response to the user's expressed or gathered context, goals, needs, thoughts, feelings, and actions.

These actions of pulling context to generate pushed suggestions give the user information that doesn't require the AI system to have a deeper understanding of their history or of their knowledge around the creative pursuit. Instead, the system acts as a naive sounding board the user can respond to as they reflect on their progress.
Pushing gives the user a way to consider new paths. It challenges them through artificial assumptions about their work and can highlight gaps, acting as a kind of meaning machine for facilitating new ideas in context.

(One note on the Pusher role: it's important to design push systems so that the user feels comfortable rejecting the AI's propositions. Conversational AI characters encourage anthropomorphizing the AI and enforce a subtle power dynamic over the user where there doesn't need to be one.)

Where the Pusher role provokes the user to create their own ideas or artifacts, the Producer role uses GenAI to produce creative artifacts for use. It's important to consider how we might design our systems to produce artifacts here, rather than full works. This ensures our users keep agency over their creative process, rather than the system simply assuming their intended output. One example might be an AI-enabled rapid UI prototyping tool that builds web components based on an established design system. Another might be a lighting simulator that lets technicians move through lighting options for a film set and plan before setting up equipment. Generative fill is an example of productive co-creative AI.

One big point I want to make about these roles is that they intentionally don't frame generative AI as the product, but instead frame it as features. None of the examples provided work as full products; they are components that provide value within larger flows of creativity. As designers, the solutions we create must be holistically useful to our users, and so far, AI seems only to provide useful features that fit neatly within larger solutions.
Call them agents or call them bots; they are just tools.

Designing co-creative AI solution concepts

Let's get into it.

In this section, I'll build on the concepts described above to walk through a framework that can serve as a basis for setting direction in a workshop (along with an example workshop case study) or for framing longer-form user research and AI interaction design processes. It is intended to help designers and product teams quickly come together and align on a robust design concept for an AI solution that is informed by creative users' needs and intended to understand, react to, and empower creative processes, rather than replace them.

Designing AI systems that complement rather than replace creative functions is difficult, but dealing with the consequences of betting on AI to do the work of creatives is harder.

Creativity is something people enjoy doing, and we've already seen why they're better at it than machines. When designing systems meant to complement creative processes, it's important to understand the nuanced things people do that build up creative action, why we enjoy doing them, how we move through creativity in our real, human lives, and where we seek help throughout our creative journeys.

As I've considered where AI might fit within creative domains, where it helps, and where it hurts, I've built a framework that I believe can help others think through co-creative human-AI systems. I've outlined the framework below:

Part 1: modeling creativity in context

The first step is building an understanding of creativity in context and of how creative people move through creative work. To do this, we can build a mental model of their creative flow and environment, the processes they move through, their goals, and the actions they work through.

Choose a primary creative persona to focus on and, ideally by talking with them, map out the following:
What modalities do they work in, and when? (e.g., audio, visual, text, concepts, ideas, material)
What actions do they perform when being creative? (e.g., ideating, sketching, experimenting) Start at a high level, break these tasks down, and place them in order.
To perform these actions, what key concepts & principles guide their creative practice? (e.g., inspiration, feedback, iteration)
Where might our persona struggle, or benefit from outside help, along this creative process? Where is the tedium in this process? How could that tedium be useful to them, even indirectly?

Example:
At the STRAT 2024 conference I ran a short workshop walking participants through this framework to see whether we could build a solution that uses AI in a way that enhances creativity. Within a few hours we had conceptualized a rough idea for something I think we were all excited about: a tool to help designers create documentation more efficiently. I'll outline our process as we move through the framework.

As this was an educational workshop for designers, run by designers, we started by roughly mapping these categories out on sticky notes, focusing on the modalities, actions, concepts, principles, and struggles designers face as a whole, so we could narrow down the use case.

Here's a summary of what we worked through:
Modalities: Conceptual (User Journeys, Psychology, Information Architecture, etc.)
Visual (Graphic Design, Interfaces, Branding, etc.)
Interactive (Accessibility, Usability, Design Systems, etc.)
Actions, concepts, & principles: Conceptualize / Define / Plan / Develop / Research / Iterate / Experiment / Develop / Simplify / Annotate / Decide / Prioritize / Document (and much more)
Struggles: Prioritizing & Understanding Project Needs / Documenting Decisions / Communicating Reasoning / Reconciling & Articulating User and Business Needs / Feedback Without Context / Lack of Data / Ethical Decision Making / Understanding Long-term Implications

After mapping these out, we played them back, talked through where in the design process we'd really love some help, and landed on documenting the data used for design decisions and documenting design reasoning. We ended this part of the workshop by aligning on the following context to design a solution for:

Designers tasked with design documentation really struggle with the tedium of capturing, formatting, and sharing the reasoning and historical decisions behind their design process, especially when they don't have the time or resources to format it properly. This affects their relationships with developers, business stakeholders, and future designers iterating on their work. Designers in the workshop also agreed that while they understood the utility of documentation, they just didn't enjoy the process, making it a good target for a system that eases their workload.

Part 2: mapping contextual data

In this step, identify and map the data surrounding these creative tasks, categorizing it into what AI can pull, push, or produce.

First, gather the types of input, output, and contextual information, data, and artifacts involved in the mental model we built. Consider:
What might our persona need, use, observe, or consume as part of their creative process? (e.g., reference images, past work, market trends)
What might our persona create, and what are the artifacts produced?
(e.g., sketches, drafts, final products)
What contextual information is relevant to our persona's creative task? (e.g., mindset, beliefs, political climate, project constraints)

Then, consider the most useful information, data, and artifacts our AI could pull, push, or produce for our persona, asking questions like:
Pull: What can or should be gathered from our persona or other sources to inform the larger creative context? (e.g., reasoning, information about the work, outside inspiration)
Push: Where can AI most usefully generate suggestions, insights, or new ideas in the process? (e.g., creative directions, variations of work, material recommendations)
Produce: What content or artifacts might AI produce directly that are useful to, but don't replace, our user's final output? (e.g., prototypes, elements, color palettes, code snippets)

Example:
Mapping out the data designers work with during documentation, what they produce as a result, and the contextual data surrounding documentation, we ended up with examples including:
Input Data: Product requirements / The why / Stakeholder input / User Personas / The where / Modality of content
Output Data: Wireframes / prototypes / mockups / Annotations / Design iterations / Design system components / Instructions / Tokens
Contextual Data: Brand / Time constraints / Developer capabilities / Budget constraints / Designer limitations / Origins of decision reasoning

Then we mapped this data to what AI might most usefully pull, push, and produce to make documentation easier for designers:
Pull: Product requirements / User Input / Annotations / Clarification of reasoning / Design versions / Connections to Brand System
Push: Reasoning summaries
Produce: Formatted Documentation Data / Historical Captures of Reasoning / Audience-adapted Explanations

Part 3: human/AI interaction design

With our context in mind and the necessary components in place, determine the interaction design and task assignments for our System, Persona, and AI,
and what the result of this interaction will look like. In this step, it's important to consider the specific, tangible capabilities AI can perform while interacting with a user or system.

A very useful resource for thinking about discrete GenAI capabilities is "Designing With: A New Educational Module to Integrate Artificial Intelligence, Machine Learning and Data Visualization in Design Curricula."

First, using the mental model, data categories, and AI capabilities, outline key tasks throughout the creative process you're examining:
Human Tasks: What should remain human-centric because of the need for judgment, intuition, or emotional intelligence, or simply because people enjoy doing it?
AI Tasks: Review the AI capabilities list. How might the AI help our user through their creative journey? Hint: Consider explicitly naming both the capability and the data or output, e.g., "Summarize rough notes into formatted documentation."
System Tasks: What roles or tasks does the broader system play to support the interaction? (e.g., storing data, managing data flow, communicating, committing)

Then, review your work so far. Map out how your persona, AI, and system interact. Include:
Data Categories & Examples: Clearly mark input, output, and contextual data points.
Task Assignments: Use distinct symbols or colors to differentiate between human, AI, and system tasks.
Interactions & Flows: Draw lines or arrows to show how data and tasks interact, illustrating the flow of the creative process.
Feedback Loops: Highlight any iterative steps or feedback loops that influence the process.

Example:
In the end, we outlined a system intended to recognize patterns in documentation artifacts and supplement them by identifying gaps, posing clarifying questions, reframing design decisions to fit the context alongside historical reasoning, and formatting everything to system standards.
The result was a collaborative system in which designers remain in control while AI helps improve clarity and completeness, building more robust documentation while easing the process for the designer.

Here's another example of an interaction design flow that could be built using this framework:

This is an outline for an AI system that gathers information about a user's dreams, tracks their symbols and themes, curates information, and forms connections that give the user the tools to interpret and analyze their dreams at a deeper level (rather than relying on the AI to act as an authority and analyze their dreams for them).

Example of how this could be articulated through a UI beyond a chatbot.

Conclusion

Remember Guernica. When we look at it, we don't just see patterns of paint on canvas; we see Picasso's horror at the bombing of civilians, his protest against fascism, and his attempt to communicate profound human suffering. AI can analyze Guernica's composition, mimic its cubist style, or generate images that look superficially similar, but it cannot understand why Picasso painted it, cannot feel what he felt, and cannot intend to communicate meaning as he did.

Humans are creative beings. While AI can have a place in our creativity, that doesn't mean it should replace it. The framing of AI as a powerful creative tool is there, and I hope the information above helps make that distinction clear. I hope the larger community engages and calls me out on any gaps or inconsistencies I've missed while working through this. I'm sure there are many, and I'd love for a larger dialogue to grow out of this.

To summarize everything:

Generative AI produces content without regard for truth or meaning.
AI-generated content merely highlights patterns found in data without genuine understanding or regard for truth.
It doesn't think, feel, or understand; it employs the aesthetics of thought, feeling, and understanding.

We build meaning creatively by reflecting on what is generated.
When we interact with AI-generated content, we imbue it with meaning. By manipulating this content deliberately, we can turn AI into a tool that enhances creative processes.

Pull, Push, Produce.
Design AI systems to gather the context of a creative pursuit. Use this context to prompt users to think and act more creatively, and guide AI to generate content that aligns more closely with the user's vision.

Model creative processes, map contextual data, and assign the right tasks.
Understand the environment your user works within and the struggles they face. Strike a balance between the human and the AI that supports and nurtures the user's creative goals, rather than simply automating them with AI.

Consider all of the Human.
Generally, even outside creative realms, I hope this article helps those who build things think more deeply about the relationship between humans and technology, why we build things using technology, and why we don't.

Thanks, y'all. I love you.

Brandon Harwood is a designer and emergent technology strategist for people interested in tackling the complex relationship between humans and the technologies we use, to build products that deeply help the people we build for.

https://www.bah.design/
https://www.linkedin.com/in/brandon-harwood/

Collected consciousness: AI product design for empowering human creativity was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.