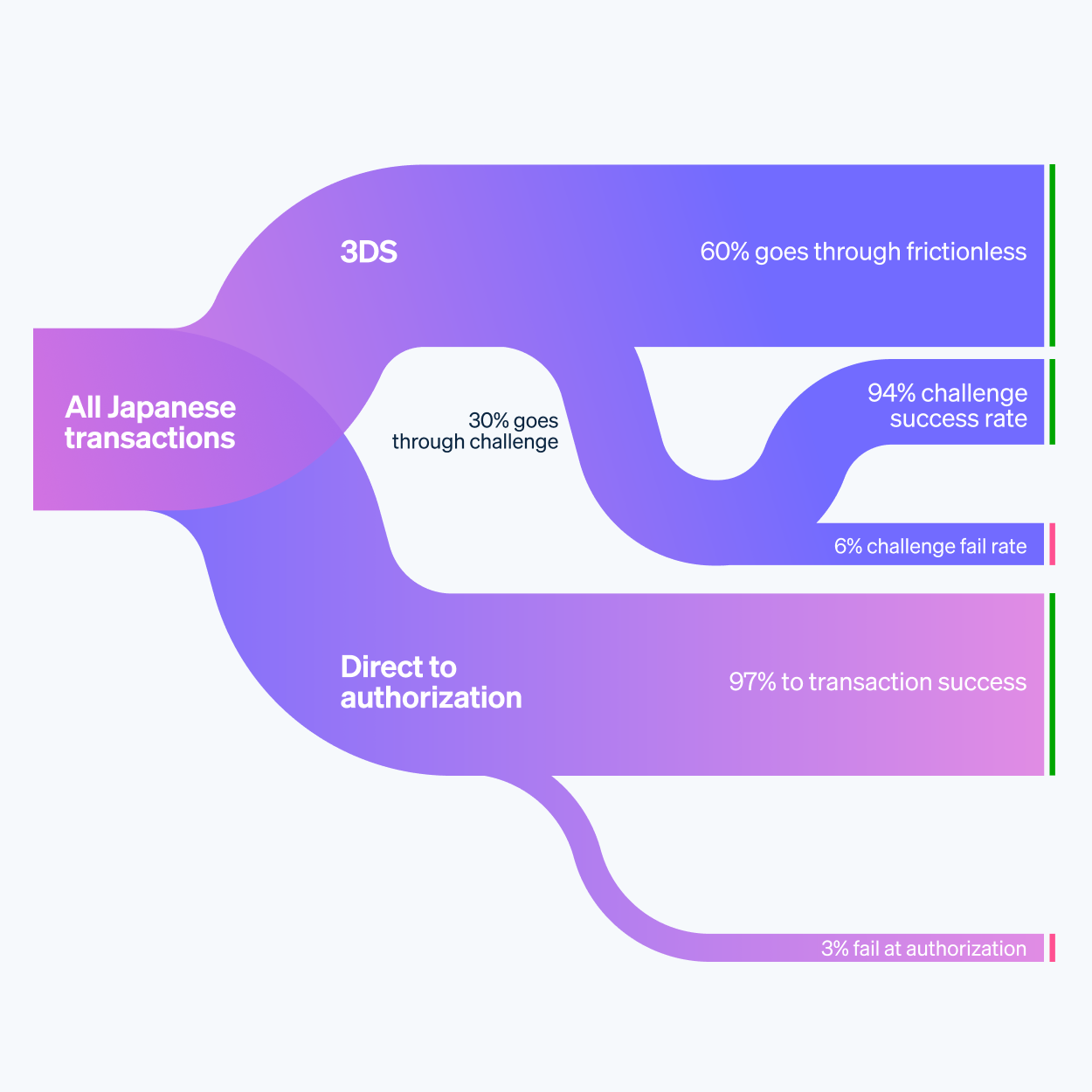

The conversion paradox: despite high two-factor authentication trigger rates in regulated markets such as France, the UK, and Japan, conversion rates remain high. It's a bit counterintuitive, but that's how it plays out: shoppers keep clicking through despite all those extra steps. So we'll keep watching to see what the next trends in 3DS bring.

#ConversionParadox

#3DSTrends

#RegulatedMarkets

#TwoFactorAuthentication

#Ecommerce